The current theoretical model holds that the universe is made of normal matter, dark energy and dark matter. A new University of Ottawa study challenges this.

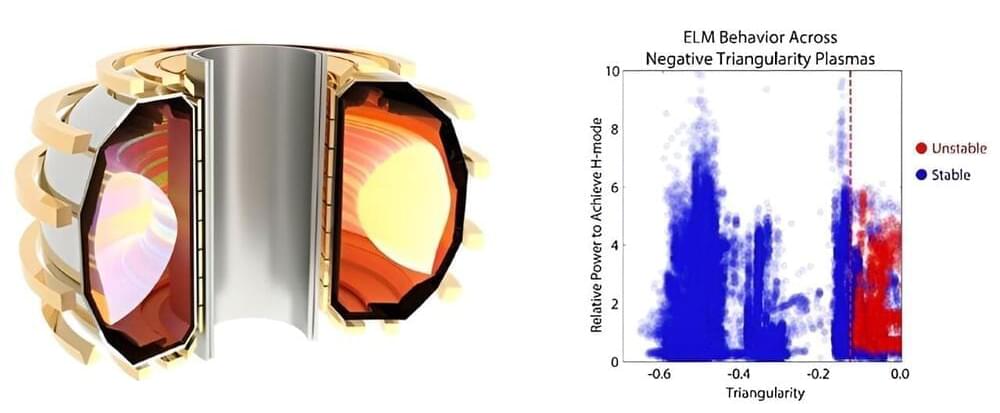

To become commercially viable, fusion power plants must create and sustain the plasma conditions necessary for fusion reactions. However, at high temperatures and densities, plasmas often develop gradients in those temperatures and densities. These gradients can grow into instabilities such as edge localized modes (ELMs).

All non-Google chat assistants are affected by a side channel that leaks the responses sent to users.

A genetically modified cow has produced milk containing human insulin, according to a new study. The proof-of-concept achievement could eventually be scaled up to produce enough insulin to ensure availability and reduce cost for all diabetics who require the life-maintaining drug.

Unable to rely on their own supply because of damaged pancreatic cells, type 1 diabetics need injectable insulin to live, as do some type 2 diabetics. The World Health Organization estimates that between 150 and 200 million people worldwide require insulin, but only about half of them are being treated with it. Access to insulin remains inadequate in many low- and middle-income countries, and in some high-income countries, and its cost and unavailability have been well documented.

In a newly published study led by the Department of Animal Sciences in the College of Agricultural, Consumer and Environmental Sciences at the University of Illinois Urbana-Champaign and the Universidade de São Paulo, researchers say they may have developed a way of eliminating insulin scarcity and reducing its cost using cows. Yep, cows.

Amazon.com’s self-driving car unit, Zoox, is seeking to stay abreast of rival Waymo by expanding its vehicles’ testing in California and Nevada to include a wider area, higher speeds and nighttime driving.

The Los Angeles Police Department is warning residents that burglars are using WiFi jammers to easily disarm “connected” surveillance cameras and alarms that are available for cheap on marketplaces like Amazon.

As LA-based news station KTLA5 reports, tech-savvy burglars have been using WiFi jammers, which are small devices that can confuse and overload wireless devices with traffic, to enter homes without setting off alarms — a worrying demonstration of just how easily affordable home security devices from the likes of Ring and Eufy can be disarmed.

As Tom’s Hardware reported last month, instances of WiFi jammers being used by criminals go back several years. Jammers are not only easily available to purchase online, they’re also pretty cheap and can go for as little as $40.

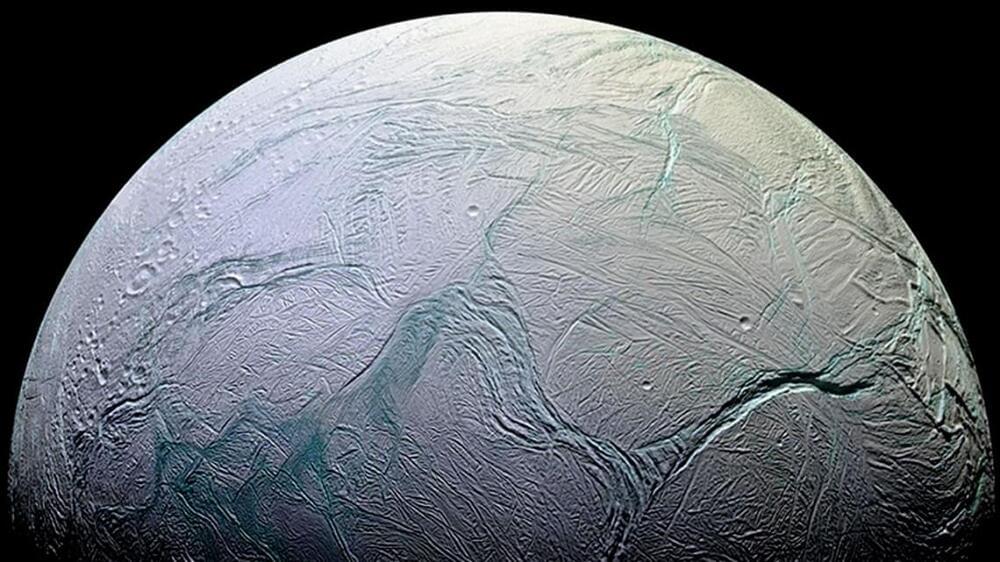

Cornell University astrobiologists have devised a novel way to determine ocean temperatures of distant worlds based on the thickness of their ice shells, effectively conducting oceanography from space.

Available data showing ice thickness variation already allows a prediction for the upper ocean of Enceladus, a moon of Saturn, and a NASA mission’s planned orbital survey of Europa’s ice shell should do the same for the much larger Jovian moon, enhancing the mission’s findings about whether it could support life.

The researchers propose that a process called “ice pumping,” which they’ve observed below Antarctic ice shelves, likely shapes the undersides of Europa’s and Enceladus’ ice shells, but should also operate at Ganymede and Titan, large moons of Jupiter and Saturn, respectively. They show that temperature ranges where the ice and ocean interact — important regions where ingredients for life may be exchanged — can be calculated based on an ice shell’s slope and changes in water’s freezing point at different pressures and salinities.

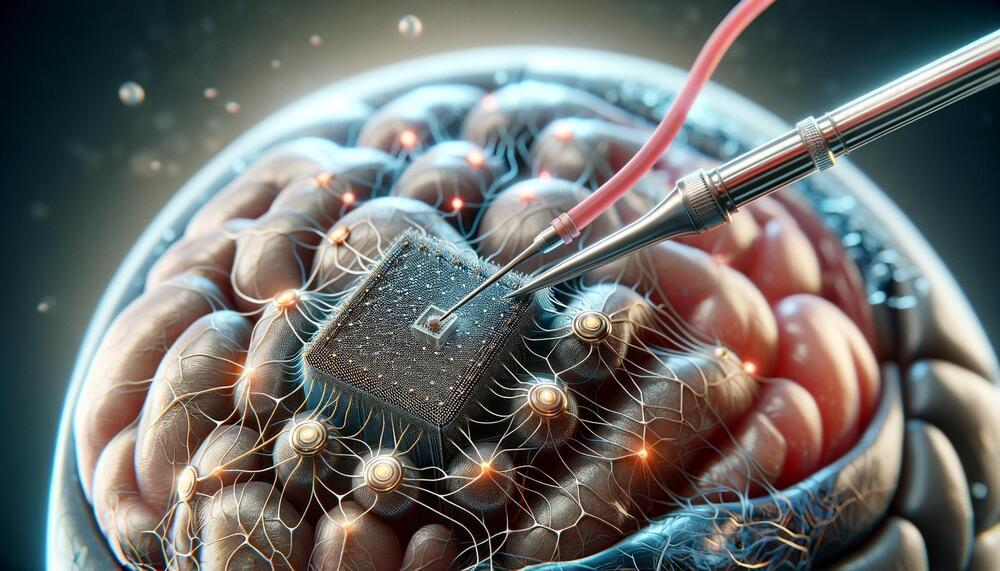

Groundbreaking graphene neurotechnology developed by ICN2 and collaborators promises transformative advances in neuroscience and medical applications, demonstrating high-precision neural interfaces and targeted nerve modulation.

A study published in Nature Nanotechnology presents an innovative graphene-based neurotechnology with the potential for a transformative impact in neuroscience and medical applications. This research, spearheaded by the Catalan Institute of Nanoscience and Nanotechnology (ICN2) together with the Universitat Autònoma de Barcelona (UAB) and other national and international partners, is currently being developed for therapeutic applications through the spin-off INBRAIN Neuroelectronics.

A liquid hydrogen airliner with a range of over 5,000 miles. Its developers say it is feasible by 2030 and would limit pollution.

A breakthrough liquid hydrogen-fueled aircraft concept developed in the United Kingdom could take passengers from London to San Francisco with no layover.

That’s because the massive plane would have an operational range of 5,250 nautical miles (equivalent to air miles) and wouldn’t need to land to refuel. That is more than enough range for the flight, which takes roughly 11 hours and covers 4,664 nautical miles.
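As a rough check on those figures, the short Python sketch below works out how much range would be left over on the London to San Francisco route. It uses only the two distances quoted above plus the standard definitions of the nautical mile and the statute mile. The function and constant names are illustrative, not from the FlyZero project, and the sketch ignores fuel reserves, winds and routing, which real flight planning would have to account for.

```python
# Figures quoted in the article (nautical miles).
AIRCRAFT_RANGE_NMI = 5250   # stated operational range of the concept aircraft
ROUTE_DISTANCE_NMI = 4664   # stated London to San Francisco distance

# Standard unit definitions.
KM_PER_NMI = 1.852              # 1 nautical mile in kilometres
KM_PER_STATUTE_MILE = 1.609344  # 1 statute (land) mile in kilometres


def range_margin_nmi(aircraft_range: float, route_distance: float) -> float:
    """Return the range left over after flying the route, in nautical miles."""
    return aircraft_range - route_distance


def nmi_to_statute_miles(nmi: float) -> float:
    """Convert nautical miles to statute miles via kilometres."""
    return nmi * KM_PER_NMI / KM_PER_STATUTE_MILE


if __name__ == "__main__":
    margin = range_margin_nmi(AIRCRAFT_RANGE_NMI, ROUTE_DISTANCE_NMI)
    share = margin / ROUTE_DISTANCE_NMI
    print(f"Margin: {margin} nmi ({share:.1%} of the route distance)")
    print(f"Range in statute miles: {nmi_to_statute_miles(AIRCRAFT_RANGE_NMI):.0f}")
```

On the quoted numbers the margin is 586 nautical miles, or about an eighth of the route distance.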

This concept plane was developed by the FlyZero project, a program led by the Aerospace Technology Institute with the goal of providing air travel with no pollution (zero-carbon) in the next decade, as the agency states on its website.