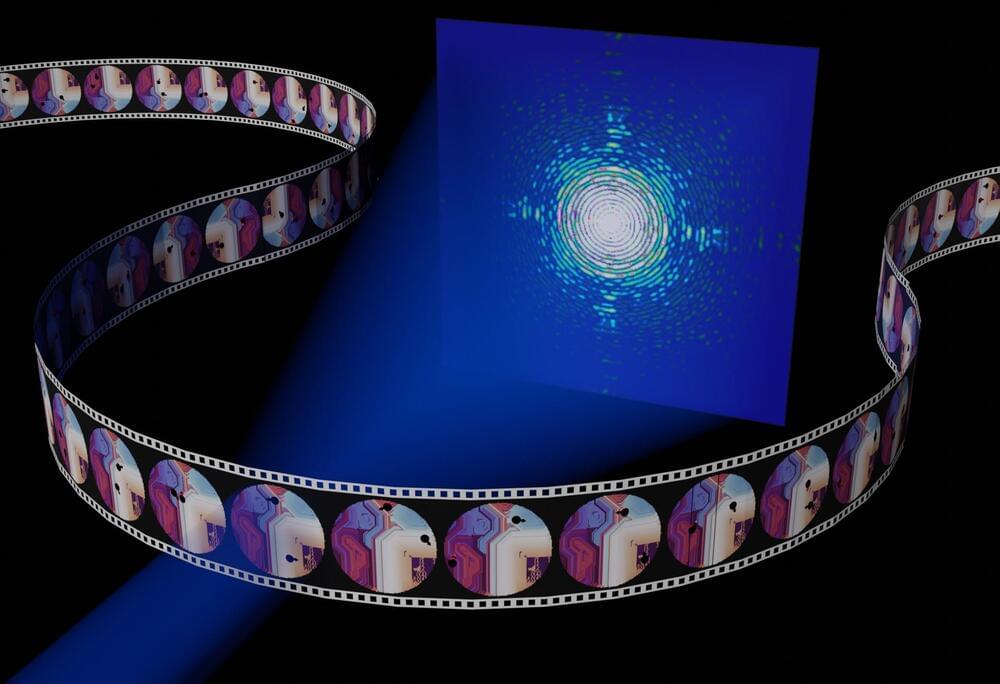

Coherent X-ray imaging has emerged as a powerful tool for studying both nanoscale structures and dynamics in condensed matter and biological systems. Its nanometric resolution, together with chemical sensitivity and spectral information, makes it well suited to understanding processes such as catalysis, light harvesting, or mechanics.

Unfortunately, these processes may be stochastic in nature. Obtaining freeze-frame images of stochastic dynamics requires very high X-ray fluxes, which can heat or even destroy the sample.

In addition, detector acquisition rates are insufficient to capture fast nanoscale processes. Stroboscopic techniques allow imaging of ultrafast repeated processes, but only the mean dynamics can be extracted. This rules out the measurement of stochastic processes, in which the system evolves along a different path in phase space during each measurement. These two obstacles prevent coherent imaging from being applied to complex systems.