2 Department of Neurology and.

3 Neuroregeneration and Stem Cell Programs, Institute for Cell Engineering, Johns Hopkins University School of Medicine, Baltimore, Maryland, USA.

Every day, your body replaces billions of cells—and yet, your tissues stay perfectly organized. How is that possible?

A team of researchers at ChristianaCare’s Helen F. Graham Cancer Center & Research Institute and the University of Delaware believes it has found an answer.

In a study published in Biology of the Cell, they show that just five basic rules may explain how the body maintains the complex structure of tissues such as those in the colon, even as its cells are constantly dying and being replaced.

IN A NUTSHELL

🌐 The SynHG project aims to synthesize a complete human genome, opening new horizons in biotechnology.

⚖️ Ethical considerations are central to the project, with a focus on responsible innovation and diverse cultural perspectives.

🧬 Initial steps involve creating a fully synthetic human chromosome, leveraging advances in synthetic biology and DNA chemistry.

Jared Leto, Jeff Bridges, Gillian Anderson, Evan Peters, Tron 3. © 2025 Disney.

How is ventilation at various depth layers of the Atlantic connected and what role do changes in ocean circulation play? Researchers from Bremen, Kiel and Edinburgh have pursued this question and their findings have now been published in Nature Communications.

Salt water in the oceans is not the same everywhere; there are water layers that have different salinities and temperatures. The phenomenon of thermohaline circulation—which results from the differences in density caused by variations in temperature and salinity—drives the Atlantic Meridional Overturning Circulation (AMOC), among other current patterns. Near the surface, however, ocean circulation is also influenced by winds, which are responsible for producing the large subtropical gyres in the Atlantic, both on the northern and southern sides of the equator.

These gyres play an important role in marine ecosystems because they provide organisms on the sea floor with oxygen, which is then consumed in part by the decomposition of organic matter. If too little fresh, cold, oxygen-rich water is transported in to ventilate these areas, oxygen minimum zones result.
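To make the density argument behind thermohaline circulation concrete, here is a minimal sketch using a linearized equation of state. It is not taken from the study: the function name, the reference values, and the expansion and contraction coefficients are illustrative round numbers chosen as assumptions, and real oceanographic work uses a full nonlinear equation of state.

```python
def seawater_density(temp_c, salinity,
                     rho0=1027.0,   # assumed reference density, kg/m^3
                     t0=10.0,       # assumed reference temperature, deg C
                     s0=35.0,       # assumed reference salinity, g/kg
                     alpha=1.7e-4,  # assumed thermal expansion coefficient, 1/K
                     beta=7.6e-4):  # assumed haline contraction coefficient, kg/g
    """Linearized estimate of seawater density in kg/m^3.

    Warmer water is lighter (negative temperature term);
    saltier water is heavier (positive salinity term).
    """
    return rho0 * (1.0 - alpha * (temp_c - t0) + beta * (salinity - s0))


# Hypothetical water masses, chosen only for illustration.
warm_surface = seawater_density(temp_c=20.0, salinity=36.0)
cold_deep = seawater_density(temp_c=2.0, salinity=34.9)

print(f"warm surface water: {warm_surface:.2f} kg/m^3")
print(f"cold deep water:    {cold_deep:.2f} kg/m^3")
print("cold water is denser:", cold_deep > warm_surface)
```

In this simplified picture, the cold water comes out denser even though it is slightly fresher, which is why cold, oxygen-rich water tends to sink and can ventilate deeper layers.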

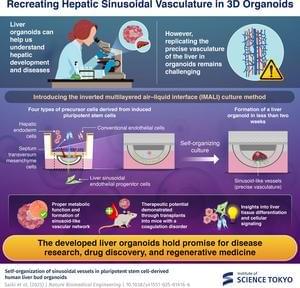

Liver organoids with proper blood vessel networks have been successfully produced, as reported by researchers from the Institute of Science Tokyo and Cincinnati Children’s Hospital Medical Center. This advancement addresses a major challenge in replicating the liver’s complex vasculature in lab-grown tissues. Using a novel 3D culture system, the researchers achieved the self-organization of four distinct precursor cell types into functional organoids, capable of producing essential clotting factors in a hemophilia A mouse model.

Over the past decade, organoids have become a major focus in biomedical research. These simplified, lab-grown organs can mimic important aspects of human biology, serving as an accessible and powerful tool to study diseases and test drugs. However, replicating the intricate arrangements and networks of blood vessels found in real organs remains a major hurdle. This is especially true for the liver, whose metabolic and detoxification functions rely on its highly specialized vasculature.

Because of such limitations, scientists haven’t fully tapped into the potential of liver organoids for studying and treating liver diseases. For example, in hemophilia A, a condition where the body cannot produce enough of a critical clotting factor, current treatments often involve expensive and frequent injections. An ideal long-term solution would restore the body’s ability to produce its own clotting factors, which could, in theory, be achieved using liver organoids with fully functional blood vessel structures called sinusoids.

Eight healthy babies have been born in the UK using a new IVF technique that successfully reduced their risk of inheriting genetic diseases from their mothers, the results of a world-first trial said Wednesday.

The findings were hailed as a breakthrough that raises hopes that women with mutations in their mitochondrial DNA could one day have children without passing debilitating or deadly diseases on to them.

One out of every 5,000 births is affected by mitochondrial diseases, which cannot be treated and whose symptoms include impaired vision, diabetes and muscle wasting.

Researchers from the Weizmann Institute and Intel Labs have presented a new algorithm that allows different AI models to unite and work together to increase efficiency and reduce costs.