People store large quantities of data on their electronic devices and share some of this data with others, whether for professional or personal reasons. Data compression methods are thus of the utmost importance: they can make devices and communications more efficient, reducing users' reliance on cloud data services and external storage devices.

Researchers at the Central China Institute of Artificial Intelligence, Peng Cheng Laboratory, Dalian University of Technology, the Chinese Academy of Sciences and the University of Waterloo recently introduced LMCompress, a new data compression approach based on large language models (LLMs), such as the model underpinning the AI conversational platform ChatGPT.

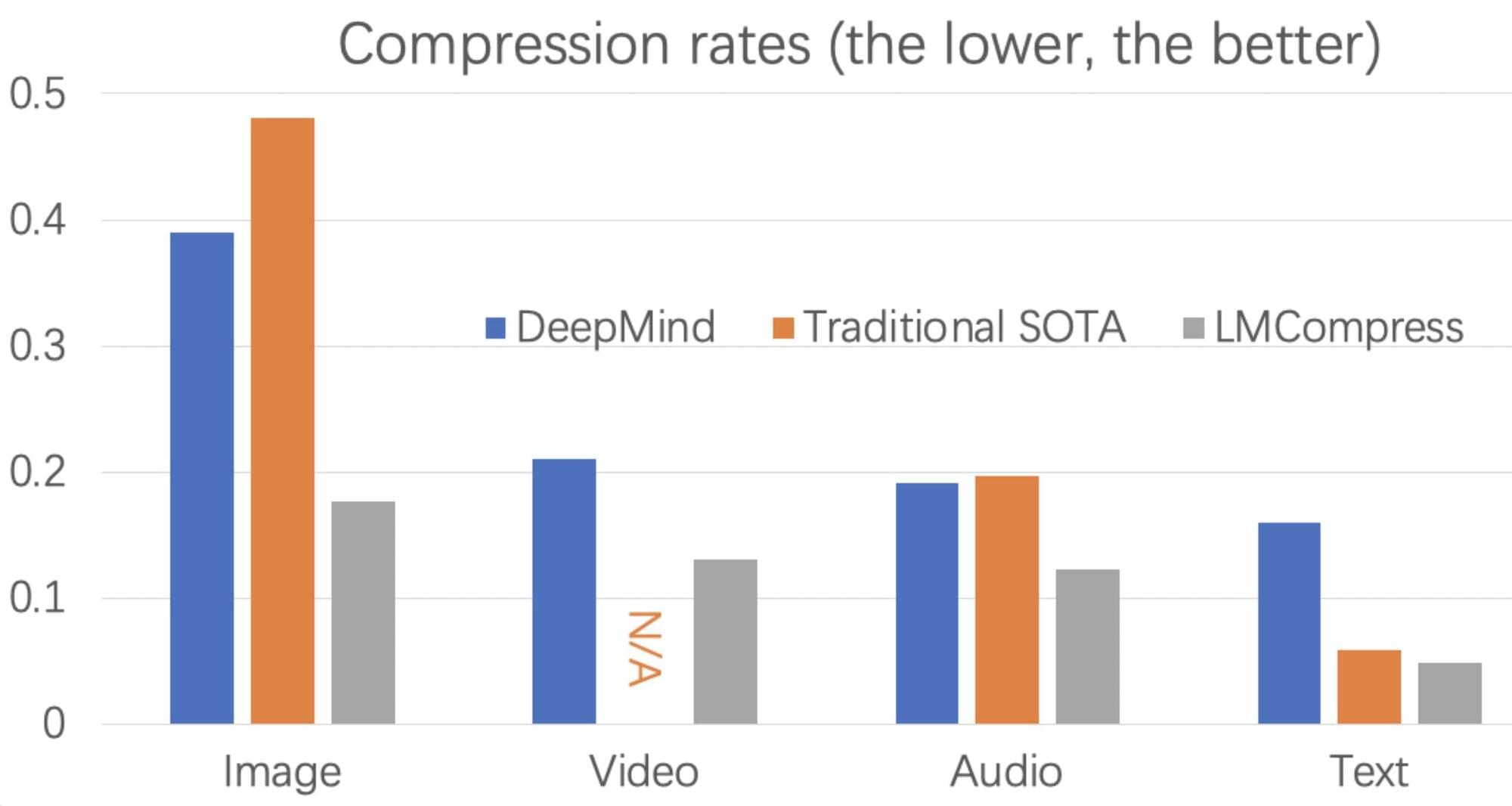

Their proposed method, outlined in a paper published in Nature Machine Intelligence, was found to compress data significantly more effectively than classical data compression algorithms.