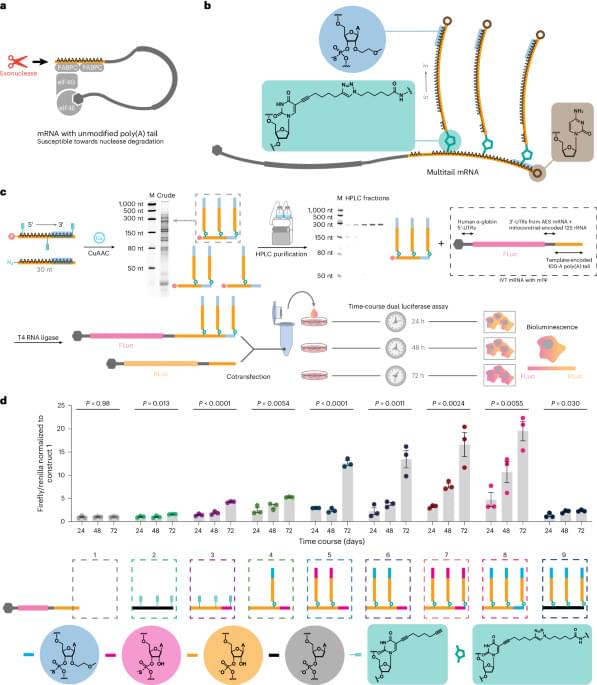

mRNA with engineered poly(A) tails produces higher and more prolonged protein expression.

THURSDAY, March 21, 2024 (HealthDay News) — A germ commonly found in the human mouth can travel to colon tumors and appears to speed their growth, new research shows.

The finding might lead to new insights into fighting colon cancer, which kills more than 52,000 Americans each year, according to the American Cancer Society.

Researchers at the Fred Hutchinson Cancer Center in Seattle looked at levels of a particular oral bacterium, Fusobacterium nucleatum, in colon tumor tissues taken from 200 colon cancer patients.

The big picture: Sand batteries might not be as efficient for generating electricity as they are for heating, but they could still have a huge impact on climate emissions — about 9% of the heat needed for buildings and industry comes from district heating systems, and 90% of those rely on fossil fuels.

We could then supplement the sand batteries with another form of storage, such as flow batteries, to generate electricity from renewables year-round, completing the transition to a clean energy future.

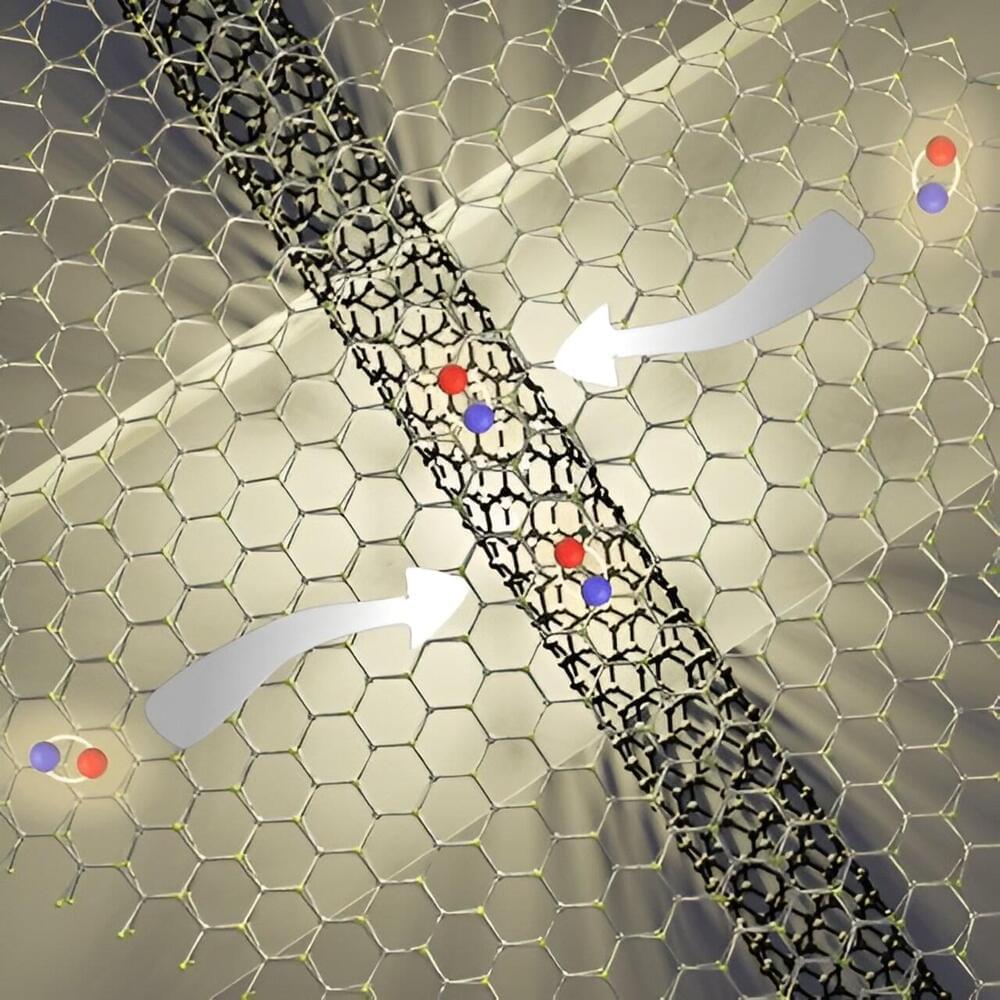

Diamond is the strongest material known. However, another form of carbon has been predicted to be even tougher than diamond. The challenge is how to create it on Earth.

The eight-atom body-centered cubic (BC8) crystal is a distinct carbon phase: not diamond, but very similar. BC8 is predicted to be a stronger material, exhibiting a 30% greater resistance to compression than diamond. It is believed to be found in the center of carbon-rich exoplanets. If BC8 could be recovered under ambient conditions, it could be classified as a super-diamond.

This crystalline high-pressure phase of carbon is theoretically predicted to be the most stable phase of carbon under pressures surpassing 10 million atmospheres.

In preparation for a permanent human colony on the Moon, DARPA has awarded a contract to Northrop Grumman to develop a lunar railway concept, as part of the 10-year Lunar Architecture (LunA-10) Capability Study.

Running a train on the Moon may seem profoundly silly, but there is some very firm logic behind it. Even as the first astronauts were landing on the Sea of Tranquility in 1969, it was realized that a permanent human presence on the Moon would require an infrastructure to maintain it. That includes mines for water ice, nuclear power plants, factories, and railways.

Though many people think the Moon is small, it is in fact a very large place, with a surface area roughly comparable to that of Africa. Over such an expanse, even a limited presence would require some sort of transport system to link various outposts and activities.

In the last decade, advances in reprogramming somatic cells into induced pluripotent stem cells (iPSCs) have greatly improved their use as disease models. In the field of neurodegenerative diseases in particular, iPSC technology has made it possible to culture all types of patient-specific neural cells in vitro, facilitating not only the investigation of disease etiopathology but also the testing of new drugs and cell therapies, and giving rise to the innovative concept of personalized medicine. Moreover, iPSCs can be differentiated and organized into 3D organoids, providing a tool that mimics the complexity of the brain’s architecture. Furthermore, recent developments in 3D bioprinting have enabled the study of physiological cell-to-cell interactions by combining several biomaterials, scaffolds, and cells.

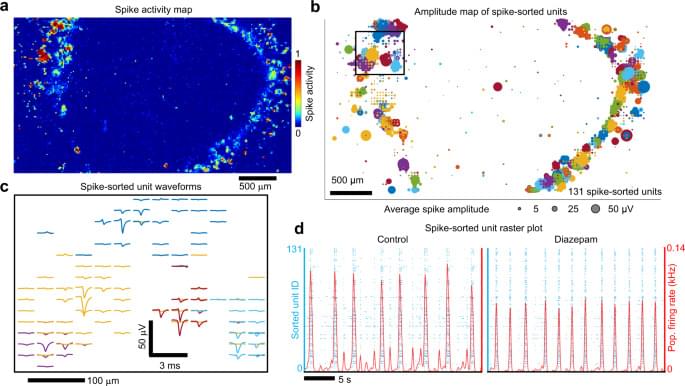

Human brain organoids are intrinsically self-organized neuronal ensembles grown from three-dimensional assemblies of human iPSCs. As shown here, brain organoids offer a window into the complex neuronal activity that emerges from intrinsically formed circuits capable of mirroring aspects of the developing human brain32. Applying high-density CMOS microelectrode arrays (MEAs) to large multicellular networks spanning millimeters of brain organoid cross-sections, we isolated single-unit activity and computed the intervals between successive action potentials, referred to as interspike intervals (ISIs), excluding intervals attributable to refractoriness. As in neocortical neurons in vivo, we observed action potentials with irregular ISIs that followed a Poisson-like process. Of 224 neurons analyzed from four different organoids, 16% ± 8% of the total units fit a Poisson distribution (Fig. 3). By definition, the coefficient of variation (CV) of the ISIs approaches one for a perfectly homogeneous Poisson process, whereas purely periodic spike trains have a CV of zero. Thus, a minority of units had highly irregular ISIs (Fig. 3), whereas the majority displayed comparatively regular spiking patterns with less variation (lower CV), which may serve to transmit lower-noise spike-rate signals. ISI distributions have also been fitted with gamma distributions, which reduce to an exponential distribution when the shape parameter k equals one and approach a normal distribution for large k, thus providing a measure of ISI regularity similar to the CV28. Neurons process information differently depending on architectonically defined brain regions with specialized cellular compositions and intrinsic circuitry67,68,69. Indeed, neuronal firing varies considerably across cortical regions of monkeys28,70,71. Therefore, different organizational features across the brain organoid may give rise to different dynamics that account for the observed ISI distributions.
The minority of units with irregular ISI distributions may reflect higher levels of entropy and circuit complexity, with increased capacity for computation and information transfer, as found in prefrontal cortex compared with the more regular firing patterns found in motor regions28.
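To make the CV argument concrete, here is a minimal sketch on synthetic spike trains (not the organoid data): it computes the ISI coefficient of variation for a homogeneous Poisson train, a perfectly periodic train, and a gamma-ISI train. The firing rate, train length, shape value k = 4, and the helper name `isi_cv` are hypothetical choices for illustration.

```python
import numpy as np

def isi_cv(spike_times):
    """Coefficient of variation (std / mean) of the interspike intervals."""
    isis = np.diff(np.sort(np.asarray(spike_times, dtype=float)))
    return isis.std() / isis.mean()

rng = np.random.default_rng(0)
rate_hz, n_spikes = 5.0, 10_000   # hypothetical firing rate and train length

# Homogeneous Poisson process: exponential ISIs, so CV -> 1.
poisson_train = np.cumsum(rng.exponential(1.0 / rate_hz, size=n_spikes))

# Perfectly periodic train: constant ISIs, so CV = 0 (up to rounding error).
periodic_train = np.arange(n_spikes) / rate_hz

# Gamma-distributed ISIs with shape k and the same mean ISI: CV = 1 / sqrt(k).
# k = 1 reduces to the exponential (Poisson) case; larger k is more regular.
k = 4.0
gamma_train = np.cumsum(rng.gamma(shape=k, scale=1.0 / (rate_hz * k), size=n_spikes))

for name, train in [("poisson", poisson_train),
                    ("periodic", periodic_train),
                    ("gamma k=4", gamma_train)]:
    print(f"{name:10s} CV = {isi_cv(train):.3f}")
```

The gamma family is convenient here because a single parameter k sweeps continuously from Poisson-like (k = 1, CV near 1) toward clock-like firing (large k, CV near 0).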

We derived a graph whose weighted edges couple pairs of single-unit nodes that send and receive spikes over a wide spatial range. Because of the thickness of our organoid slices, many neurons in the slice are too far from any electrode for their spikes to be detected53. Thus, we cannot rule out the possibility that intermediate, undetected neurons account for the coupling between two correlated units. The graph does not imply downstream or upstream routes of information transfer beyond the individual pairwise couplings. Importantly, what the network does demonstrate is a non-random pattern of a relatively small number of statistically strong (reliable) couplings against a backdrop of weaker couplings. As demonstrated in the murine brain51,52, edges with high anatomical connection strength form a non-random framework against a background of weaker ones (Fig. 6 and Supplementary Fig. 14). The majority of the single units (nodes), which we refer to as brokers, have large proportions of both incoming and outgoing edges. The dynamic balance between receivers and senders may reflect short-term plasticity72.
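The sender, receiver, and broker roles can be sketched by comparing each node's total outgoing and incoming edge weight in a directed coupling matrix. This is a hypothetical illustration: the source does not state its exact classification rule, and the function name `classify_nodes`, the 0.8/0.2 cutoffs, and the toy weights are assumptions.

```python
import numpy as np

def classify_nodes(w, sender_cut=0.8, receiver_cut=0.2):
    """Label nodes of a weighted directed graph by their send/receive balance.

    w[i, j] is the coupling weight of the directed edge i -> j.
    The ratio out / (in + out) is 1 for pure senders and 0 for pure receivers;
    nodes in between, with substantial incoming and outgoing weight, are
    labelled brokers. The cutoffs are illustrative assumptions.
    """
    w = np.asarray(w, dtype=float)
    out_strength = w.sum(axis=1)   # total weight leaving each node
    in_strength = w.sum(axis=0)    # total weight arriving at each node
    labels = []
    for out_s, in_s in zip(out_strength, in_strength):
        total = out_s + in_s
        if total == 0:
            labels.append("isolated")
            continue
        ratio = out_s / total
        if ratio >= sender_cut:
            labels.append("sender")
        elif ratio <= receiver_cut:
            labels.append("receiver")
        else:
            labels.append("broker")
    return labels

# Toy 4-unit coupling matrix (hypothetical weights).
w = np.array([
    [0.0, 0.9, 0.5, 0.0],   # unit 0 only sends
    [0.0, 0.0, 0.7, 0.0],   # unit 1 sends to 2 and receives from 0 and 2
    [0.0, 0.2, 0.0, 0.0],   # unit 2 mostly receives
    [0.0, 0.0, 0.0, 0.0],   # unit 3 has no detected couplings
])
print(classify_nodes(w))  # ['sender', 'broker', 'receiver', 'isolated']
```

Using summed weights rather than raw edge counts lets a few strong couplings dominate a node's role, which matches the emphasis on strong edges against a background of weaker ones.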

Brain organoids, composed of roughly one million cells, have neuronal assemblies of sufficient size, cellular orientation, connectivity, and co-activation to generate field potentials in the extracellular space from their collective transmembrane currents. The basis for low-frequency LFPs may be the cellular diversity that emerges in the organoid: the variety of GABAergic cells (Fig. 2), consistent with their role in generating highly correlated activity networks detected as LFPs31; parvalbumin cells (Fig. 2c), associated with sustaining network dynamics73; and axon tracts that extend over millimeters (Fig. 2b). Coherence of theta oscillations was observed across spatial extents of the organoid and was unlikely to be due to volume conduction from distant sources, as happens in EEG and MEG measurements54, because the voltage recordings were conducted within a small tissue volume (≈3.5 mm³). Consistent with minimal volume conduction effects, we validated the theta oscillations by demonstrating that the imaginary part of coherency54 projected onto the same spatial locations identified by cross-correlation analysis (Supplementary Fig. 19). Correlations between theta oscillations and local neuronal firing (Fig. 7) strongly supported a local source for the rhythmic activity19,20,53. The local volume through which theta dispersed extended into the z-dimension, as shown with the Neuropixels shank (Fig. 9).
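Why the imaginary part of coherency helps: volume conduction copies a source into several electrodes with essentially zero phase lag, which makes the cross-spectrum nearly real, so only genuinely time-lagged interactions contribute to its imaginary part. The sketch below illustrates this with synthetic 6 Hz "theta" signals, assuming non-overlapping Hann-windowed segments; the sampling rate, noise level, segment length, and the `coherency` helper are hypothetical, and this is not the authors' analysis pipeline.

```python
import numpy as np

def coherency(x, y, fs, nperseg):
    """Complex coherency C_xy(f) = S_xy(f) / sqrt(S_xx(f) * S_yy(f)),
    with spectra averaged over non-overlapping Hann-windowed segments."""
    win = np.hanning(nperseg)
    n_seg = len(x) // nperseg
    n_freq = nperseg // 2 + 1
    sxy = np.zeros(n_freq, dtype=complex)
    sxx = np.zeros(n_freq)
    syy = np.zeros(n_freq)
    for i in range(n_seg):
        seg = slice(i * nperseg, (i + 1) * nperseg)
        X = np.fft.rfft(win * (x[seg] - x[seg].mean()))
        Y = np.fft.rfft(win * (y[seg] - y[seg].mean()))
        sxy += X * np.conj(Y)      # cross-spectrum
        sxx += np.abs(X) ** 2      # auto-spectra
        syy += np.abs(Y) ** 2
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, sxy / np.sqrt(sxx * syy)

fs = 1000.0                          # hypothetical sampling rate (Hz)
t = np.arange(0, 20.0, 1.0 / fs)     # 20 s of synthetic data
rng = np.random.default_rng(0)
theta = np.sin(2 * np.pi * 6.0 * t)  # 6 Hz "theta" source

x = theta + 0.5 * rng.standard_normal(t.size)
# Same source seen at a second site with zero lag (volume-conduction-like).
y_zero = 0.8 * theta + 0.5 * rng.standard_normal(t.size)
# A genuinely delayed copy: quarter-cycle (90 degree) phase lag.
y_lag = np.sin(2 * np.pi * 6.0 * t - np.pi / 2) + 0.5 * rng.standard_normal(t.size)

freqs, c_zero = coherency(x, y_zero, fs, nperseg=1000)
_, c_lag = coherency(x, y_lag, fs, nperseg=1000)
k = int(np.argmin(np.abs(freqs - 6.0)))   # index of the 6 Hz bin
print(f"zero lag:    |C| = {abs(c_zero[k]):.2f}, Im C = {c_zero[k].imag:+.2f}")
print(f"quarter lag: |C| = {abs(c_lag[k]):.2f}, Im C = {c_lag[k].imag:+.2f}")
```

Both pairs are almost perfectly coherent in magnitude, but only the lagged pair has a large imaginary part, which is why a map of imaginary coherency is a conservative check against zero-lag mixing.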

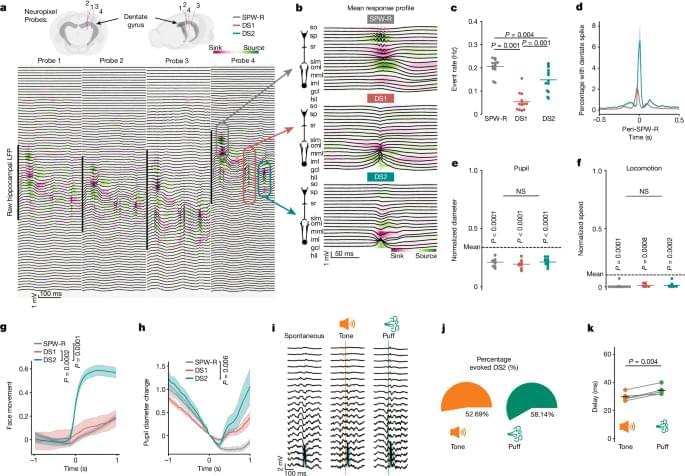

Here we show that DS2 is an online, synchronous population event accompanied by widespread increases in neural activity and brief arousals. On the basis of the stationary activation of place cells with fields close to the mouse’s current location, we propose that DS2 may serve as a mechanism to regularly ground the hippocampal representation of position in an environment during immobility. Rapidly switching between current (DS2) and remote (SPW-R) locations would enable cognitive flexibility that varies with sudden changes in the animal’s internal state or changes in the environment (for example, a startling noise). Synchronous neural activity during DS2 may provide windows of opportunity for synaptic plasticity, consistent with our findings linking DS2 to associative memory formation.

At the microcircuit level, distinct brain states are shaped by the non-uniform recruitment of local inhibitory cells, which are key for directing information flow27. As arousal-activated AACs heterogeneously innervate principal cells19,20 and are highly active during DS2 but mostly silent during SPW-Rs, this GABAergic cell (and probably others, such as TORO cells) may be important in regulating the distinct ensemble activity between DS2 and SPW-Rs. At a network level, DS2 is thought to be primarily triggered by the medial entorhinal cortex, which contains neurons that encode self-referenced movement variables, locations and environmental borders28,29,30. This self-referenced spatial input may indeed be key for recruiting spatially tuned hippocampal cells corresponding to an animal’s current position during DS2. Both tones and air puffs reliably evoked DS2 and promoted current position encoding, but the identity of the stimulus (tone versus puff) could not be reliably decoded.
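"Current position encoding" can be illustrated with a generic memoryless Bayesian decoder of the kind commonly applied to place cells, assuming independent Poisson spiking, a flat prior, and Gaussian place fields. The source does not say which decoder was used; the track length, number of cells, field width, peak and baseline rates, window length, and the helper name `bayes_decode` are all hypothetical.

```python
import numpy as np

def bayes_decode(counts, tuning, dt):
    """Posterior over position bins given spike counts in one time window.

    Assumes independent Poisson spiking and a flat prior:
    log P(x | n) = sum_i n_i * log(f_i(x) * dt) - dt * sum_i f_i(x) + const.
    counts: (n_cells,) spike counts; tuning: (n_cells, n_bins) rates in Hz.
    """
    lam = tuning * dt                      # expected counts per cell and bin
    log_post = counts @ np.log(lam) - lam.sum(axis=0)
    log_post -= log_post.max()             # numerical stability before exp
    post = np.exp(log_post)
    return post / post.sum()

# Hypothetical linear track: 100 one-centimetre bins, 20 cells with Gaussian fields.
positions = np.arange(100)
centers = np.linspace(0, 95, 20)
peak_hz, width_cm, baseline_hz = 20.0, 5.0, 0.1
tuning = baseline_hz + peak_hz * np.exp(
    -0.5 * ((positions[None, :] - centers[:, None]) / width_cm) ** 2
)

rng = np.random.default_rng(1)
true_pos, dt = 40, 0.5                     # animal at 40 cm, 500 ms window
counts = rng.poisson(tuning[:, true_pos] * dt)
posterior = bayes_decode(counts, tuning, dt)
decoded = int(np.argmax(posterior))
print("true:", true_pos, "decoded:", decoded)
```

The small baseline rate keeps every expected count positive, so the logarithm is always defined even for bins far from a cell's field.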