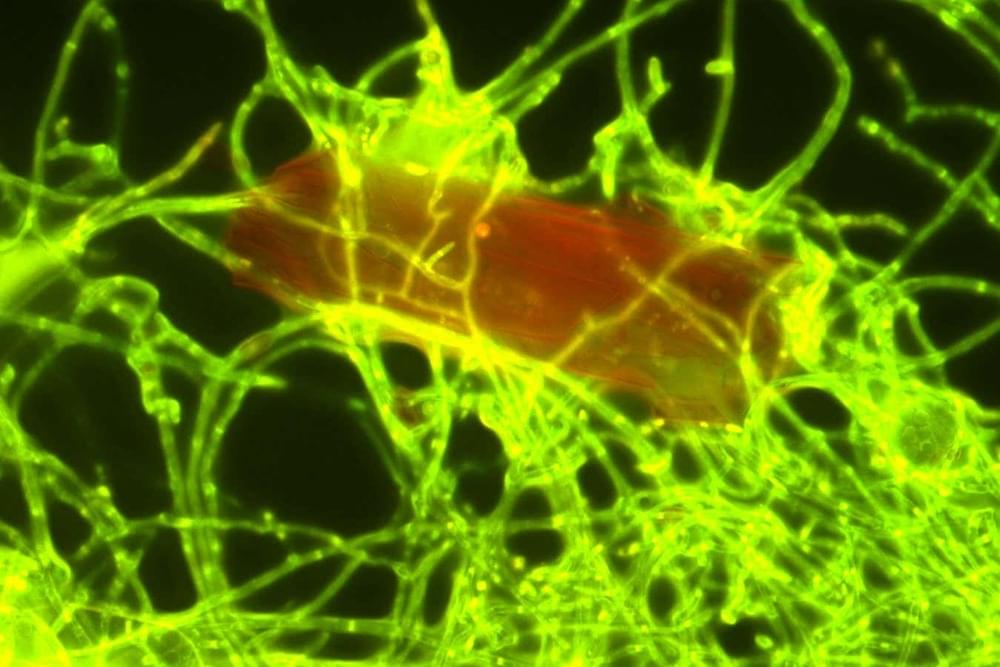

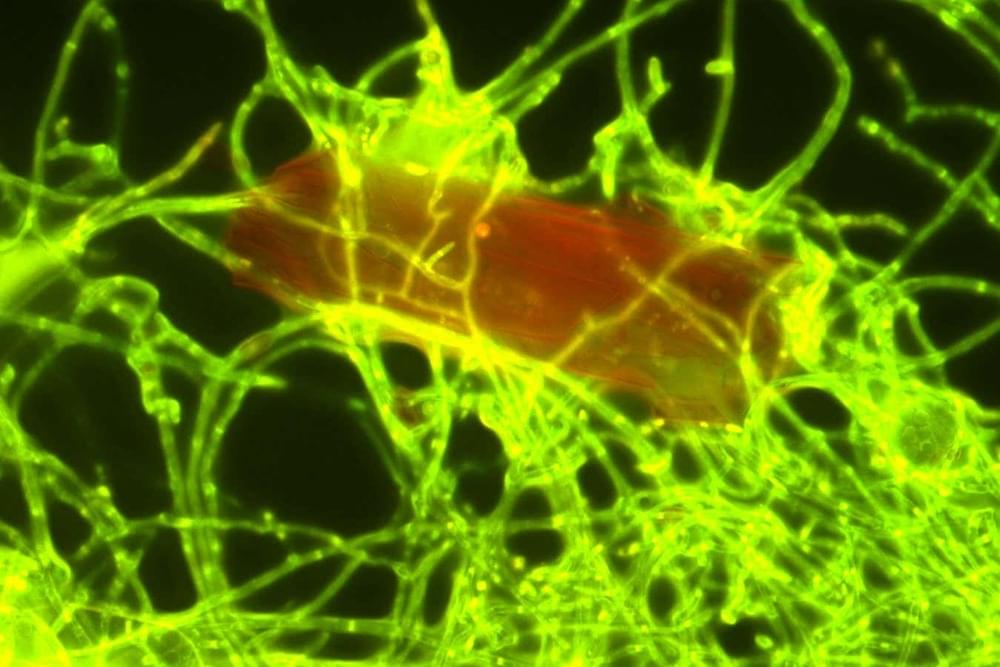

The plastic-digesting capabilities of the fungus Parengyodontium album could be harnessed to degrade polyethylene, the most abundant type of plastic in the ocean.

1. Privacy is important, but not always guaranteed. Grantcharov realized very quickly that the only way to get surgeons to use the black box was to make them feel protected from possible repercussions. He has designed the system to record actions but hide the identities of both patients and staff, even deleting all recordings within 30 days. His idea is that no individual should be punished for making a mistake.

The black boxes render each person in the recording anonymous; an algorithm distorts people’s voices and blurs out their faces, transforming them into shadowy, noir-like figures. So even if you know what happened, you can’t use it against an individual.

But this process is not perfect. Before 30-day-old recordings are automatically deleted, hospital administrators can still see the operating room number, the time of the operation, and the patient’s medical record number, so even if personnel are technically de-identified, they aren’t truly anonymous. The result is a sense that “Big Brother is watching,” says Christopher Mantyh, vice chair of clinical operations at Duke University Hospital, which has black boxes in seven operating rooms.

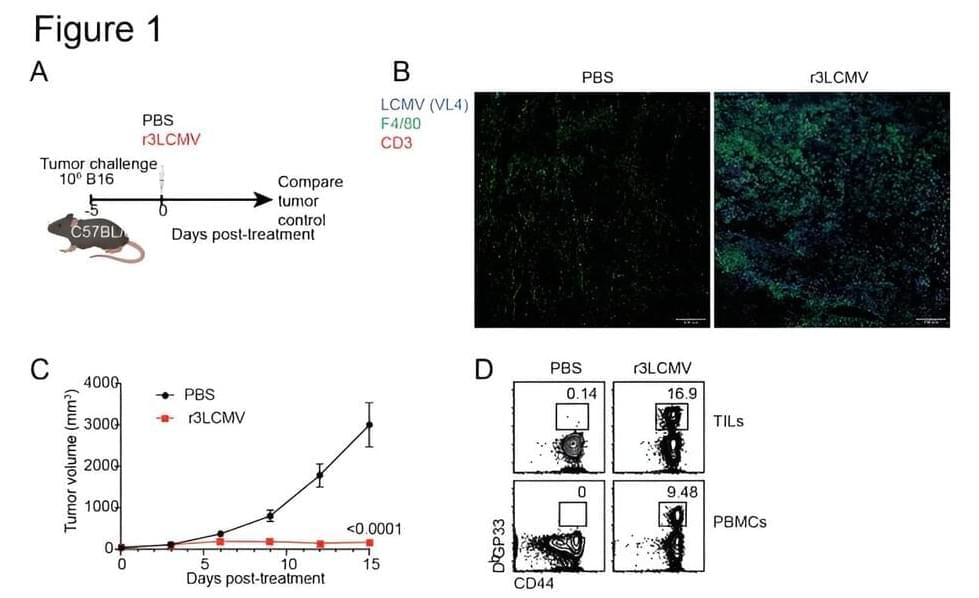

Currently, so-called “oncolytic viruses” such as herpes are used to treat some types of cancer because of their ability to kill cancer cells. But these therapies are not effective against some tumors, and their use poses safety concerns, especially in immunosuppressed patients, underscoring the need for safer alternatives, Penaloza-MacMaster said.

In addition to helping clear the tumors, the therapy also helped prevent future cancer in these mice. Healthy mice that were first treated with the LCMV therapy were more resistant to developing tumors later in life.

This phenomenon might be explained by a poorly understood biological process known as “trained immunity.” Trained immunity occurs when a previous infection enhances the immune system’s ability to respond to different diseases in the future. For example, studies have shown that children who received the tuberculosis (TB) vaccine exhibit improved protection against other microorganisms, not just TB. This differs from the typical vaccine response, such as that of the SARS-CoV-2 vaccine, which primarily protects against that specific virus.

Astronomers are expecting a “new star” to appear in the night sky anytime between now and September in a celestial event that has been years in the making, according to NASA.

“It’s a once-in-a-lifetime event that will create a lot of new astronomers out there, giving young people a cosmic event they can observe for themselves, ask their own questions, and collect their own data,” said Dr. Rebekah Hounsell, an assistant research scientist specializing in nova events at NASA’s Goddard Space Flight Center in Greenbelt, Maryland, in a statement. “It’ll fuel the next generation of scientists.”

The expected brightening event, known as a nova, will occur in the Milky Way’s Corona Borealis, or Northern Crown, constellation, which is located between the Boötes and Hercules constellations.

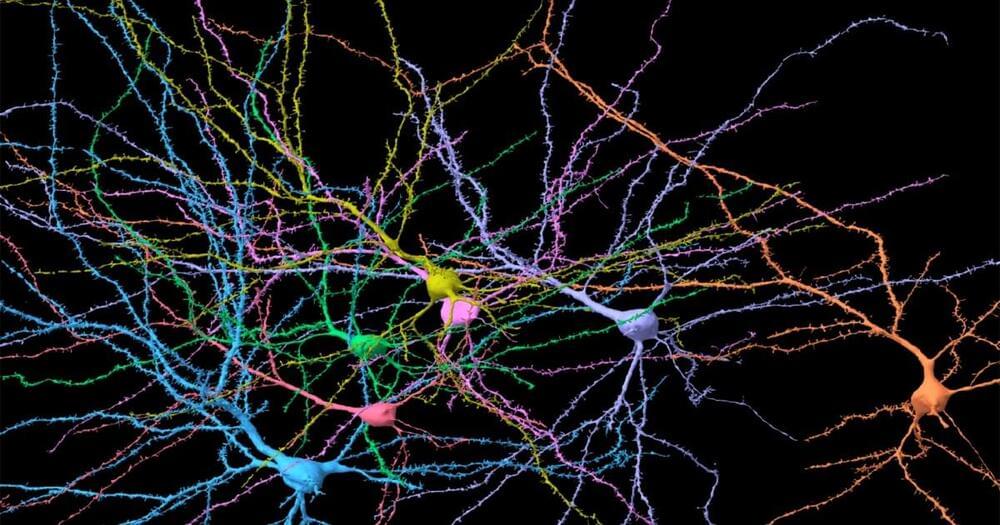

The study offers promising evidence that exercise can counteract age-related changes in the brain, particularly by rejuvenating microglia. The findings contribute to our understanding of how physical activity can benefit cognitive health and open up new avenues for developing interventions to prevent or slow cognitive decline during aging.

“One of the goals is to encourage the elderly to exercise, as we have demonstrated that it is possible to reverse some of the negative aspects of ageing on the brain and thereby improve cognitive performance,” Vukovic said. “The other long-term goal is to find ways and treatments to help elicit the beneficial aspects of exercise on the brain in those individuals who are unable to exercise or are bed-bound.”

The study, “Exercise rejuvenates microglia and reverses T cell accumulation in the aged female mouse brain,” was authored by Solal Chauquet, Emily F. Willis, Laura Grice, Samuel B. R. Harley, Joseph E. Powell, Naomi R. Wray, Quan Nguyen, Marc J. Ruitenberg, Sonia Shah, and Jana Vukovic.

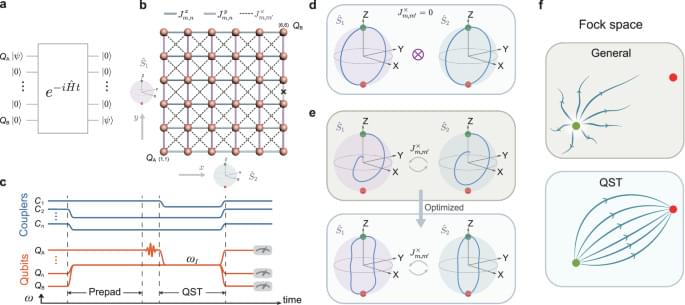

Faithful transfer of quantum states between different parts of a single complex quantum circuit will become more and more important as quantum computing devices grow in size. Here, the authors transfer single-qubit excitations, two-qubit entangled states, and two excitations across a 6 × 6 superconducting qubit device.

What might the orbit of a primordial black hole going in and out of a Sun-like star look like?