Michel Talagrand's innovative work has allowed others to tackle problems involving random processes.

Apple quietly submitted a research paper last week related to its work on a multimodal large language model (MLLM) called MM1. Apple doesn’t explain the meaning behind the name, but it could stand for MultiModal 1.

Being multimodal, MM1 is capable of working with both text and images. Overall, its capabilities and design are similar to the likes of Google’s Gemini or Meta’s open-source LLM Llama 2.

An earlier report from Bloomberg said Apple was interested in incorporating Google’s Gemini AI engine into the iPhone. The two companies are reportedly still in talks to let Apple license Gemini to power some of the generative AI features coming to iOS 18.

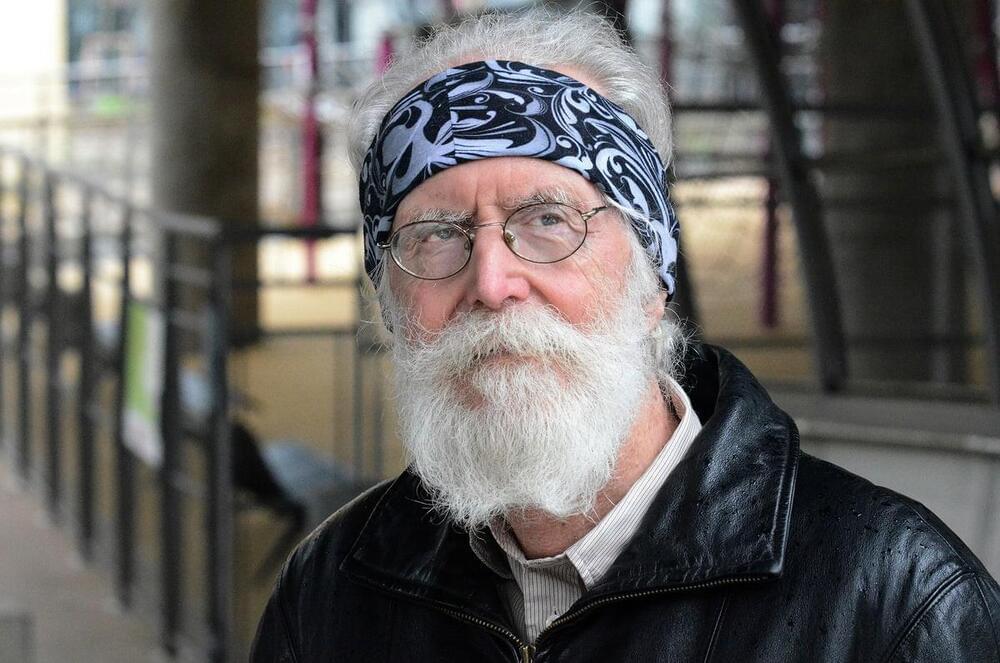

Google just announced that it has been predicting riverine floods, up to seven days in advance in some cases. This isn’t just tech company hyperbole, as the findings were actually published in Nature. Floods are the most common natural disaster throughout the world, so any early warning system is good news.

Floods have been notoriously tricky to predict, as most rivers don’t have streamflow gauges. Google got around this problem by training models on all kinds of relevant data, including historical events, river level readings, elevation and terrain readings and more. After that, the company generated localized maps and ran “hundreds of thousands” of simulations in each location. This combination of techniques allowed the models to accurately predict upcoming floods.

Four of ChargePoint’s EV charger models can now support Tesla’s North American Charging Standard (NACS) connector cables.

The Campbell, California-based EV infrastructure company posted on Twitter that NACS cables for its Express 250 DC fast charger are now available for EV charging site owners to order. The NACS cables can be retrofitted onto existing DC fast charger models because most ChargePoint stations offer modularity.

Tesla drivers can currently use ChargePoint DC fast chargers with readily available adapters, and Tesla also sells a CCS Combo 1 adapter.

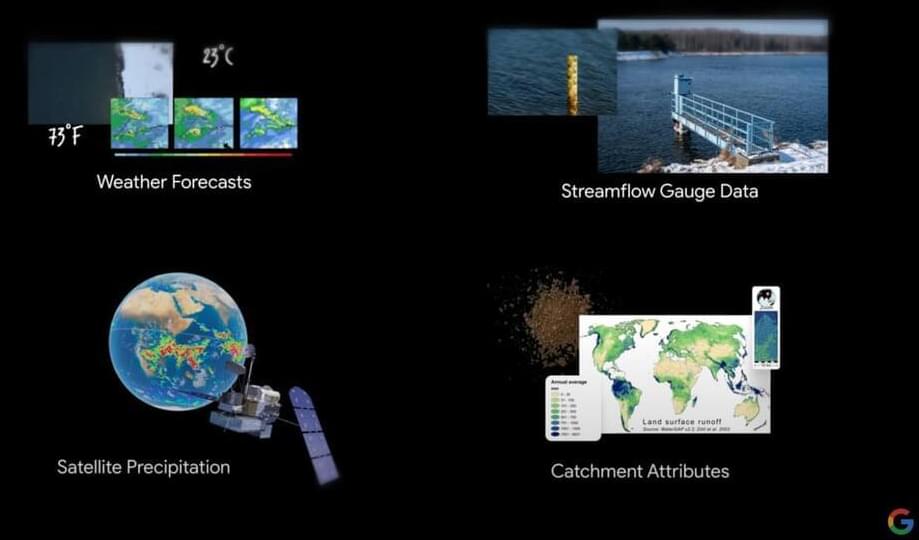

Particles colliding in accelerators produce numerous cascades of secondary particles. The electronics processing the signals avalanching in from the detectors then have a fraction of a second in which to assess whether an event is of sufficient interest to save it for later analysis. In the near future, this demanding task may be carried out using algorithms based on AI, the development of which involves scientists from the Institute of Nuclear Physics of the PAS.

Anyone considering a rooftop solar system will have a lot to like about Texas-based Yotta Energy’s innovation.

Interestingly, it’s what the setup doesn’t include that could be most game-changing for the small- and medium-sized businesses targeted by the company, per a CleanTechnica report.

There’s no extra land area needed for energy storage — and no trenching, no structure, and no foundation, either. Owners won’t need landscaping, fencing, or aesthetics. And there’s no cause for extra building and electrical permits for a big battery.