While roboticists have introduced increasingly advanced systems over the past decades, most existing robots still cannot manipulate objects with the dexterity and sensing ability of humans. This limits their performance in many real-world tasks, ranging from household chores to clearing rubble after natural disasters and carrying out assembly or maintenance work. The gap is especially pronounced in high-temperature working environments such as steel mills and foundries, where elevated temperatures can significantly degrade performance and compromise the precision required for safe operation.

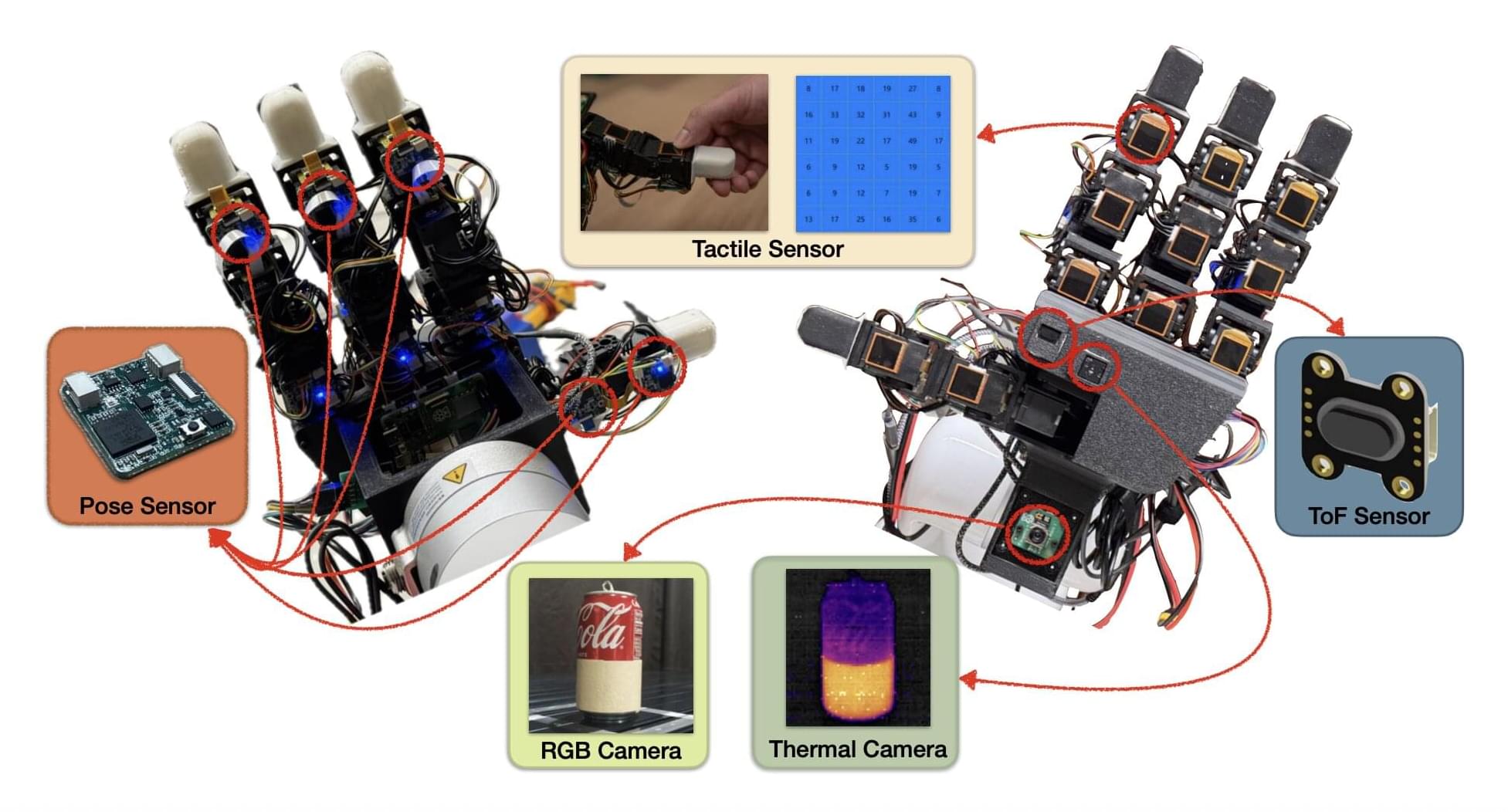

Researchers at the University of Southern California recently developed the MOTIF (Multimodal Observation with Thermal, Inertial, and Force sensors) hand, a new robotic hand that could improve the object manipulation capabilities of humanoid robots. The innovation, presented in a paper posted to the arXiv preprint server, features a combination of sensing devices, including tactile sensors, a depth sensor, a thermal camera, inertial measurement unit (IMU) sensors and a visual sensor.

“Our paper emerged from the need to advance robotic manipulation beyond traditional visual and tactile sensing,” Daniel Seita, Hanyang Zhou, Wenhao Liu, and Haozhe Lou told Tech Xplore. “Current multi-fingered robotic hands often lack the integrated sensing capabilities necessary for complex tasks involving thermal awareness and responsive contact feedback.”