😀 yay :3

Researchers in Sweden coaxed wood to conduct electricity, then used it to make a climate-friendlier building block of electronics.

Akash Systems has signed a non-binding preliminary memorandum of terms with the U.S. Department of Commerce for $18.2 million in direct funding and $50 million in federal and state tax credits through the CHIPS Act. Although this isn’t yet a binding contract that will give the company the promised funds, it’s an important first step in the negotiation process for the Oakland-based startup, which shows that both the company and the U.S. government are gradually moving towards a formal agreement. According to Akash Systems (h/t Axios), it will use the funds to ramp up its operations for producing diamond-cooled semiconductors for AI, data centers, space applications, and defense markets.

Diamond cooling goes beyond thermal paste infused with nano-diamonds. For example, some approaches use synthetic diamond as the chip substrate, exploiting the material’s thermal conductivity to move heat away from the processor more efficiently. So, let’s look closer at Akash’s solution.

I believe that nanotechnology could be embedded into paper, so that a paper computer could give one the same information as a smartphone at a cost of pennies rather than the price of a smartphone. Right now we can 3D print paper phones and other things; the next step would be paper-based nanotechnology built with quantum mechanical engineering.

Irish company Mcor’s unique paper-based 3D printers make some very compelling arguments. For starters, instead of expensive plastics, they build objects out of cut-and-glued sheets of standard 80 GSM office paper. That means printed objects come out at between 10–20 percent of the price of other 3D prints, and with none of the toxic fumes or solvent dips that some other processes require.

Secondly, because it’s standard paper, you can print onto it in full color before it’s cut and assembled, giving you a high quality, high resolution color “skin” all over your final object. Additionally, if the standard hard-glued object texture isn’t good enough, you can dip the final print in solid glue, to make it extra durable and strong enough to be drilled and tapped, or in a flexible outer coating that enables moving parts — if you don’t mind losing a little of your object’s precision shape.

The process is fairly simple. Using a piece of software called SliceIt, a 3D model is cut into paper-thin layers exactly the thickness of an 80 GSM sheet. If your 3D model doesn’t include color information, you can add color and detail to the model through a second piece of software called ColorIt.
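The slicing step described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not how SliceIt itself works: the function names are hypothetical, and the sheet thickness of 0.1 mm is an assumed typical value for 80 GSM office paper rather than a figure from Mcor.

```python
import math

# Assumption: a sheet of 80 GSM office paper is roughly 0.1 mm thick.
SHEET_THICKNESS_MM = 0.1


def layer_count(model_height_mm, sheet_thickness_mm=SHEET_THICKNESS_MM):
    """Number of paper sheets needed to build a model of the given height."""
    if model_height_mm <= 0 or sheet_thickness_mm <= 0:
        raise ValueError("height and thickness must be positive")
    # Round before taking the ceiling so floating-point noise
    # (e.g. 0.3 / 0.1 == 2.9999999999999996) does not change the count.
    return math.ceil(round(model_height_mm / sheet_thickness_mm, 9))


def slice_heights(model_height_mm, sheet_thickness_mm=SHEET_THICKNESS_MM):
    """Bottom z-coordinate (in mm) of each sheet in the stack."""
    n = layer_count(model_height_mm, sheet_thickness_mm)
    return [round(i * sheet_thickness_mm, 6) for i in range(n)]


if __name__ == "__main__":
    print(layer_count(50.0), "sheets for a 50 mm tall model")
```

Under that thickness assumption, a 50 mm tall object needs about 500 sheets, which gives a feel for why the layers are described as paper-thin.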

Year 2021 :3

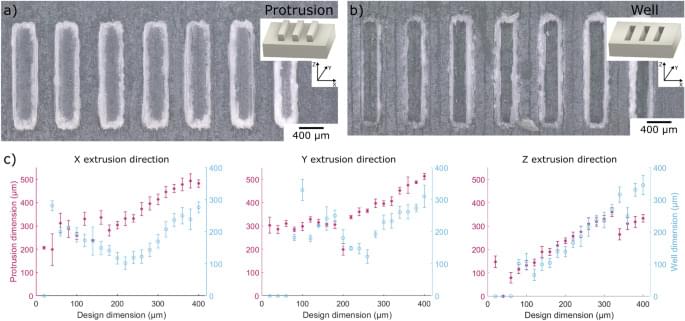

Scientific Reports: 3D printed microfluidic lab-on-a-chip device for fiber-based dual beam optical manipulation. The final 3D printed chip offers three key features: an optimized fiber channel design for precise alignment of optical fibers, an optically clear window to visualize the trapping region, and a sample channel that facilitates hydrodynamic focusing of samples. A square zig–zag structure incorporated in the sample channel increases the number of particles at the trapping site and focuses the cells and particles during experiments when operating the chip at low Reynolds number. To evaluate the performance of the device for optical manipulation, we implemented on-chip, fiber-based optical trapping of different-sized microscopic particles and performed trap stiffness measurements. In addition, optical stretching of MCF-7 cells was successfully accomplished for the purpose of studying the effects of a cytochalasin metabolite, pyrichalasin H, on cell elasticity. We observed distinct changes in the deformability of single cells treated with pyrichalasin H compared to untreated cells. These results demonstrate that 3D printed microfluidic lab-on-a-chip devices offer a cost-effective and customizable platform for applications in optical manipulation.
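To give a sense of what "low Reynolds number" means for a channel of this kind, here is a rough Python sketch using the standard definition Re = ρ·v·D_h/μ with the hydraulic diameter of a rectangular channel, D_h = 2wh/(w+h). All numbers are illustrative assumptions (water at room temperature, a 100 µm square channel, a 1 mm/s flow speed) and are not taken from the paper.

```python
def hydraulic_diameter(width_m, height_m):
    """Hydraulic diameter of a rectangular channel: 2*w*h / (w + h)."""
    return 2.0 * width_m * height_m / (width_m + height_m)


def reynolds_number(density, velocity, length, viscosity):
    """Re = density * velocity * characteristic length / dynamic viscosity."""
    return density * velocity * length / viscosity


# Assumed, illustrative values (not from the paper):
RHO_WATER = 1000.0   # kg/m^3, approximate density of water
MU_WATER = 1.0e-3    # Pa*s, approximate viscosity of water near 20 C
WIDTH = 100e-6       # m, hypothetical channel width
HEIGHT = 100e-6      # m, hypothetical channel height
VELOCITY = 1.0e-3    # m/s, hypothetical mean flow speed

d_h = hydraulic_diameter(WIDTH, HEIGHT)
re = reynolds_number(RHO_WATER, VELOCITY, d_h, MU_WATER)

if __name__ == "__main__":
    print(f"hydraulic diameter: {d_h * 1e6:.0f} um, Re = {re:.3f}")
```

With these assumed values Re comes out at roughly 0.1, far below the range where turbulence appears, so the flow is laminar. That is the regime in which hydrodynamic focusing of a sample stream is practical.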

Science Corporation, a biotech startup launched by a Neuralink cofounder, claims that it’s achieved a breakthrough in brain-computer interface technology that can help patients with severe vision loss.

In preliminary clinical trials, legally blind patients who had lost their central vision received the company’s retina implants, which restored their eyesight and even allowed them to read books and recognize faces, the startup announced last week.

“To my knowledge, this is the first time that restoration of the ability to fluently read has ever been definitively shown in blind patients,” CEO Max Hodak, who was president of Neuralink before founding Science Corp, said in a statement.

Aging is characterized by a gradual decline in function, partly due to accumulated molecular damage. Human skin undergoes both chronological aging and environmental degradation, particularly UV-induced photoaging. Detrimental structural and physiological changes caused by aging include epidermal thinning due to stem cell depletion and dermal atrophy associated with decreased collagen production. Here, we present a comprehensive single-cell atlas of skin aging, analyzing samples from young, middle-aged, and elderly individuals, including both sun-exposed and sun-protected areas. This atlas reveals age-related changes in cellular composition and function across various skin cell types, including epidermal stem cells, fibroblasts, hair follicles, and endothelial cells. Using our atlas, we have identified basal stem cells as a highly variable population across aging, more so than other skin cell populations such as fibroblasts. In basal stem cells, we identified ATF3 as a novel regulator of skin aging. ATF3 is a transcription factor for genes involved in the aging process, with its expression reduced by 20% during aging. Based on this discovery, we have developed an innovative mRNA-based treatment to mitigate the effects of skin aging. Cell senescence decreased by 25% in skin cells treated with ATF3 mRNA, and we observed an increase of over 20% in proliferation in treated basal stem cells. Importantly, we also found crosstalk between keratinocytes and fibroblasts to be a critical component of therapeutic interventions, with ATF3 rescue of basal cells significantly enhancing fibroblast collagen production by approximately 200%. We conclude that ATF3-targeted mRNA treatment effectively reverses the effects of skin aging by modulating specific cellular mechanisms, offering a novel, targeted approach to human skin rejuvenation.

The authors have declared no competing interest.