Feb 21, 2023

The fungus in the HBO series The Last of Us turns humans into zombies. Should you be afraid?

Posted by Jose Ruben Rodriguez Fuentes in categories: biological, neuroscience

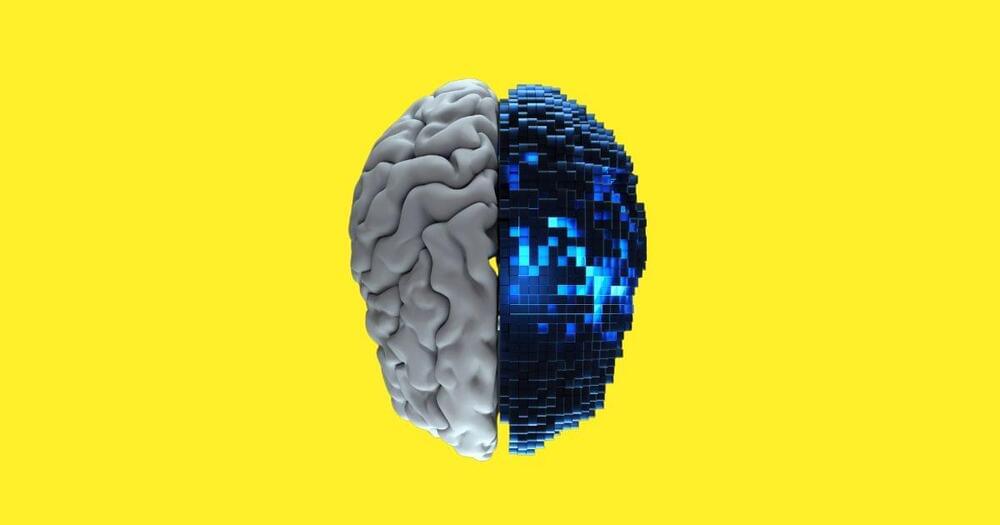

The fungal pathogen that wipes out much of humanity in HBO’s latest series The Last of Us is real, but could the cordyceps fungus actually turn humans into zombies one day?

“It’s highly unlikely because these are organisms that have become really well adapted to infecting ants,” Rebecca Shapiro, an assistant professor in the University of Guelph’s department of molecular and cellular biology, told Craig Norris, host of CBC Kitchener-Waterloo’s The Morning Edition.

In the television series, the fungus infects human brains and turns people into zombies. In real life, it can only infect ants and other insects in this manner.