Time loops have long been the stuff of science fiction. Now, using the rules of quantum mechanics, we have a way to effectively transport a particle back in time – here’s how.

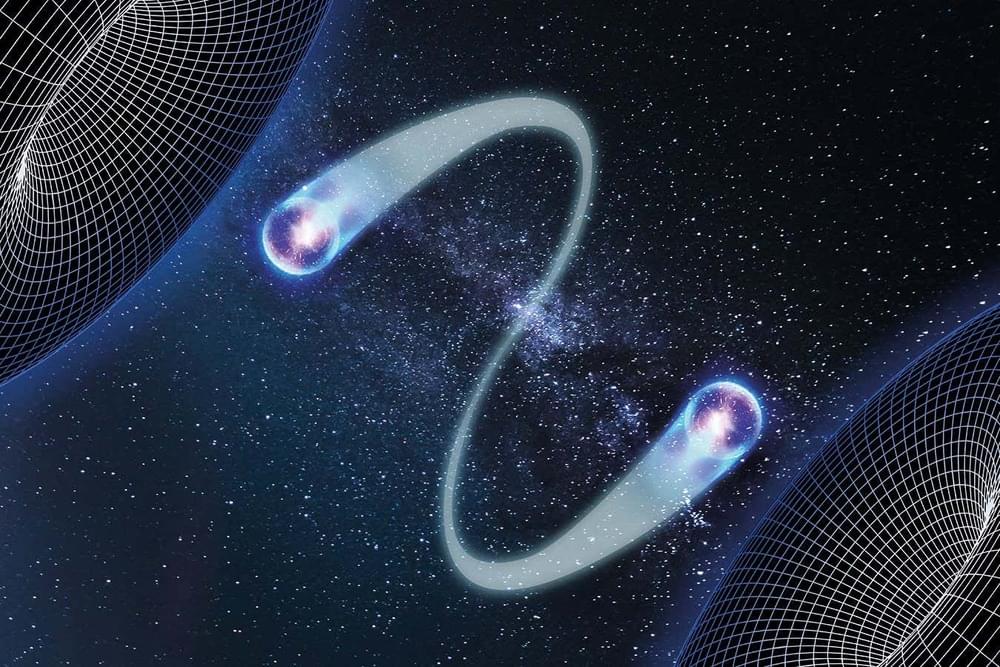

Dr. Deepan Balakrishnan, the first author, said, “Our work shows the theoretical framework for single-shot 3D imaging with TEMs. We are developing a generalized method using physics-based machine learning models that learn material priors and provide 3D relief for any 2D projection.”

The team also envisions further generalizing the formulation of pop-out metrology beyond TEMs to any coherent imaging system for optically thick samples (e.g., X-rays, electrons, or visible light photons).

Prof Loh added, “Like human vision, inferring 3D information from a 2D image requires context. Pop-out is similar, but the context comes from the material we focus on and our understanding of how photons and electrons interact with them.”

With the rise of AI, we’re abstracting complexity by embracing technologies that resonate with human intuition. Take ChatGPT, for instance. We can simply articulate our goals in plain English, and it generates code for provisioning the infrastructure accordingly.

Another approach is using visualization. For example, with Brainboard, you can draw your cloud infrastructure, and the necessary deployment and management code is automatically generated.

These examples illustrate the next-generation software and mindset. The shift is happening now, and the next set of tools will be adapted and optimized for humans.

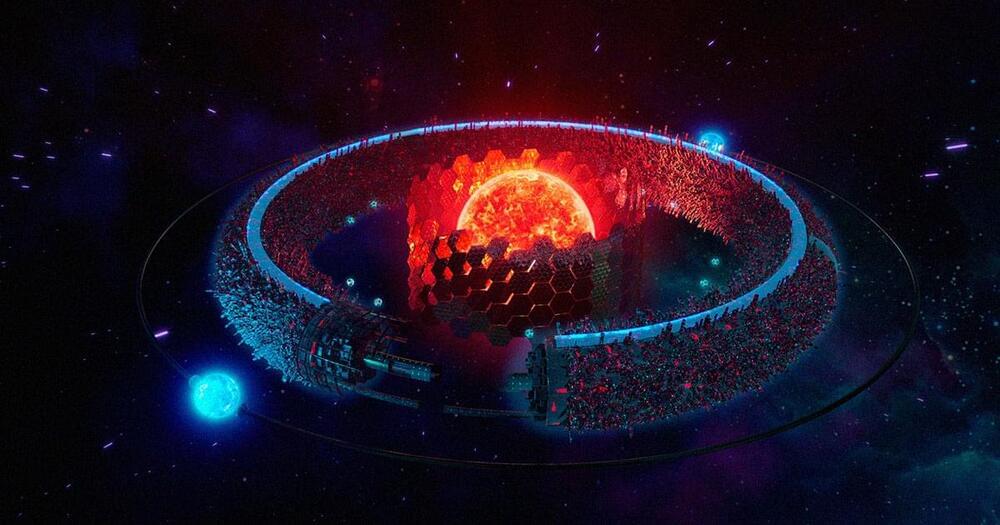

We’d need an astronomical amount of resources to construct a Dyson sphere, a giant theoretical shell that would harvest all of a given star’s energy, around the Sun.

In fact, as science journalist Jaime Green explores in her new book “The Possibility of Life,” we’d have to go as far as to demolish a Jupiter-sized planet to build such a megastructure, a concept first devised by physicist Freeman Dyson in 1960.

“If you wanted enough material to build such a thing, you’d essentially have to disassemble a planet, and not just a small one — more like Jupiter,” Green writes in her book.

Water is usually something you’d want to keep away from electronic circuits, but engineers in Germany have now developed a new concept for water-based switches that are much faster than current semiconductor materials.

Transistors are a fundamental component of electronic systems, and in a basic sense they process data by switching between conductive and non-conductive states – zeroes and ones – as the semiconductor materials in them encounter electrical currents. The speed of this switching (along with the number of transistors in a chip) is a primary factor in how fast a computer system can be.

Now, researchers at Ruhr University Bochum have developed a new type of circuit that can switch much faster than existing semiconductor materials. The key ingredient is, surprisingly, water, with iodide ions dissolved into it to make it salty. A custom-made nozzle fans this water out into a flattened jet only a few microns thick.

Year 2023

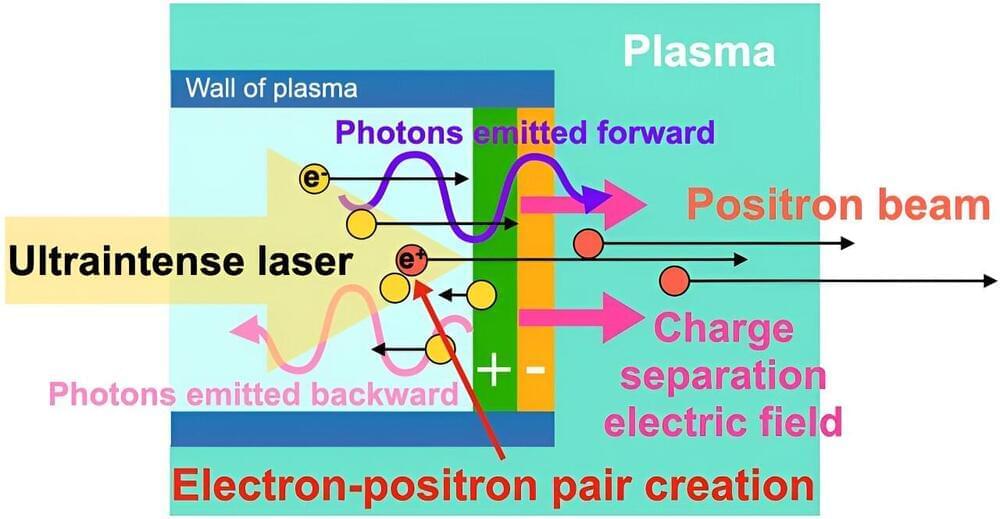

A team led by researchers at Osaka University and University of California, San Diego has conducted simulations of creating matter solely from collisions of light particles. Their method circumvents what would otherwise be the intensity limitations of modern lasers and can be readily implemented by using presently available technology. This work might help experimentally test long-standing theories such as the Standard Model of particle physics, and possibly reveal a need to revise them.

One of the most striking predictions of quantum physics is that matter can be generated solely from light (i.e., photons), and in fact, the astronomical bodies known as pulsars achieve this feat. Directly generating matter in this manner has not been achieved in a laboratory, but it would enable further testing of the theories of basic quantum physics and the fundamental composition of the universe.

In a study published in Physical Review Letters, a team led by researchers at Osaka University has simulated conditions that enable photon–photon collisions, solely by using lasers. The simplicity of the setup and ease of implementation at presently available laser intensities make it a promising candidate for near-future experimental implementation.