A radical research program in deep design.

Scientists have accidentally discovered a particle that has mass when it’s traveling in one direction, but no mass while traveling in a different direction. Known as semi-Dirac fermions, particles with this bizarre behavior were first predicted 16 years ago.

The discovery was made in ZrSiS, a semi-metal made up of zirconium, silicon and sulfur, while the researchers were studying the properties of quasiparticles, which emerge from the collective behavior of many particles within a solid material.

“This was totally unexpected,” said Yinming Shao, lead author on the study. “We weren’t even looking for a semi-Dirac fermion when we started working with this material, but we were seeing signatures we didn’t understand – and it turns out we had made the first observation of these wild quasiparticles that sometimes move like they have mass and sometimes move like they have none.”

Real life modern Frankenstein.

World-leading scientists have called for a halt on research to create “mirror life” microbes amid concerns that the synthetic organisms would present an “unprecedented risk” to life on Earth.

The international group of Nobel laureates and other experts warn that mirror bacteria, constructed from mirror images of molecules found in nature, could become established in the environment and slip past the immune defences of natural organisms, putting humans, animals and plants at risk of lethal infections.

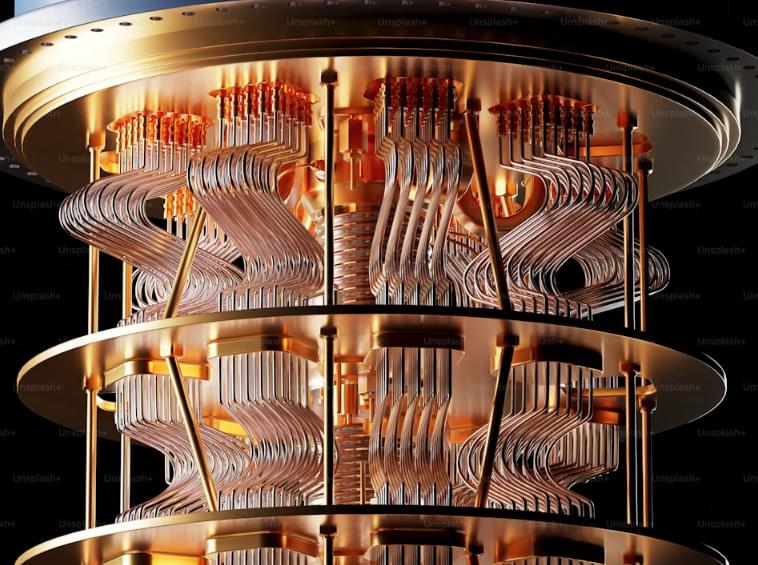

China has reached a new milestone in quantum computing with the development of Tianyan-504, a powerful 504-qubit quantum computer.

The Tianyan-504 quantum computer was developed through collaboration between the China Telecom Quantum Group (CTQG), the Center for Excellence in Quantum Information and Quantum Physics under the Chinese Academy of Sciences, and QuantumCTek, a quantum technology company based in Anhui Province.

China has made a significant leap in quantum computing with the unveiling of the Tianyan-504, a record-breaking quantum computer.

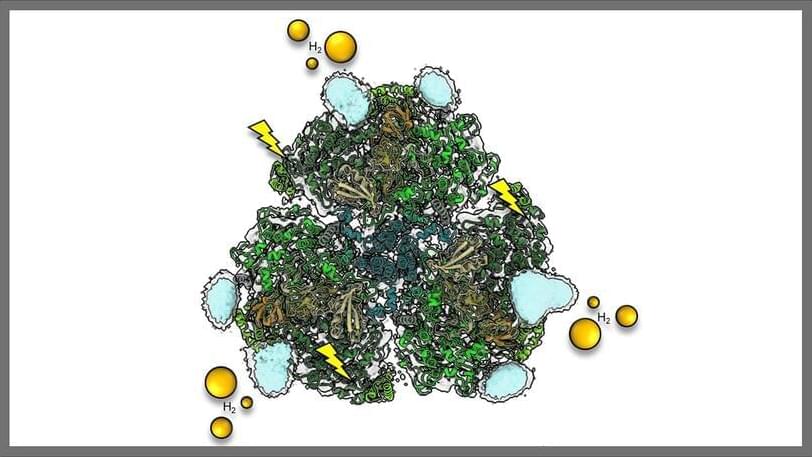

Photosynthesis is one of the most efficient natural processes for converting light energy from the sun into chemical energy vital for life on earth. Proteins called photosystems are critical to this process and are responsible for the conversion of light energy to chemical energy.

Combining one kind of these proteins, called photosystem I (PSI), with platinum nanoparticles, microscopic particles that can perform a chemical reaction that produces hydrogen — a valuable clean energy source — creates a biohybrid catalyst. That is, the light absorbed by PSI drives hydrogen production by the platinum nanoparticle.

In a recent breakthrough, researchers at the U.S. Department of Energy’s (DOE) Argonne National Laboratory and Yale University have determined the structure of the PSI biohybrid solar fuel catalyst. Building on more than 13 years of research pioneered at Argonne, the team reports the first high-resolution view of a biohybrid structure, obtained with an electron microscopy method called cryo-EM. With structural information in hand, researchers can now work toward biohybrid solar fuel systems with improved performance, which would provide a sustainable alternative to traditional energy sources.

Argonne and Yale researchers shed light on the structure of a photosynthetic hybrid for the first time, enabling advancements in clean energy production.

What can solar eclipses teach us about the Sun and how it interacts with the Earth’s atmosphere? A recent press briefing at the American Geophysical Union 2024 Fall Meeting addressed this question, as a team of scientists from the Citizen CATE 2024 (Continental-America Telescopic Eclipse) project reported findings obtained during the April 8, 2024, total solar eclipse over North America.

“Scientists and tens of thousands of volunteer observers were stationed throughout the Moon’s shadow,” said Dr. Kelly Korreck, who is the NASA Program Manager for the 2023 and 2024 Solar Eclipses. “Their efforts were a crucial part of the Heliophysics Big Year – helping us to learn more about the Sun and how it affects Earth’s atmosphere when our star’s light temporarily disappears from view.”

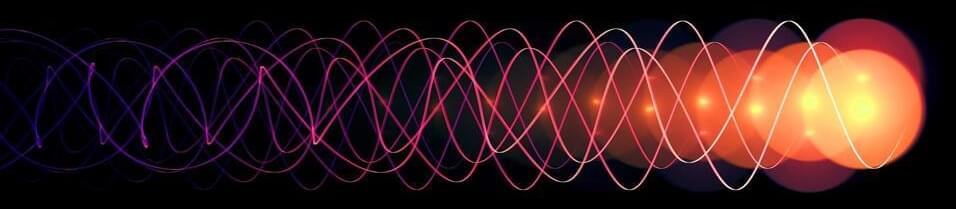

Bringing together professional and citizen scientists working with images, spectroscopy, and ham radios, the large Citizen CATE 2024 team made groundbreaking observations of the 2024 solar eclipse and determined how radio signals were affected during it. In the end, the team of more than 800 individuals discovered that eclipses produce atmospheric gravity waves, or ripples within the Earth’s atmosphere. Additionally, the ham radio operators, numbering more than 6,350, found that radio communications improved both within and outside the eclipse’s path of totality at frequencies between 1 and 7 megahertz, whereas communications became worse at frequencies above 10 megahertz.