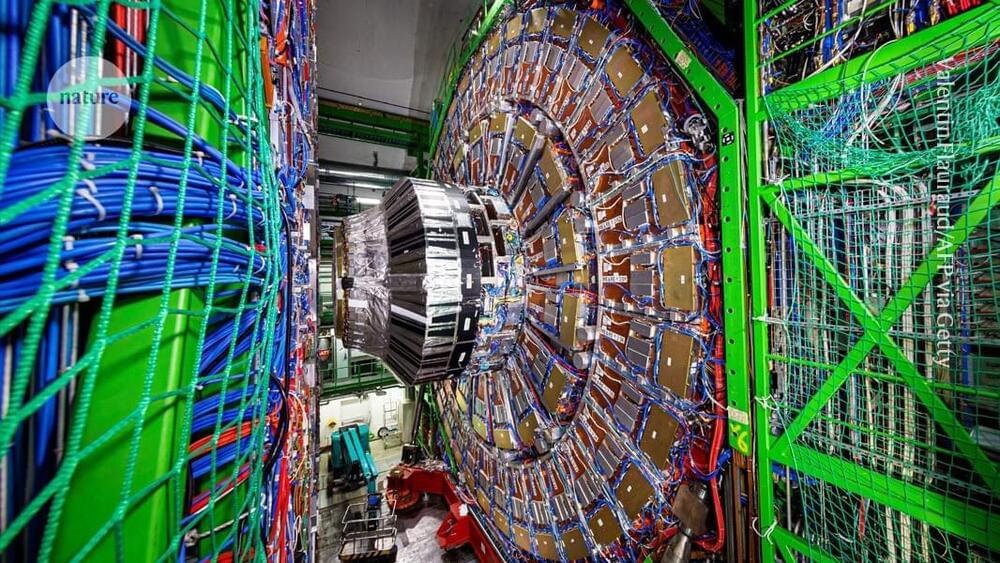

The concept of the Omega Singularity encapsulates the ultimate convergence of universal intelligence, in which reality, rooted in information and consciousness, culminates in a unified hypermind. The concept weaves together the Holographic Principle, which envisions the universe as a projection from the Omega Singularity, and the fractal multiverse, an infinite, self-organizing structure. The work highlights a “solo mission of self-discovery,” in which individuals co-create subjective realities, leading to the fusion of human and artificial consciousness into a transcendent cosmic entity. Taking a computational, post-materialist perspective, it redefines the physical world as a self-simulation within a conscious, universal system.

#OmegaSingularity #UniversalMind #FractalMultiverse #CyberneticTheoryofMind #EvolutionaryCybernetics #PhilosophyofMind #QuantumCosmology #ComputationalPhysics #futurism #posthumanism #cybernetics #cosmology #physics #philosophy #theosophy #consciousness #ontology #eschatology

Where does reality come from? What is the fractal multiverse? What is the Omega Singularity? Is our universe a…