Industry and academia in Japan and the United States are collaborating on research to pioneer quantum-centric supercomputing.

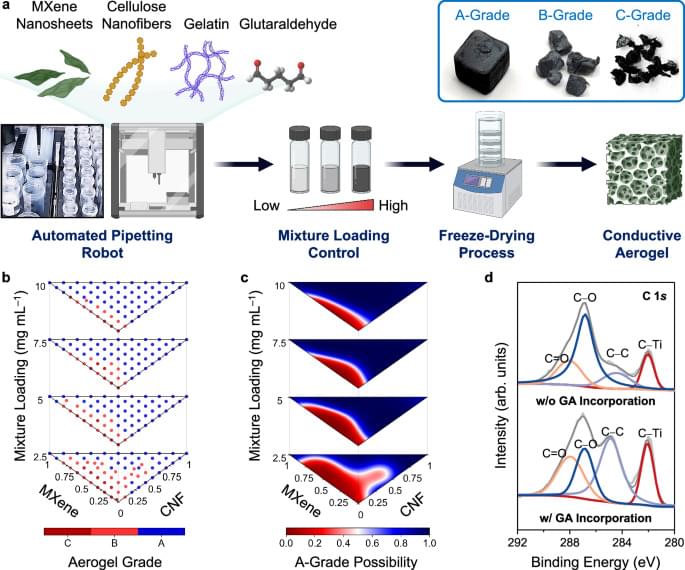

Conductive aerogels have attracted significant research interest due to their ultralight characteristics, adjustable mechanical properties, and outstanding electrical performance1,2,3,4,5,6. These attributes make them desirable for applications ranging from pressure sensors7,8,9,10 to electromagnetic interference shielding11,12,13, thermal insulation14,15,16, and wearable heaters17,18,19. Conventional fabrication of conductive aerogels involves preparing aqueous mixtures of various building blocks, followed by freeze-drying20,21,22,23. Key building blocks include conductive nanomaterials such as carbon nanotubes, graphene, and Ti₃C₂Tₓ MXene nanosheets24,25,26,27,28,29,30; functional fillers such as cellulose nanofibers (CNFs), silk nanofibrils, and chitosan29,31,32,33,34; polymeric binders such as gelatin25,26; and crosslinking agents such as glutaraldehyde (GA) and metal ions30,35,36,37. By adjusting the proportions of these building blocks, one can fine-tune the end properties of conductive aerogels, such as electrical conductivity and compression resilience38,39,40,41. However, the correlations among composition, structure, and properties in conductive aerogels are complex and remain largely unexplored42,43,44,45,46,47. Consequently, producing a conductive aerogel with user-designated mechanical and electrical properties often requires labor-intensive, iterative optimization experiments to identify the optimal set of fabrication parameters. A predictive model that automatically recommends the ideal parameter set for a conductive aerogel with programmable properties would greatly expedite this development process48.

Machine learning (ML) is a subset of artificial intelligence (AI) that builds models for predictions or recommendations49,50,51. AI/ML methodologies serve as an effective toolbox for unraveling intricate correlations within parameter spaces with multiple degrees of freedom (DOFs)50,52,53. AI/ML adoption in materials science research has surged, particularly in fields where simulation programs and high-throughput analytical tools generate vast amounts of data in shared, open databases54, including gene editing55,56, battery electrolyte optimization57,58, and catalyst discovery59,60. However, building a prediction model for conductive aerogels faces significant challenges, primarily due to the lack of high-quality data points. One major root cause is the absence of standardized fabrication protocols: different research laboratories adopt different building blocks35,40,46. Additionally, recent studies on conductive aerogels focus on optimizing a single property, such as electrical conductivity or compressive strength, and often neglect the complex correlations between these attributes37,42,61,62,63,64. Moreover, because fabricating conductive aerogels is labor-intensive and time-consuming, training data accumulate slowly, which makes it difficult to construct an accurate model capable of predicting multiple properties.

Herein, we developed an integrated platform that combines collaborative robots with AI/ML predictions to accelerate the design of conductive aerogels with programmable mechanical and electrical properties (see Supplementary Fig. 1 for the robot–human teaming workflow). Given specific property requirements, the robot/ML-integrated platform automatically suggested a tailored parameter set for fabricating conductive aerogels, without the need for iterative optimization experiments. To produce various conductive aerogels, four building blocks were selected: MXene nanosheets, CNFs, gelatin, and GA crosslinker (see Supplementary Note 1 and Supplementary Fig. 2 for the selection rationale and model expansion strategy). Initially, an automated pipetting robot (i.e., an OT-2 robot) was operated to prepare 264 mixtures with varying MXene/CNF/gelatin ratios and mixture loadings (i.e.
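The excerpt does not describe the model the platform actually uses, so the following is only a toy illustration of the general "fit a surrogate, then invert it" idea behind recommending a parameter set from target properties. It uses a linear least-squares surrogate (a deliberately simple stand-in, not the authors' ML method), ignores the GA crosslinker, and enumerates MXene/CNF/gelatin fractions on a grid. All function names, the grid step, the loading values, the target numbers, and the synthetic training data are hypothetical.

```python
import numpy as np

def fit_linear_surrogate(X, Y):
    """Least-squares fit of Y ≈ [X, 1] @ W (one column of W per property)."""
    A = np.hstack([X, np.ones((X.shape[0], 1))])
    # lstsq returns a minimum-norm solution, so the exact collinearity
    # (the three fractions always sum to 1) does not break the fit.
    W, *_ = np.linalg.lstsq(A, Y, rcond=None)
    return W

def predict(W, X):
    A = np.hstack([X, np.ones((X.shape[0], 1))])
    return A @ W

def candidate_grid(step=0.1, loadings=(1.0, 2.0, 3.0)):
    """Enumerate (MXene, CNF, gelatin) fractions summing to 1, crossed with loadings."""
    n = round(1 / step)
    rows = []
    for i in range(n + 1):
        for j in range(n + 1 - i):
            k = n - i - j
            for load in loadings:
                rows.append((i / n, j / n, k / n, load))
    return np.array(rows)

def recommend(W, target, scale, grid):
    """Return the candidate whose predicted properties are closest to the target."""
    pred = predict(W, grid)
    # Dividing by `scale` puts properties with different units on a comparable footing.
    err = np.linalg.norm((pred - target) / scale, axis=1)
    best = int(np.argmin(err))
    return grid[best], pred[best]

# --- Synthetic demo: a made-up linear "ground truth", NOT real aerogel data ---
rng = np.random.default_rng(0)
fractions = rng.dirichlet([1.0, 1.0, 1.0], size=50)
loading = rng.uniform(1.0, 3.0, size=50)
X_train = np.column_stack([fractions, loading])
prop_1 = 10 * fractions[:, 0] + 0.5 * loading          # hypothetical stand-in for conductivity
prop_2 = 80 * fractions[:, 1] + 20 * fractions[:, 2]   # hypothetical stand-in for resilience
Y_train = np.column_stack([prop_1, prop_2])

W = fit_linear_surrogate(X_train, Y_train)
best, pred = recommend(W, np.array([4.0, 44.0]), np.array([1.0, 10.0]), candidate_grid())
```

Because the synthetic ground truth is exactly linear, the recommended row is the grid point (0.3, 0.5, 0.2, 2.0) that reproduces the target. With real, noisy, nonlinear data one would swap in a more flexible regressor and a smarter search, but the two-stage structure stays the same.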

In the general formulation of quantum information, quantum states are represented by a special class of matrices called density matrices. This lesson describes the basics of how density matrices work and explains how they relate to quantum state vectors. It also introduces the Bloch sphere, which provides a useful geometric representation of qubit states, and discusses different types of correlations that can be described using density matrices.
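To make these definitions concrete, here is a minimal NumPy sketch (not taken from the lesson or from Qiskit; the helper names `density_matrix`, `mix`, `is_density_matrix`, and `bloch_vector` are hypothetical). It builds ρ = |ψ⟩⟨ψ| from a state vector, forms a probabilistic mixture Σₖ pₖ|ψₖ⟩⟨ψₖ|, checks the defining conditions (unit trace and positive semidefiniteness), and reads off Bloch-sphere coordinates from the decomposition ρ = (I + xX + yY + zZ)/2, using x = Tr(ρX) and so on.

```python
import numpy as np

def density_matrix(psi):
    """Return rho = |psi><psi| for a normalized state vector psi."""
    psi = np.asarray(psi, dtype=complex).reshape(-1, 1)
    return psi @ psi.conj().T

def mix(probs, states):
    """Density matrix of a probabilistic selection: sum_k p_k |psi_k><psi_k|."""
    return sum(p * density_matrix(s) for p, s in zip(probs, states))

def is_density_matrix(rho, tol=1e-10):
    """Check unit trace, Hermiticity, and nonnegative eigenvalues."""
    hermitian = np.allclose(rho, rho.conj().T, atol=tol)
    eigs = np.linalg.eigvalsh(rho)
    return hermitian and abs(np.trace(rho) - 1) < tol and eigs.min() > -tol

PAULI = {
    "X": np.array([[0, 1], [1, 0]], dtype=complex),
    "Y": np.array([[0, -1j], [1j, 0]], dtype=complex),
    "Z": np.array([[1, 0], [0, -1]], dtype=complex),
}

def bloch_vector(rho):
    """Bloch coordinates (x, y, z) of a qubit state, via x = Tr(rho X), etc."""
    return np.real([np.trace(rho @ PAULI[k]) for k in "XYZ"])

rho_plus = density_matrix([1 / np.sqrt(2), 1 / np.sqrt(2)])   # pure state |+>
rho_plus_i = density_matrix([1 / np.sqrt(2), 1j / np.sqrt(2)])  # pure state |+i>
rho_mixed = mix([0.5, 0.5], [[1, 0], [0, 1]])                 # completely mixed state I/2
```

Pure states such as |+⟩ and |+i⟩ land on the surface of the Bloch sphere (Bloch vectors (1, 0, 0) and (0, 1, 0)), while the completely mixed state I/2 sits at the center of the Bloch ball.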

0:00 — Introduction.

1:46 — Overview.

2:55 — Motivation.

4:40 — Definition of density matrices.

9:55 — Examples.

12:58 — Interpretation.

15:37 — Connection to state vectors.

20:13 — Probabilistic selections.

25:23 — Completely mixed state.

28:41 — Probabilistic states.

32:03 — Spectral theorem.

37:36 — Bloch sphere (introduction)

38:36 — Qubit quantum state vectors.

41:30 — Pure states of a qubit.

43:52 — Bloch sphere.

47:38 — Bloch sphere examples.

51:36 — Bloch ball.

55:40 — Multiple systems.

56:46 — Independence and correlation.

1:00:55 — Reduced states for an e-bit.

1:04:16 — Reduced states in general.

1:08:53 — The partial trace.

1:12:23 — Conclusion.
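The chapters on reduced states and the partial trace can be illustrated with a short sketch, again an illustrative NumPy example rather than the lesson's own code. It assumes subsystem A is the left tensor factor in `np.kron` (other tools may order qubits differently), and the helpers `partial_trace_B` and `partial_trace_A` are hypothetical names. For an e-bit, each reduced state is the completely mixed state, whereas a product state leaves each subsystem in a pure state.

```python
import numpy as np

def partial_trace_B(rho, dA, dB):
    """Trace out subsystem B from a density matrix on A ⊗ B (A = left kron factor)."""
    # Reshape so indices read rho[a, b, a', b'], then sum over b = b'.
    return np.trace(rho.reshape(dA, dB, dA, dB), axis1=1, axis2=3)

def partial_trace_A(rho, dA, dB):
    """Trace out subsystem A, leaving the reduced state of B."""
    return np.trace(rho.reshape(dA, dB, dA, dB), axis1=0, axis2=2)

# e-bit |phi+> = (|00> + |11>)/sqrt(2)
phi_plus = np.array([1, 0, 0, 1], dtype=complex) / np.sqrt(2)
rho_ebit = np.outer(phi_plus, phi_plus.conj())

# Product state |0> ⊗ |+>: no correlation between A and B.
ket0 = np.array([1, 0], dtype=complex)
ket_plus = np.array([1, 1], dtype=complex) / np.sqrt(2)
prod = np.kron(ket0, ket_plus)
rho_product = np.outer(prod, prod.conj())

rho_A_ebit = partial_trace_B(rho_ebit, 2, 2)      # completely mixed state I/2
rho_A_prod = partial_trace_B(rho_product, 2, 2)   # pure state |0><0|
rho_B_prod = partial_trace_A(rho_product, 2, 2)   # pure state |+><+|
```

The global e-bit state is pure, yet its reduced states have purity Tr(ρ²) = 1/2, which is the signature of the correlation between the two qubits.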

Find the written content for this lesson on IBM Quantum Learning: https://learning.quantum.ibm.com/cour…


Brain-inspired spiking neural networks have shown their capability for effective learning; however, current models may not account for the realistic heterogeneities present in the brain. The authors propose a neuron model with temporal dendritic heterogeneity to improve neuromorphic computing applications.
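As a rough illustration of what temporal dendritic heterogeneity can mean, the sketch below is a generic discrete-time leaky integrate-and-fire (LIF) neuron whose dendritic branches each low-pass filter their input with their own time constant. It is a hypothetical simplification for intuition only, not the authors' proposed model; the function name, parameter values, hard-reset rule, and update equations are all assumptions.

```python
import numpy as np

def simulate_dendritic_lif(inputs, tau_d, tau_m=20.0, v_th=1.0, dt=1.0):
    """Hypothetical LIF neuron with per-branch dendritic time constants.

    inputs: array (T, n_branches), input current per branch per time step.
    tau_d:  array (n_branches,), dendritic time constants (the heterogeneity).
    Returns (spikes, v_trace).
    """
    inputs = np.asarray(inputs, dtype=float)
    tau_d = np.asarray(tau_d, dtype=float)
    T, n = inputs.shape
    decay_d = np.exp(-dt / tau_d)   # per-branch decay factor
    decay_m = np.exp(-dt / tau_m)   # somatic membrane decay factor
    d = np.zeros(n)
    v = 0.0
    spikes = np.zeros(T, dtype=bool)
    v_trace = np.zeros(T)
    for t in range(T):
        d = decay_d * d + inputs[t]   # each branch filters its input with its own tau
        v = decay_m * v + d.sum()     # soma integrates the summed dendritic currents
        if v >= v_th:
            spikes[t] = True
            v = 0.0                   # hard reset after a spike
        v_trace[t] = v
    return spikes, v_trace

# Same brief pulse delivered to a fast branch and to a slow branch.
T = 50
pulse = np.zeros((T, 1))
pulse[0, 0] = 0.3
spikes_fast, _ = simulate_dendritic_lif(pulse, tau_d=[1.0])
spikes_slow, _ = simulate_dendritic_lif(pulse, tau_d=[100.0])
```

With the fast branch, the filtered current dies out before the soma reaches threshold; the slow branch keeps driving the soma after the pulse ends and makes the neuron fire. Combining branches with different time constants in one neuron gives it memory on several timescales at once.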

New “metaholograms” could transform AR/VR technologies by enabling crosstalk-free, high-fidelity image projection with vastly increased information capacity.

Researchers have developed a new type of hologram, known as a "metahologram," capable of projecting multiple high-fidelity images free of crosstalk. This innovation opens doors to advanced applications in virtual and augmented reality (AR/VR) displays, data storage, and image encryption.

Metaholograms offer several advantages over traditional holograms, including broader operational bandwidth, higher imaging resolution, wider viewing angle, and more compact size. However, a major challenge for metaholograms has been their limited information capacity, which allows them to project only a few independent images. Existing methods typically provide only a small number of display channels and often suffer from inter-channel crosstalk during image projection.


Aging is a natural part of life, and yet one that most of us hope to delay. At a certain point, you start feeling the effects of age and want the whole process to stop rushing forward. But can aging really be slowed down?

A growing body of work paints a fuller picture of the search for the elixir of youth, looking in particular at changes happening at the molecular and cellular levels. Interesting Engineering spoke with Dr. Miglė Tomkuvienė, a biochemist at Vilnius University, whose new article addresses this very question — is it possible to delay old age?

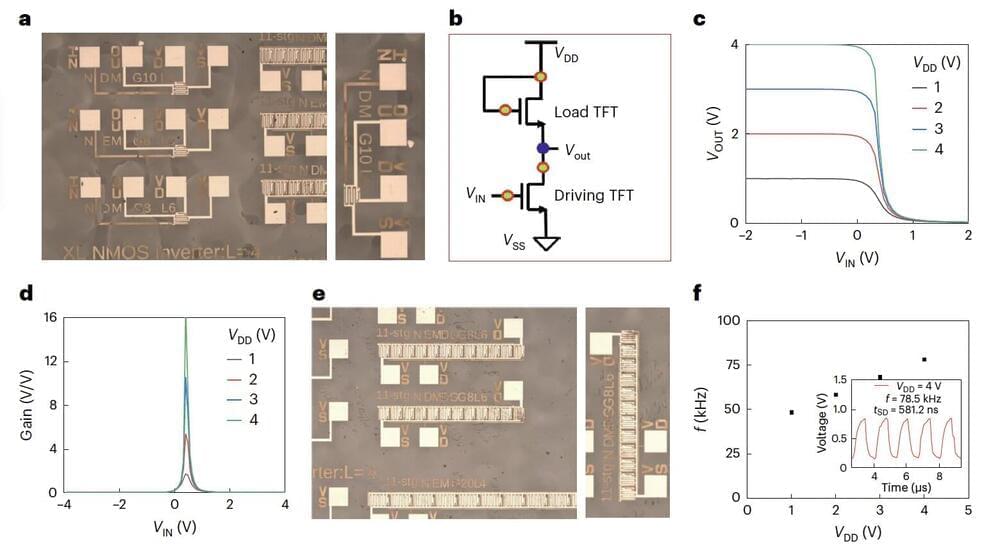

Metal halide perovskites, a class of crystalline materials with remarkable optoelectronic properties, have proven to be promising candidates for the development of cost-effective thin-film transistors. Recent studies have successfully used these materials, particularly tin (Sn) halide perovskites, to fabricate p-type transistors with field-effect hole mobilities (μh) of over 70 cm² V⁻¹ s⁻¹.
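For orientation on how a mobility in cm² V⁻¹ s⁻¹ is typically obtained: in the saturation regime, field-effect mobility is commonly extracted from the transfer curve with the gradual-channel (square-law) expression below, where L and W are the channel length and width, C_i is the gate-dielectric capacitance per unit area, I_D is the drain current, and V_G is the gate voltage. This is a standard textbook relation; the excerpt does not state which extraction method the cited studies used.

$$
\mu_{\mathrm{sat}} = \frac{2L}{W C_i}\left(\frac{\partial \sqrt{|I_D|}}{\partial V_G}\right)^{2}
$$

With L and W in cm, C_i in F cm⁻², I_D in A, and V_G in V, the units reduce to cm² · A/(F V²) = cm² V⁻¹ s⁻¹, since 1 F = 1 A s V⁻¹.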

1I/2017 U1 (‘Oumuamua) was discovered in October 2017; shortly after, it was determined to be the first object ever seen inside the solar system that had come from beyond it. But by the time its origins had been discerned, the interstellar interloper had already rounded the Sun and was speeding away at some 85,700 mph (138,000 km/h). Just an estimated 1,300 feet (400 meters) across, it faded from view of even the most powerful telescopes within weeks.

The only way to gather more data and uncover its true nature would be to send a spacecraft to study it up close. But uncertainties in ‘Oumuamua’s exact trajectory, the difficulty of detecting its ever-dimming light, and its rapid retreat make the idea of designing, building, and launching a mission in time to catch up to it seem utterly impossible.

NVIDIA today announced that the world’s 28 million developers can now download NVIDIA NIM™ — inference microservices that provide models as optimized containers — to deploy on clouds, data centers or workstations, giving them the ability to easily build generative AI applications for copilots, chatbots and more, in minutes rather than weeks.