Max Planck researchers have developed XLuminA, an AI framework that autonomously designs microscopy experiments and optimizes them 10,000 times faster.

Acting as an optics simulator, XLuminA explores all possible optical configurations.

HighlyCitedPapers.

📝 — Schulze, et al.

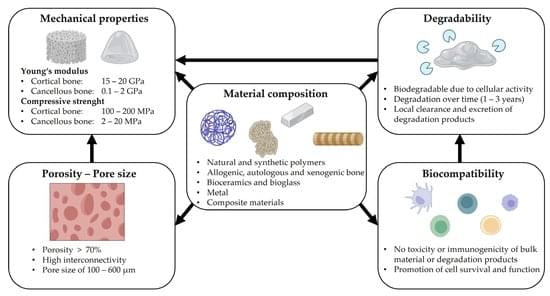

The present work reviews the strategies and technical approaches used to overcome the multilayered problems associated with large bone defect healing in long bones, with emphasis on research rooted in scaffold-guided tissue regeneration.

Full text is available 👇

Bone generally displays a high intrinsic capacity to regenerate. Nonetheless, large osseous defects sometimes fail to heal, and the treatment of such large segmental defects still represents a considerable clinical challenge. Their regeneration often proves difficult because it relies on the formation of large amounts of bone within an environment that impedes osteogenesis, characterized by soft tissue damage and hampered vascularization. Consequently, research efforts have concentrated on tissue engineering and regenerative medicine strategies to resolve this multifaceted challenge. In this review, we summarize, critically evaluate, and discuss present approaches in light of their clinical relevance; we also present future advanced techniques for bone tissue engineering, outlining the steps required for their translation from bench to bedside.

HighlyCitedPapers.

📝 The Many Faces of Immune Activation in HIV-1 Infection: A Multifactorial Interconnection — Mazzuti, et al.

Full text is available 👇

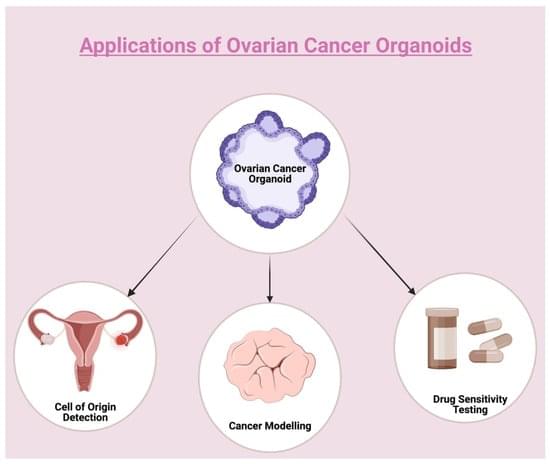

Ovarian cancer (OC) is the leading cause of death from gynecological malignancies. Despite great advances in treatment strategies, therapeutic resistance and the gap between preclinical data and actual clinical efficacy underscore the need for novel models for investigating OC. Organoids are revolutionary three-dimensional cell culture models that derive from stem cells and reflect the biology and pathology of the primary tissue. This review examines the current status of mouse- and patient-derived organoids, as well as their potential to model carcinogenesis and perform drug screening for OC.

Just a few months after the previous record was set, a start-up called Quantinuum has announced that it has entangled the largest number of logical qubits yet, a capability that will be key to quantum computers that can correct their own errors.

Dyson spheres and rings have always held a special fascination for me. The concept is simple: build a great big structure, either a sphere or a ring, to harness the energy from a star. Dyson rings are far simpler and more feasible to construct, and in a recent paper a team of scientists explores how we might detect them by analyzing the light from distant stars. The team suggests that their new technique might be able to detect Dyson rings around pulsars.

Like their spherical cousins, Dyson rings remain, for now, a popular idea in science fiction, yet they are appearing more and more often in scientific debate. The ring concept is similar to the sphere: a megastructure designed to encircle a star and harness its energy on a gargantuan scale.

A ring might consist of a series of satellites, or even habitats, in a circular orbit with solar collectors, and unlike a sphere it would require far fewer resources to build. The concept of the sphere was first proposed by physicist and mathematician Freeman Dyson in 1960. Such structures might be detectable and could reveal the existence of intelligent civilizations.

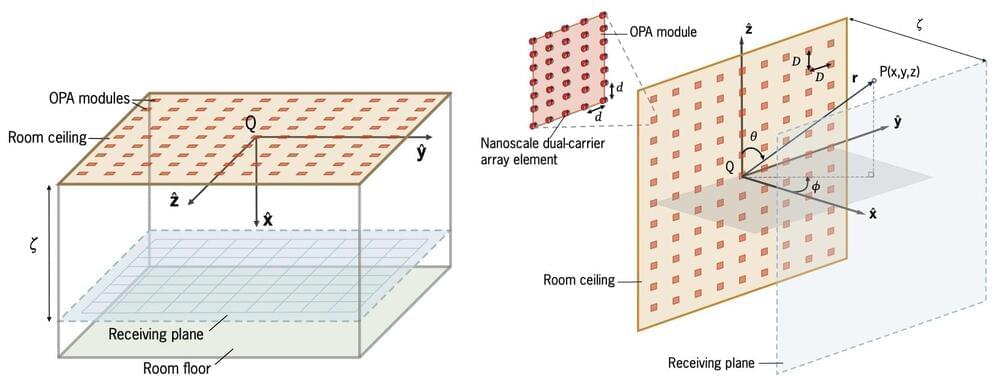

As our devices multiply and data demands grow, traditional wireless systems are hitting their limits. To meet these challenges, we at the University of Melbourne and Monash University have developed a dual-carrier Modular Optical Phased Array (MOPA) communication system. At its core is a modular phased array.

The design is inspired by the quantum superposition principle, applying its logic to improve performance and efficiency. The system is designed to make indoor wireless networks faster, more reliable and more secure while addressing the limitations of traditional systems. Our research is published in the IEEE Open Journal of the Communications Society.
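The post does not explain how the MOPA system steers light. As general background only, any phased array points its beam by superposing the waves from many emitting elements, each offset by a progressive phase shift; the main lobe forms in the direction where those waves add in phase. The sketch below models a generic uniform linear array, not the MOPA design itself. The function names, the 16-element count, the 1.55 µm wavelength and the half-wavelength spacing are all illustrative assumptions.

```python
import cmath
import math


def steering_phase(wavelength, spacing, steer_deg):
    """Progressive phase (radians) between adjacent elements that points the
    main lobe of a generic uniform linear array at steer_deg (0 = broadside)."""
    k = 2 * math.pi / wavelength
    return -k * spacing * math.sin(math.radians(steer_deg))


def array_factor(theta_deg, n_elements, wavelength, spacing, phase_step):
    """Normalized |AF| at theta_deg: the coherent sum (superposition) of the
    fields from all elements, divided by the element count so the peak is 1."""
    k = 2 * math.pi / wavelength
    psi = k * spacing * math.sin(math.radians(theta_deg)) + phase_step
    total = sum(cmath.exp(1j * n * psi) for n in range(n_elements))
    return abs(total) / n_elements


def main_lobe_angle(n_elements, wavelength, spacing, phase_step, step_deg=0.1):
    """Scan -90..90 degrees on a fixed grid and return the strongest direction."""
    n_steps = round(180 / step_deg)
    angles = [-90.0 + i * step_deg for i in range(n_steps + 1)]
    return max(angles, key=lambda a: array_factor(a, n_elements, wavelength, spacing, phase_step))


lam = 1.55e-6   # assumed telecom-band wavelength in metres (illustrative)
d = lam / 2     # half-wavelength spacing keeps grating lobes out of view
phase = steering_phase(lam, d, 20.0)
print(round(main_lobe_angle(16, lam, d, phase), 1))  # prints 20.0
```

Changing only the phase step re-points the beam without moving any hardware, which is the property that makes phased arrays attractive for steering optical links indoors; how MOPA splits this across modules and two carriers is not described in the post.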

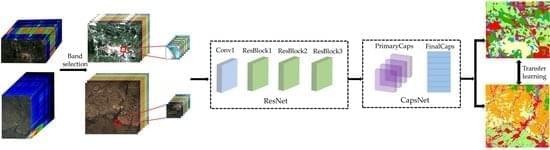

Land cover classification (LCC) of heterogeneous mining areas is important for understanding the influence of mining activities on regional geo-environments. Hyperspectral remote sensing images (HSI) provide rich spectral information that supports LCC. Convolutional neural networks (CNNs) improve the performance of hyperspectral image classification through their powerful feature learning ability. However, when pixel-wise spectra are used as inputs, CNNs cannot effectively capture spatial relationships. To address this lack of spatial information, capsule networks use vectors to represent position transformation information. Herein, we combine a clustering-based band selection method with residual and capsule networks to create a deep model named ResCapsNet. We tested the robustness of ResCapsNet using Gaofen-5 imagery. The images covered two heterogeneous study areas, in Wuhan City and in Xinjiang, with spatially weakly dependent and spatially basically independent datasets, respectively. Compared with other methods, the model achieved the best performance, with averaged overall accuracies of 98.45% and 82.80% for the Wuhan study area and 92.82% and 70.88% for the Xinjiang study area. Four transfer learning methods were investigated for cross-training and prediction across the two areas and achieved good results. In summary, the proposed model can effectively improve the classification accuracy of HSI in heterogeneous environments.
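The abstract does not spell out ResCapsNet's layers. As general background, in the original capsule-network formulation (Sabour et al., 2017, "Dynamic Routing Between Capsules") each capsule outputs a vector whose direction encodes pose or position properties and whose length, after a "squash" nonlinearity, acts as the probability that an entity is present. The following is a minimal pure-Python sketch of that squash step only; it is not ResCapsNet's code, and the helper names are hypothetical.

```python
import math


def squash(s):
    """Capsule squash nonlinearity: v = (|s|^2 / (1 + |s|^2)) * s / |s|.
    Keeps the vector's direction and maps its length into [0, 1)."""
    norm_sq = sum(x * x for x in s)
    if norm_sq == 0.0:
        return [0.0 for _ in s]  # zero input stays zero (avoids division by zero)
    scale = norm_sq / (1.0 + norm_sq) / math.sqrt(norm_sq)
    return [scale * x for x in s]


def vector_length(v):
    """Euclidean length, read as the capsule's 'entity present' probability."""
    return math.sqrt(sum(x * x for x in v))


print(round(vector_length(squash([3.0, 4.0])), 4))  # prints 0.9615 (long vector -> near 1)
print(round(vector_length(squash([0.1, 0.0])), 4))  # prints 0.0099 (short vector -> near 0)
```

Because squashing rescales length but preserves direction, later layers can still read the spatial information carried by the vector. The routing step between capsule layers, and the clustering-based band selection and residual blocks the abstract mentions, are omitted here.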

Saar Yoskovitz is Co-Founder & CEO at Augury, a pioneer in AI-driven Machine Health and Process Health solutions for industrial sectors.

American manufacturers are at a crossroads, needing to decide between evolution and obsolescence. The tools that historically drove profitability and efficiency are no longer having an impact. Labor is hard to find and harder to keep. The National Association of Manufacturers projects that 2.1 million manufacturing roles will go unfilled by 2030. This hard truth is compounded by findings in Augury's State of Production Health report, which reveals that 91% of manufacturers say the mass exodus of industry veterans will worsen the knowledge gap.

Brain drain is looming over the industrial sector at an alarming rate. As tenured employees reach retirement age and fewer professionals line up to take their place, more manufacturers are turning to artificial intelligence (AI) to bridge the gap.