LLMs use “Bag of Heuristics” to do approximate retrieval.

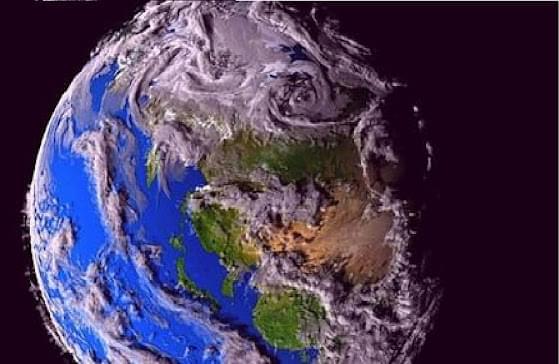

Can a planet be intelligent? That is the premise of a new hypothesis put forward by astrobiologists. The thought experiment rests on the idea that planets like Earth have been transformed by the collective activity of life, such as microorganisms and plants. In a sense, this activity has given them a life of their own.

The research, which was published in the International Journal of Astrobiology, establishes a framework for evaluating a planet’s intelligence. Attributing intelligence to a celestial body rather than to sentient creatures like humans is a startling prospect. But in a way, a planet can have a “green mind,” and this paradigm offers fresh perspectives on how to deal with crises like climate change and technological upheaval.

The researchers define planetary intelligence as knowledge and cognitive activity operating on a planetary scale. We usually apply the concept of intelligence to individuals and collective groups, and even to the curious behaviors of viruses or molds. The underground networks of fungi, for instance, sustain the life of forests: they form a living system that senses changing climate conditions and actively responds to them. Activity of this kind can profoundly alter the condition of the entire planet.

Using this computational model, researchers may be able to identify antibody drugs that target a variety of infectious diseases.

MIT researchers have developed a computational technique that allows large language models to predict antibody structures more accurately. Their work could enable researchers to sift through millions of possible antibodies to identify those that could be used to treat SARS-CoV-2 and other infectious diseases.

Check out the full article here: https://www.wevolver.com/article/a-new-computational-model-c…accurately.

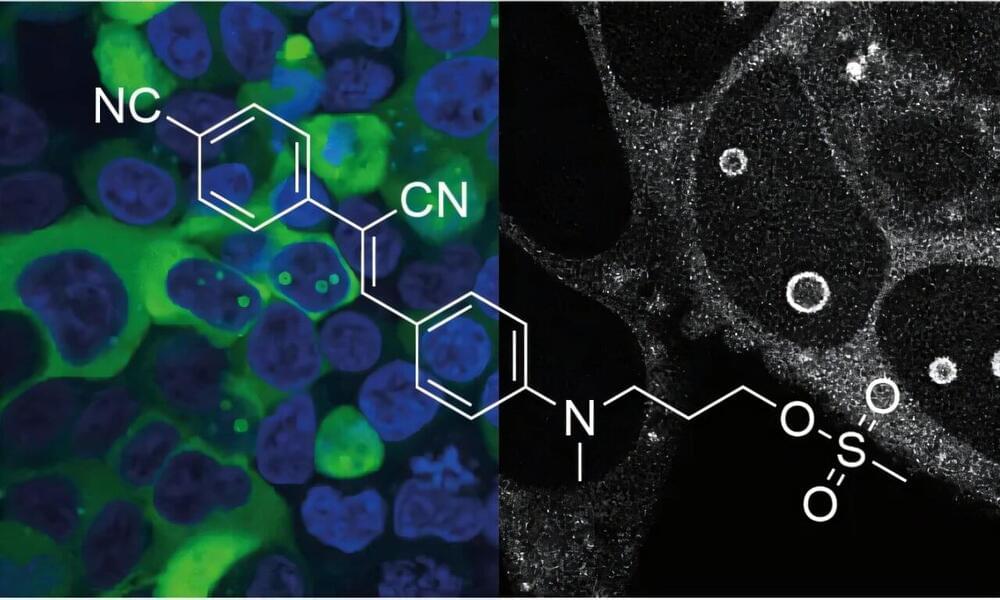

The specific labeling of RNA in living cells poses many challenges. In a new article published in the journal Nature Chemical Biology, researchers from the University of Innsbruck describe a structure-guided approach to the formation of covalent (i.e., irreversibly tethered) RNA-ligand complexes.

The key is to modify the original ligand with a reactive “handle” that allows it to react with a nucleobase at the RNA binding site. The researchers first demonstrated this in vitro and in vivo using an RNA riboswitch.

The versatility of the approach is highlighted by the first covalent “fluorescent light-up RNA aptamer” (coFLAP). This system retains its strong fluorescence during imaging in living cells even after washing, can be used for high-resolution microscopy and is particularly suitable for FRAP (fluorescence recovery after photobleaching) for monitoring intracellular RNA dynamics.

The National Weather Service issued a red flag warning (warm temperatures, strong winds and low humidity) for Southern California that spans from Tuesday to Wednesday in the Santa Barbara, Los Angeles and Ventura counties, and from Tuesday to Thursday in the San Bernardino, Orange, Riverside and San Diego counties.

Sporadic power outages have occurred in the San Fernando Valley, a densely populated area north of the Hollywood Hills, with the Los Angeles Department of Water and Power reporting a few thousand customers without power as of 5 p.m. PST.

Green tech innovations to combat climate change.

As the world faces the accelerating impacts of climate change, there is an urgent need for solutions that reduce greenhouse gas emissions, promote sustainability, and protect the environment. Green technology, or “green tech,” is playing a critical role in this fight. The field focuses on creating products, services, and systems that minimize the environmental impact of human activities. In this article, we explore the latest green tech innovations and how they are transforming industries and contributing to a greener planet.

NeuroXess, a Chinese startup, has made two major breakthroughs in brain-computer interface (BCI) technology while helping a brain-damaged patient: decoding thoughts into speech in real time, and remotely controlling a robotic arm using thought alone.

Testing of the startup’s new BCI also enabled the user to talk to an artificial intelligence (AI) model and operate a digital avatar. These tests are the result of an experiment undertaken in August 2024 at Huashan Hospital, where neurosurgeons implanted a 256-channel, high-throughput flexible BCI device into the patient’s brain.

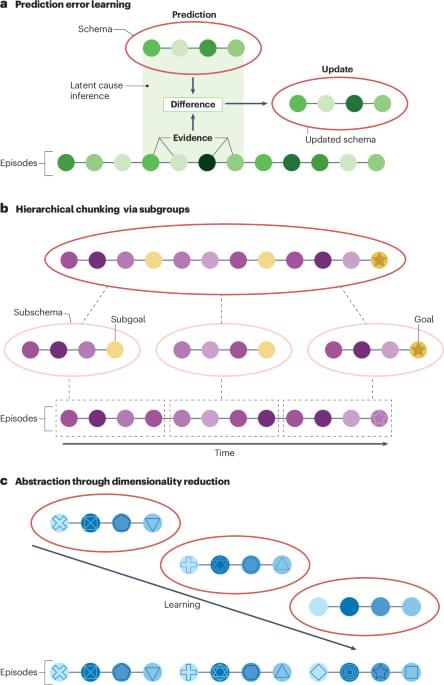

A computational account of how schemas are learned through experience is lacking. In this Perspective, Bein and Niv synthesize schema theory and reinforcement learning research to derive computational principles that might govern schema learning, and then propose that schema learning is mediated by dimensionality reduction in the medial prefrontal cortex.

Summary: While humans share over 95% of their genome with chimpanzees, our brains are far more complex because of differences in gene expression. Research shows that human brain cells, particularly glial cells, have more upregulated genes, which enhances neural plasticity and development.

Oligodendrocytes, a glial cell type, play a key role by insulating neurons for faster and more efficient signaling. This study underscores that the evolution of human intelligence likely involved coordinated changes across all brain cell types, not just neurons.