The call for papers on senescence in brain aging and Alzheimer’s disease is still open!

Submit your paper today! 📩

Understanding Senescence in Brain Aging and Alzheimer’s Disease

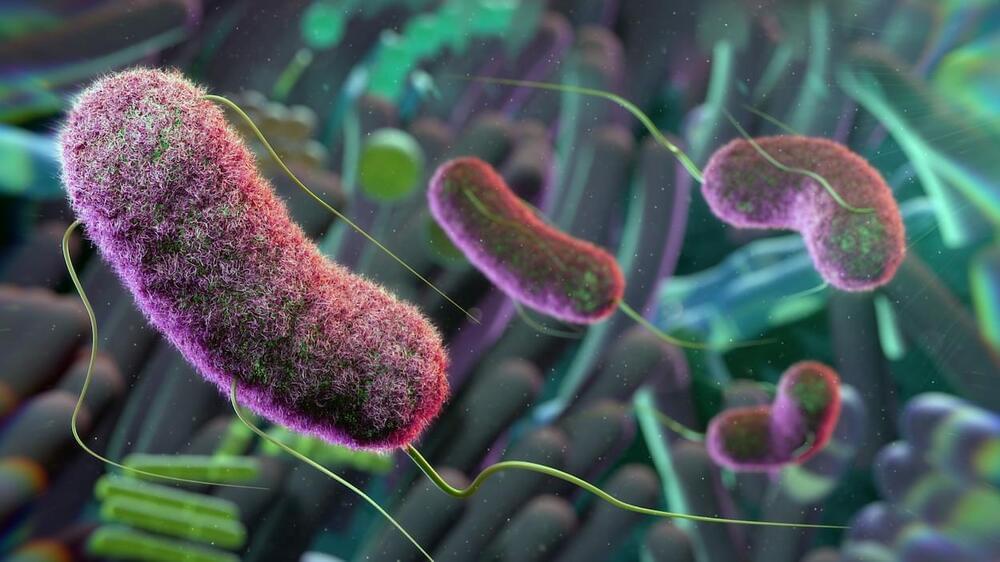

Guest Editors Drs. Julie Andersen and Darren Baker, Associate Editor Dr. Anna Csiszar, Editor-in-Chief Dr. Zoltan Ungvari, and the editorial team of GeroScience (Journal of the American Aging Association; 2018 Impact Factor: 6.44) invite submissions of original research articles, opinion papers and review articles on the role of senescence in brain aging and in Alzheimer’s disease. Senescent cells accumulate with aging and in pathological conditions associated with accelerated aging. While earlier investigations focused on cellular senescence in tissues and cells outside the brain (e.g., adipose tissue, dermal fibroblasts, cells of the cardiovascular system), more recent studies have begun to explore the role of senescent cells in the age-related decline of brain function and in the pathogenesis of neurodegenerative disease and vascular cognitive impairment. This call for papers aims to provide a platform for disseminating critical novel ideas on the functional and physiological consequences of senescence in diverse brain cell types (e.g., oligodendrocytes, pericytes, astrocytes, endothelial cells, microglia, neural stem cells), with the ultimate goal of identifying novel targets for the treatment and prevention of Alzheimer’s disease, Parkinson’s disease and vascular cognitive impairment. We welcome manuscripts focusing on senescent-cell-targeting mouse models, the role of paracrine senescence, senescence pathways in terminally differentiated neurons, the pleiotropic effects of systemic senescence, the role of senescence in neuroinflammation and the protective effects of senolytic therapies. We are especially interested in manuscripts exploring the causal role of molecular mechanisms of aging in the induction of cellular senescence, as well as links between cellular senescence in the brain and lifestyle (e.g., diet, exercise, smoking), medical treatments (e.g., cancer treatments) and exposure to environmental toxicants.
We encourage submissions describing innovative strategies to identify and target senescent cells for the prevention and treatment of age-related diseases of the brain. Authors are also encouraged to submit manuscripts focusing on translational aspects of senescence research.

All manuscripts accepted from this call for papers will be included in a dedicated online article collection to further highlight the importance of this topic. All manuscripts should be submitted online at: https://www.editorialmanager.com/jaaa/default.aspx.