Breakthroughs in synthetic biology could create mirror versions of natural molecules, with devastating consequences for life on Earth.

By Simon Makin

Mosasaurs, extinct marine reptiles that dominated Earth’s oceans during the Late Cretaceous period, have fascinated scientists since their discovery in 1766 near Maastricht, Netherlands. These formidable lizards are iconic examples of macroevolution, showcasing the emergence of entirely new animal groups.

Michael Polcyn, a paleontologist from Utrecht University, has presented the most comprehensive study yet on their early evolution, ecology, and feeding biology. His findings, aided by advanced imaging technologies, provide fresh insights into the origins, relationships, and behaviors of these ancient giants.

Microorganisms produce a wide variety of natural products that can serve as active ingredients in treatments for diseases such as infections or cancer. The blueprints for these molecules are encoded in the microbes' genes, but those genes often remain inactive under laboratory conditions.

A team of researchers at the Helmholtz Institute for Pharmaceutical Research Saarland (HIPS) has now developed a genetic method that harnesses a natural bacterial mechanism for transferring genetic material to produce new active ingredients. The team has published its results in the journal Science.

Unlike humans, bacteria can exchange genetic material with one another. A well-known example with far-reaching consequences is the transfer of antibiotic resistance genes between bacterial pathogens. This gene transfer allows bacteria to adapt quickly to changing environmental conditions and is a major driver of the spread of antibiotic resistance.

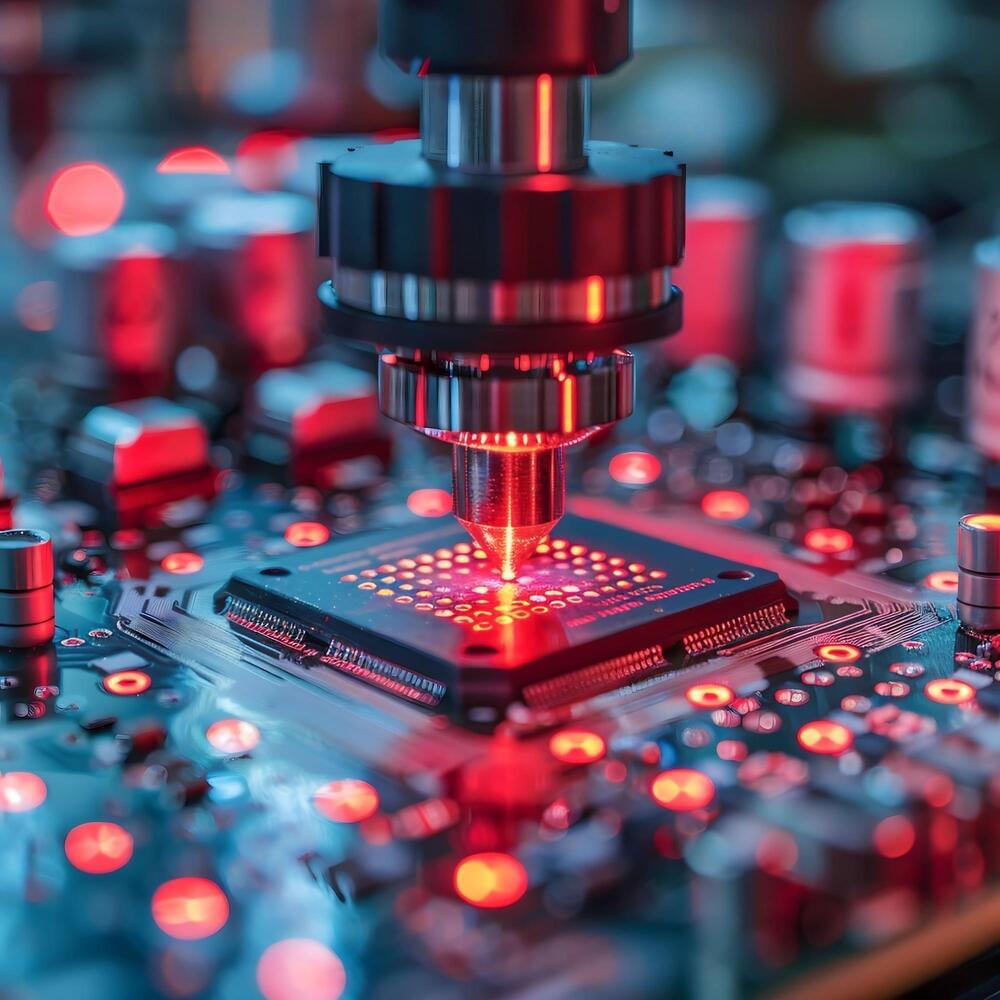

Debashis Chanda, a professor at the University of Central Florida's (UCF) NanoScience Technology Center, has developed a new technique to detect long-wave infrared (LWIR) photons of different wavelengths, or "colors."

The research was recently published in Nano Letters.

The new detection and imaging technique could find applications in spectroscopic imaging, which analyzes materials by their spectral properties, as well as in thermal imaging.

Astrocytes are star-shaped glial cells in the central nervous system that support neuronal function, maintain the blood-brain barrier, and contribute to brain repair and homeostasis. The evolution of these cells throughout the progression of Alzheimer’s disease (AD) is still poorly understood, particularly when compared to that of neurons and other cell types.

Researchers at Massachusetts General Hospital, the Massachusetts Alzheimer's Disease Research Center, Harvard Medical School and AbbVie Inc. set out to fill this gap in the literature.

Their paper, published in Nature Neuroscience, offers one of the most detailed accounts to date of how different astrocyte subclusters respond to AD across brain regions and disease stages, shedding light on the cellular dynamics of the disease.

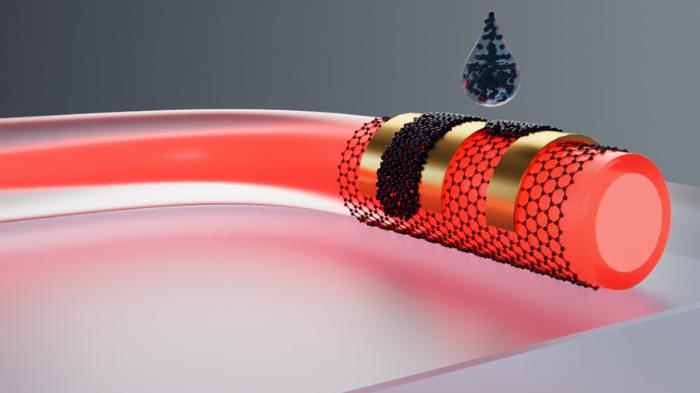

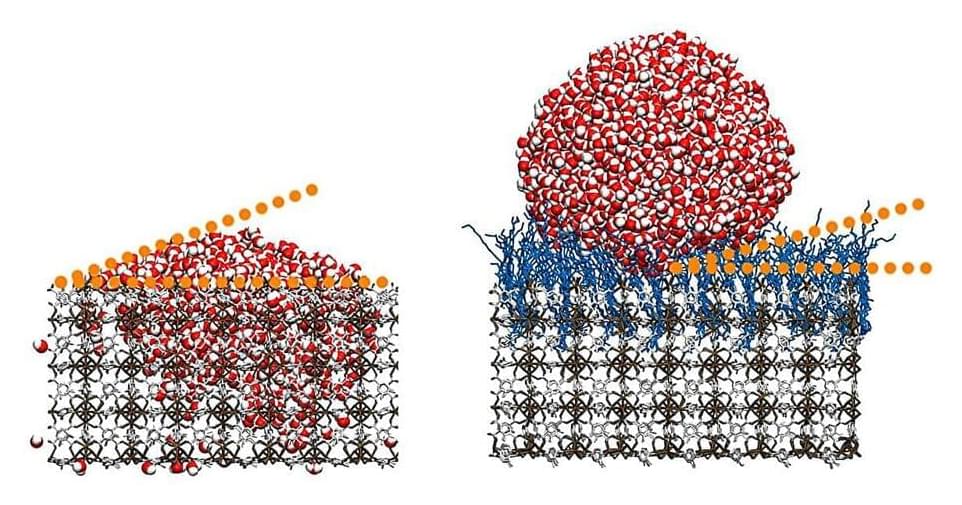

Scientists from Karlsruhe Institute of Technology (KIT) and the Indian Institute of Technology Guwahati (IITG) have developed a surface material that repels water droplets almost completely. Using a novel process, they modified metal-organic frameworks (MOFs), artificially designed materials with unusual properties, by grafting hydrocarbon chains onto them.

The resulting superhydrophobic (extremely water-repellent) properties make the material attractive for self-cleaning surfaces that must withstand environmental exposure, for example on automobiles or in architecture. The study was published in the journal Materials Horizons.

MOFs are composed of metals and organic linkers that form a sponge-like network riddled with empty pores. Their enormous internal surface area (unfolding two grams of this material would cover a football pitch) makes them interesting for applications such as gas storage, carbon dioxide separation, or novel medical technologies.
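The football-pitch comparison is really a statement about specific surface area, the surface area per gram of material. The rough sketch below turns it into numbers. It assumes pitch dimensions between 100 m x 64 m and 110 m x 75 m, with 105 m x 68 m as a typical size; the source gives no dimensions, so these figures are illustrative assumptions, not measured values for the KIT/IITG material.

```python
# Back-of-the-envelope check: "two grams of MOF unfolded ≈ one football pitch".
# ASSUMPTION: pitch sizes below are illustrative; the source states no dimensions.

SAMPLE_MASS_G = 2.0  # mass quoted in the text

# (length_m, width_m) for a small, a typical, and a large pitch (assumed)
PITCH_SIZES_M = {
    "small": (100.0, 64.0),
    "typical": (105.0, 68.0),
    "large": (110.0, 75.0),
}


def specific_surface_area(area_m2: float, mass_g: float) -> float:
    """Return surface area per unit mass, in m^2/g."""
    if mass_g <= 0:
        raise ValueError("mass must be positive")
    return area_m2 / mass_g


def implied_ssa_by_pitch(mass_g: float) -> dict:
    """Map each assumed pitch size to the specific surface area it implies."""
    results = {}
    for label, (length, width) in PITCH_SIZES_M.items():
        area = length * width  # pitch area in m^2
        results[label] = specific_surface_area(area, mass_g)
    return results


if __name__ == "__main__":
    for label, ssa in implied_ssa_by_pitch(SAMPLE_MASS_G).items():
        print(f"{label:>8} pitch -> about {ssa:,.0f} m^2 per gram")
```

Under these assumptions, the comparison implies roughly 3,200 to 4,100 m² per gram, with about 3,570 m² per gram for the typical pitch size.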