At some point, theoretical physics shades into science fiction. This is a beautiful little book, by a celebrated physicist and writer, about a phenomenon that is permitted by equations but might not actually exist. Or perhaps white holes do exist, and are everywhere: we just haven’t noticed them yet. No such controversy exists about black holes, wh…

At the cutting edge of genetic engineering, programmable recombinases and zinc finger domains are ushering in a new era of precision DNA manipulation. These tools enable targeted genomic alterations, from single-nucleotide changes to the insertion of large DNA segments, and could transform therapeutic gene editing and open new possibilities in personalised medicine.

Nvidia’s Blackwell isn’t taking any prisoners.

A monster of a chip that combines two dies.

Hopper delivers about 4,000 teraFLOPS (4 petaFLOPS) at FP8, while Blackwell reaches 20,000 teraFLOPS (20 petaFLOPS) at FP4.
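The two headline numbers are quoted at different precisions, so their 5x ratio is not a like-for-like comparison. The sketch below reads both figures as per-GPU peak teraFLOPS and assumes, as a rough rule of thumb rather than a published spec, that halving precision doubles peak throughput. `ASSUMED_FP8_PER_FP4` is that hypothetical conversion factor.

```python
# Per-GPU peak figures from the keynote line, read here as teraFLOPS (an assumption).
HOPPER_FP8_TFLOPS = 4_000
BLACKWELL_FP4_TFLOPS = 20_000

# Assumption: peak throughput roughly doubles each time precision is halved,
# so an FP8-equivalent Blackwell figure is estimated at half its FP4 figure.
ASSUMED_FP8_PER_FP4 = 0.5


def speedup(new_tflops: float, old_tflops: float) -> float:
    """Ratio of two peak-throughput figures."""
    return new_tflops / old_tflops


headline = speedup(BLACKWELL_FP4_TFLOPS, HOPPER_FP8_TFLOPS)
precision_matched = speedup(BLACKWELL_FP4_TFLOPS * ASSUMED_FP8_PER_FP4, HOPPER_FP8_TFLOPS)
print(f"headline (FP4 vs FP8): {headline:.1f}x")
print(f"estimated like-for-like (FP8 vs FP8): {precision_matched:.1f}x")
```

This prints 5.0x and 2.5x. Only the first ratio follows from the quoted figures; the second depends entirely on the assumed conversion factor.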

Kicking off the biggest GTC conference yet, NVIDIA founder and CEO Jensen Huang unveils NVIDIA Blackwell, NIM microservices, Omniverse Cloud APIs and more.

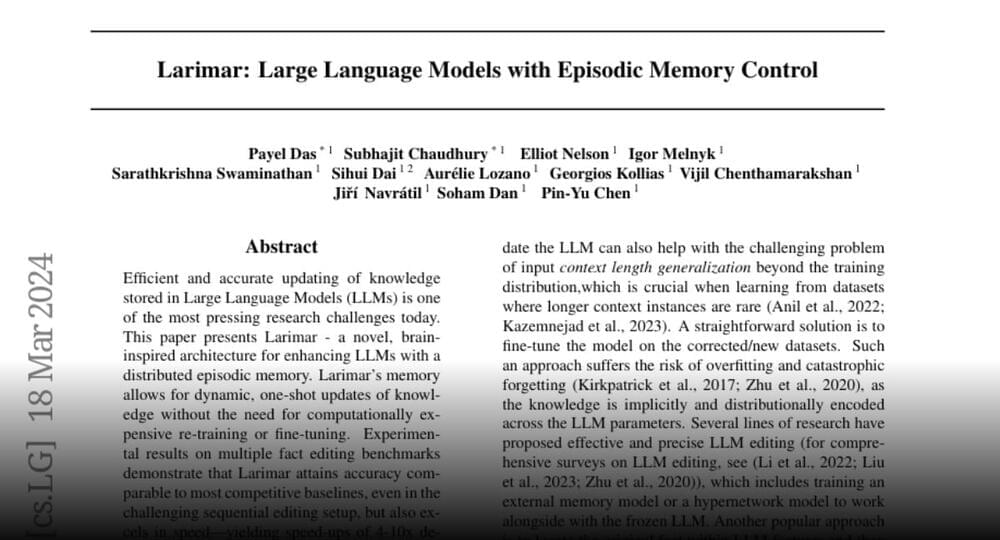

Larimar: Large Language Models with Episodic Memory Control.

Efficient and accurate updating of knowledge stored in Large Language Models (LLMs) is one of the most pressing research challenges today.


Tesla’s FSD Beta 12.3 shows improvement in navigating tight and congested roads, but still struggles with some scenarios and needs more work on winding mountain roads.

Questions to inspire discussion.

How does Tesla’s FSD Beta 12.3 perform on congested roads?

The FSD Beta 12.3 shows confidence and assertiveness in navigating tight and congested roads, with improved performance compared to previous versions.

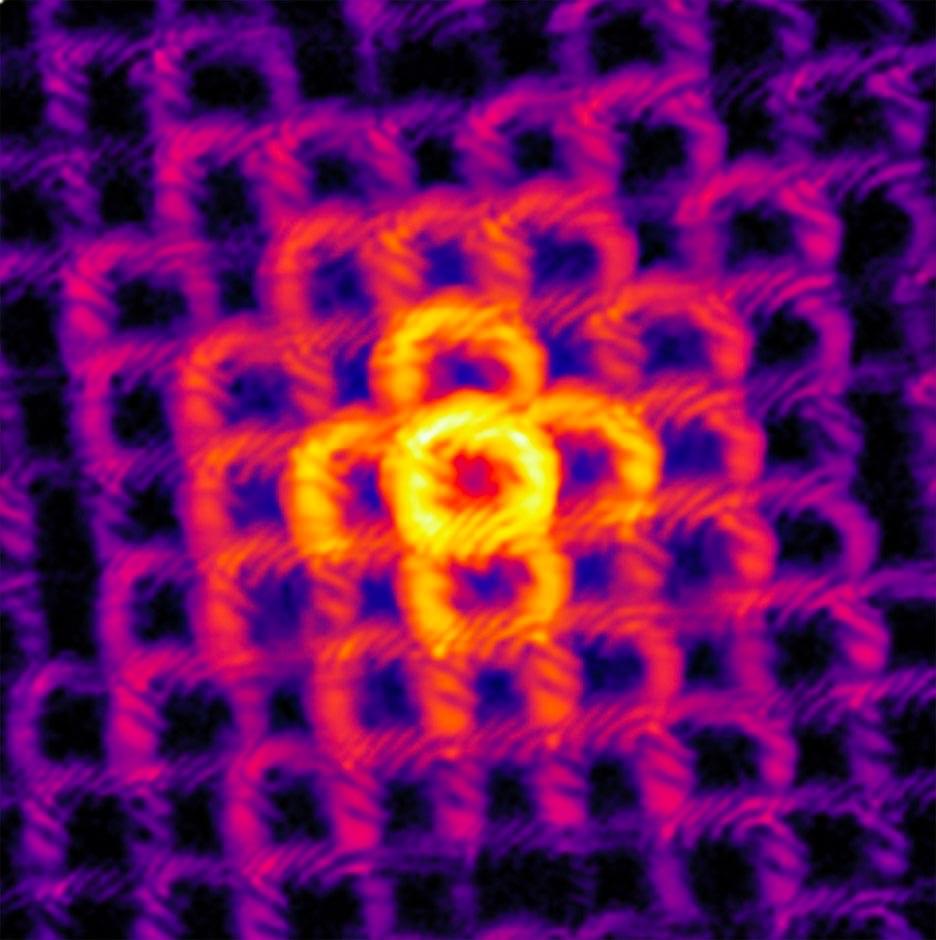

A new lensless-imaging method for studying nanoscale patterns in tiny electronic or photonic components enables near-perfect, high-resolution microscopy. Ptychography, a powerful form of lensless imaging, reconstructs an image from the scattered light collected as a beam scans across the sample, but it has struggled with periodic samples.
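One way to see why periodic samples are awkward for ptychography is a toy 1D forward model: the probe multiplies a patch of the object, and the detector records only the squared magnitude of the far field (here a plain DFT). This is an illustrative sketch, not the new method from the article; the Gaussian probe, the object patterns, and all names are hypothetical. When the scan step equals the sample period, every scan position produces the same diffraction pattern, so the overlapping measurements add no positional information.

```python
import cmath
import math


def dft(signal):
    """Naive discrete Fourier transform (O(n^2)); fine for a toy 1D example."""
    n = len(signal)
    return [
        sum(x * cmath.exp(-2j * math.pi * f * k / n) for k, x in enumerate(signal))
        for f in range(n)
    ]


def diffraction_intensity(obj, probe, position):
    """Far-field intensity for the probe placed at `position` on the object.

    Exit wave = probe times the illuminated object patch; the detector keeps
    only |far field|^2, so the phase is lost.
    """
    patch = [obj[(position + k) % len(obj)] for k in range(len(probe))]
    exit_wave = [p * o for p, o in zip(probe, patch)]
    return [abs(c) ** 2 for c in dft(exit_wave)]


def max_difference(a, b):
    return max(abs(x - y) for x, y in zip(a, b))


PROBE_WIDTH = 32
probe = [math.exp(-((k - PROBE_WIDTH / 2) / 6) ** 2) for k in range(PROBE_WIDTH)]

PERIOD = 8
# Hypothetical phase objects: one repeats every PERIOD samples, one never repeats.
periodic_obj = [cmath.exp(1j * math.pi * ((k % PERIOD) / PERIOD)) for k in range(128)]
aperiodic_obj = [cmath.exp(1j * 0.05 * k * k) for k in range(128)]

# Compare the patterns recorded at two scan positions one period apart.
same = max_difference(diffraction_intensity(periodic_obj, probe, 0),
                      diffraction_intensity(periodic_obj, probe, PERIOD))
diff = max_difference(diffraction_intensity(aperiodic_obj, probe, 0),
                      diffraction_intensity(aperiodic_obj, probe, PERIOD))
print(same, diff)
```

For the periodic object the two patterns are identical (`same` is exactly 0.0), while the aperiodic object gives distinct patterns that a reconstruction algorithm can use.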

Summary: Engaging in “transcendent thinking” (looking beyond the immediate context to understand deeper meanings and implications) can significantly influence adolescents’ brain development. The study highlights how this complex form of thinking fosters coordination between the brain’s executive control and default mode networks, a coordination that is crucial for psychological functioning.

Analyzing high school students’ responses to stories about teenagers from around the world, researchers found that transcendent thinking not only strengthens coordination between these brain networks over time but also predicts key psychosocial outcomes in young adulthood. The findings highlight the potential of civically minded education to support adolescents’ cognitive and emotional development.

Fermat’s Last Theorem puzzled mathematicians for centuries until Andrew Wiles announced a proof in 1993; a gap in it was closed in 1994 with help from Richard Taylor. Now, researchers want to create a version of the proof that a computer can formally check for errors in logic.
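What “formally checked” means can be sketched in Lean 4, one proof assistant used for this kind of work; the text does not name a tool, so that choice is an assumption. The snippet states the theorem over the natural numbers using only Lean’s core library (no Mathlib), and the theorem name is hypothetical. The `sorry` stands in for the missing proof, which Lean accepts only with a warning, whereas a small concrete fact such as 3² + 4² = 5² is verified outright by `decide`.

```lean
-- Statement only: `sorry` marks the proof as missing, and Lean reports it as unproven.
theorem fermat_last_theorem (n a b c : Nat) (hn : 2 < n)
    (ha : 0 < a) (hb : 0 < b) (hc : 0 < c) :
    a ^ n + b ^ n ≠ c ^ n := by
  sorry

-- By contrast, this concrete fact is fully checked: n = 2 does have solutions.
example : 3 ^ 2 + 4 ^ 2 = 5 ^ 2 := by decide
```

A completed formalization would replace every `sorry` with proof steps that the Lean kernel checks mechanically.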

By Alex Wilkins

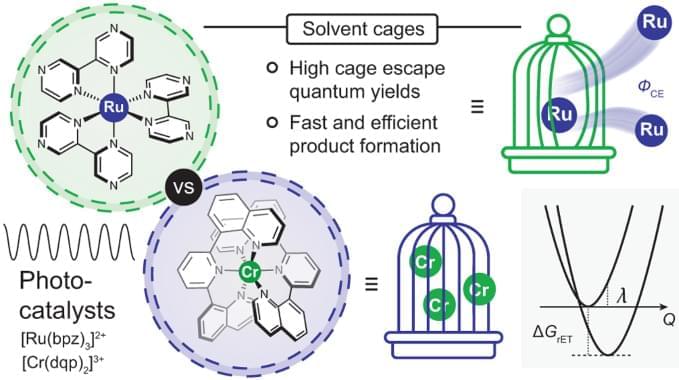

The ³MLCT-excited [Ru(bpz)₃]²⁺ and the spin-flip excited states of [Cr(dqp)₂]³⁺ underwent photoinduced electron-transfer reactions with 12 amine-based electron donors similarly well, but provided cage escape quantum yields differing by up to an order of magnitude. In three exemplary benchmark photoredox reactions performed with different electron donors, the differences in the reaction rates observed when using either [Ru(bpz)₃]²⁺ or [Cr(dqp)₂]³⁺ as photocatalyst correlated with the magnitude of the cage escape quantum yields. These correlations indicate that the cage escape quantum yields play a decisive role in the reaction rates and quantum efficiencies of the photoredox reactions, and also illustrate that luminescence quenching experiments are insufficient for obtaining quantitative insights into photoredox reactivity.

From a purely physical chemistry perspective, these findings are not a priori surprising, as the rate of photoproduct formation in an overall reaction comprising several consecutive elementary steps can be expressed as the product of the quantum yields of the individual elementary steps [45,46]. A recent report on solvent-dependent cage escape and photoredox studies suggested that correlations between photoredox product formation rates and cage escape quantum yields might be observable [11], but we are unaware of previous reports that have been able to demonstrate that the rate of product formation in several batch-type photoreactions correlates with the cage escape quantum yields determined from laser experiments. Synthetic photochemistry and mechanistic investigations are often conducted under substantially different conditions, which can lead to controversial discrepancies [47,48,49], whereas here their mutual agreement seems remarkable, particularly given the complexity of the overall reactions.
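The product rule for consecutive elementary steps can be made concrete with a few lines of arithmetic. All step yields below are hypothetical, chosen only to illustrate the argument: quenching is kept equal for both photocatalysts (they react with the donors “similarly well”), the downstream dark steps are lumped into one assumed yield, and the cage escape yields differ ten-fold, the upper end of the range quoted above.

```python
from math import prod


def overall_quantum_yield(step_yields):
    """Overall yield of a chain of consecutive elementary steps: the product of the step yields."""
    return prod(step_yields)


# Hypothetical step yields (illustrative only, not measured values).
QUENCHING = 0.9    # excited-state quenching, assumed equal for both photocatalysts
DOWNSTREAM = 0.5   # all later dark steps lumped together, assumed equal
CAGE_ESCAPE = {"Ru": 0.40, "Cr": 0.04}  # ten-fold difference in cage escape

overall = {
    name: overall_quantum_yield([QUENCHING, phi_ce, DOWNSTREAM])
    for name, phi_ce in CAGE_ESCAPE.items()
}
ratio = overall["Ru"] / overall["Cr"]
print(overall, round(ratio, 6))
```

Because every other factor is shared, the ten-fold difference in cage escape passes straight through to a ten-fold difference in overall yield, which is why quenching data alone cannot predict the product formation rate.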

The available data and the presented analysis suggest that the different cage escape behaviours of [Ru(bpz)₃]²⁺ and [Cr(dqp)₂]³⁺ originate in the fact that, for any given electron donor, in-cage reverse electron transfer is ~0.3 eV more exergonic for the Ru(II) complex than for the Cr(III) complex. Thermal reverse electron transfer between caged radical pairs therefore occurs more deeply in the Marcus inverted region with [Ru(bpz)₃]²⁺ than with [Cr(dqp)₂]³⁺, decelerating in-cage charge recombination for the Ru(II) complex and increasing its cage escape quantum yields compared with the Cr(III) complex (Fig. 3D).
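The inverted-region argument can be illustrated with the classical Marcus expression, k = A·exp(−(ΔG° + λ)²/(4λk_BT)), combined with a simple competition between cage escape and in-cage recombination, Φ_CE = k_esc/(k_esc + k_BET). This is a minimal sketch under stated assumptions: the reorganization energy, prefactor, escape rate, and driving forces are hypothetical round numbers, not values fitted to the paper’s data. The only feature taken from the text is the 0.3 eV larger driving force for the Ru case.

```python
import math

K_B_EV = 8.617333262e-5  # Boltzmann constant, eV/K


def marcus_rate(delta_g_ev, reorg_ev, prefactor=1e13, temperature_k=298.0):
    """Classical Marcus rate: k = A * exp(-(dG + lambda)^2 / (4 * lambda * kB * T))."""
    kt = K_B_EV * temperature_k
    return prefactor * math.exp(-((delta_g_ev + reorg_ev) ** 2) / (4.0 * reorg_ev * kt))


def cage_escape_yield(k_escape, k_recombination):
    """Fraction of caged radical pairs that separate before recombining in the cage."""
    return k_escape / (k_escape + k_recombination)


# Hypothetical parameters (illustrative, not fitted to the paper's data).
REORG_EV = 1.0   # reorganization energy lambda, eV
K_ESCAPE = 5e9   # diffusive cage escape rate, s^-1, assumed equal for both
DELTA_G_BET = {"Cr": -1.6, "Ru": -1.9}  # Ru case 0.3 eV more exergonic

# Both driving forces exceed lambda, i.e. both lie in the Marcus inverted region.
k_bet = {name: marcus_rate(dg, REORG_EV) for name, dg in DELTA_G_BET.items()}
phi_ce = {name: cage_escape_yield(K_ESCAPE, k) for name, k in k_bet.items()}

for name in DELTA_G_BET:
    print(f"{name}: k_BET = {k_bet[name]:.2e} s^-1, cage escape yield = {phi_ce[name]:.3f}")
```

With these assumed numbers the more exergonic (Ru) recombination is slower, so more radical pairs escape the cage, reproducing the qualitative trend described above; the size of the effect depends strongly on the chosen λ.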

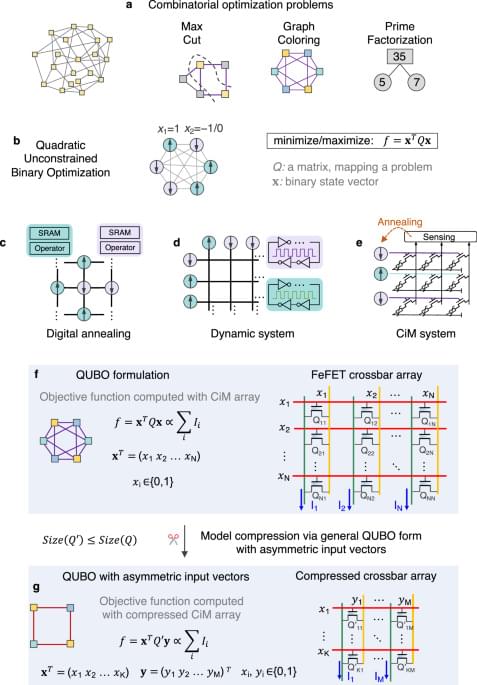

Yin et al. realize a compute-in-memory annealer based on ferroelectric field-effect transistors (FeFETs) as an efficient combinatorial optimization solver, through algorithm-hardware co-design that combines a FeFET chip, lossless matrix compression, and a multi-epoch simulated annealing algorithm.
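For readers unfamiliar with the software side, here is a plain-software sketch of simulated annealing on max-cut, a standard combinatorial optimization problem. It is not the authors’ algorithm: treating “epochs” as independent cooling runs from random starts is an assumed reading, and the cooling schedule, parameters, and function names are hypothetical. The neighbour lookups stand in for the coupling-matrix rows that a compute-in-memory array would evaluate in hardware.

```python
import math
import random


def cut_value(edges, spins):
    """Number of edges whose endpoints sit on opposite sides (opposite spins)."""
    return sum(1 for i, j in edges if spins[i] != spins[j])


def anneal_max_cut(n, edges, epochs=5, steps=2000, t_start=2.0, t_end=0.05, seed=0):
    """Metropolis simulated annealing for max-cut, restarted for several epochs.

    'Epochs' here are independent cooling runs from random starts; this is an
    assumed reading, not the paper's multi-epoch scheme.
    """
    rng = random.Random(seed)
    # Neighbour lists stand in for the rows of the coupling matrix that a
    # compute-in-memory array would hold.
    neighbours = [[] for _ in range(n)]
    for i, j in edges:
        neighbours[i].append(j)
        neighbours[j].append(i)

    best_spins, best_cut = None, -1
    for _ in range(epochs):
        spins = [rng.choice((-1, 1)) for _ in range(n)]
        cut = cut_value(edges, spins)
        if cut > best_cut:
            best_spins, best_cut = spins[:], cut
        for step in range(steps):
            # Geometric cooling schedule from t_start down to t_end.
            t = t_start * (t_end / t_start) ** (step / (steps - 1))
            k = rng.randrange(n)
            # Flipping k cuts its edges to same-spin neighbours and un-cuts the rest.
            same = sum(1 for m in neighbours[k] if spins[m] == spins[k])
            gain = same - (len(neighbours[k]) - same)
            if gain >= 0 or rng.random() < math.exp(gain / t):
                spins[k] = -spins[k]
                cut += gain
                if cut > best_cut:
                    best_spins, best_cut = spins[:], cut
    return best_spins, best_cut


def brute_force_max_cut(n, edges):
    """Exhaustive check, feasible only for tiny graphs."""
    return max(
        cut_value(edges, [1 if (mask >> i) & 1 else -1 for i in range(n)])
        for mask in range(2 ** n)
    )


# Usage on a small graph: a 6-cycle with one chord.
EDGES = [(i, (i + 1) % 6) for i in range(6)] + [(0, 3)]
spins, cut = anneal_max_cut(6, EDGES)
print(cut, brute_force_max_cut(6, EDGES))
```

The incremental `cut += gain` update mirrors why in-memory hardware helps: each move needs only one row of couplings, not a full re-evaluation of the objective.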