Seedless blackberry, CRISPR.

US company introduces the world’s first seedless blackberry, revolutionizing fruit consumption and market dynamics.

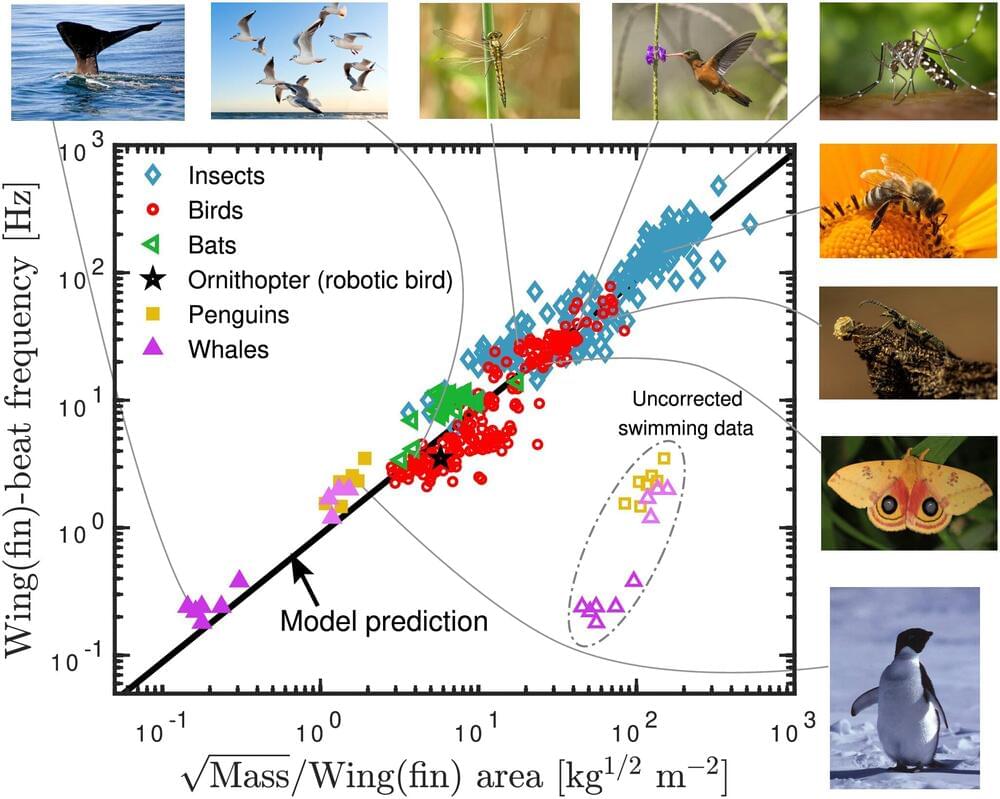

A single universal equation can closely approximate the frequency of wingbeats and fin strokes made by birds, insects, bats and whales, despite their different body sizes and wing shapes, Jens Højgaard Jensen and colleagues from Roskilde University in Denmark report in a new study published in PLOS ONE on June 5.

The ability to fly has evolved independently in many different animal groups. Because flapping at resonance minimizes the energy required to fly, biologists expect the frequency at which animals flap their wings to be determined by the natural resonance frequency of the wing. However, finding a universal mathematical description of flapping flight has proved difficult.

The researchers used dimensional analysis to derive an equation that describes the wingbeat frequency of flying birds, insects and bats, and the fin-stroke frequency of diving animals, including penguins and whales.
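
A rough sketch of how a dimensional argument of this kind can run is given here. The symbols and the specific form are assumptions for illustration, not quotations from the study. Suppose the flapping frequency $f$ depends only on body mass $m$, wing area $A$, gravitational acceleration $g$ and the density $\rho$ of the surrounding air. If the wing moves at a speed of order $f\sqrt{A}$, the force it generates scales as $\rho A (f\sqrt{A})^2$, and balancing that force against the animal's weight gives:

$$
m g \sim \rho A^{2} f^{2}
\quad\Longrightarrow\quad
f \sim \frac{1}{A}\sqrt{\frac{m g}{\rho}}
$$

The units check out: $\sqrt{mg/\rho}$ has units of $\mathrm{m^2/s}$, and dividing by an area leaves $\mathrm{s^{-1}}$. For animals flying in the same air under the same gravity, the estimate reduces to $f \propto \sqrt{m}/A$, so a heavier animal with the same wing area would be expected to flap faster, and a larger wing area would lower the frequency.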

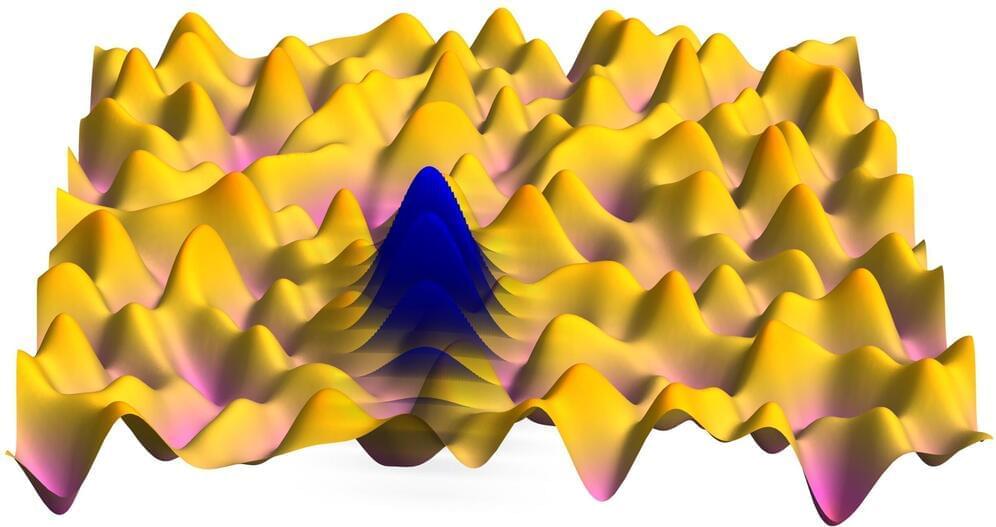

Researchers at Harvard University, Sabanci University, and Peking University recently gathered findings that could shed light on the origin of the high-temperature absorption peaks observed in strange metals, a class of materials exhibiting unusual electronic properties that do not conform to the conventional theory of metals.

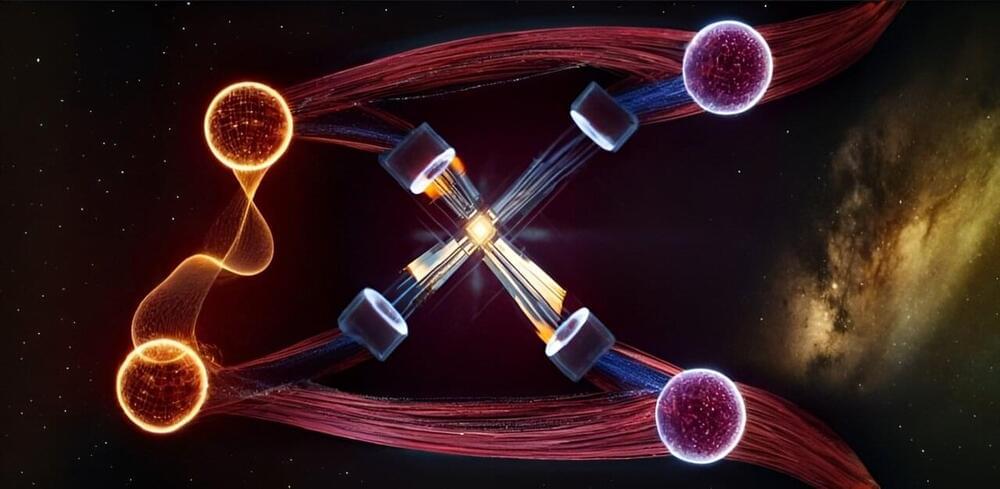

In a development at the intersection of quantum mechanics and general relativity, researchers have made significant strides toward unraveling the mysteries of quantum gravity. This work sheds new light on future experiments that hold promise for resolving one of the most fundamental enigmas in modern physics: the reconciliation of Einstein’s theory of gravity with the principles of quantum mechanics.