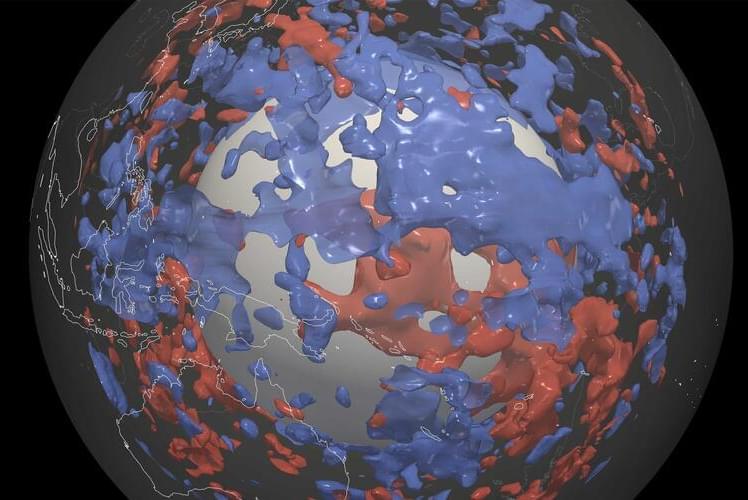

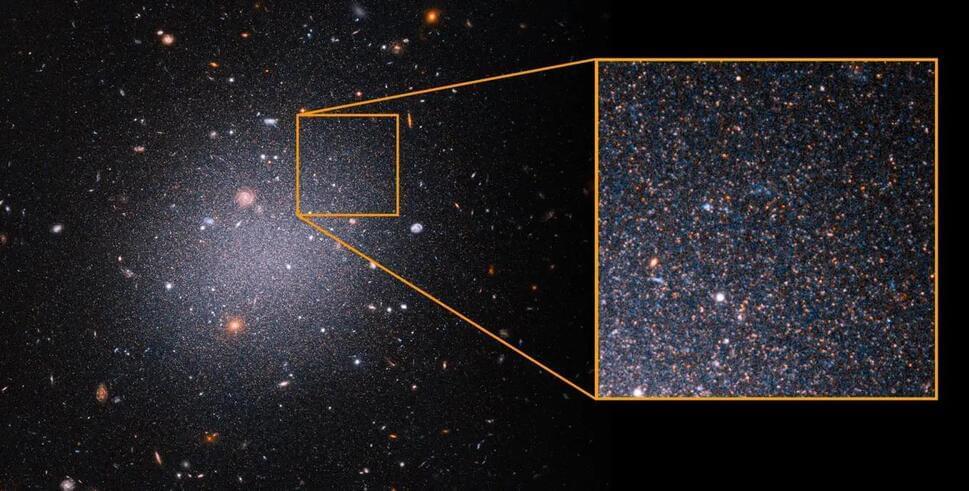

In October 2022, scientists detected the explosive death of a star 2.4 billion light-years away, an outburst brighter than any ever recorded.

As the star’s core collapsed into a black hole, it unleashed a gamma-ray burst, an event designated GRB 221009A, carrying photons with energies of up to 18 teraelectronvolts. Gamma-ray bursts are already the brightest explosions our Universe can produce, but GRB 221009A was an absolute record-smasher, earning it the nickname “the BOAT”: the Brightest Of All Time.

There is, however, something wrong with this picture, according to a team of astrophysicists led by Giorgio Galanti of the National Institute for Astrophysics (INAF) in Italy. According to cutting-edge models of the Universe, photons more energetic than 10 teraelectronvolts should not appear in the data from the Large High Altitude Air Shower Observatory (LHAASO), the instrument that made the detection.