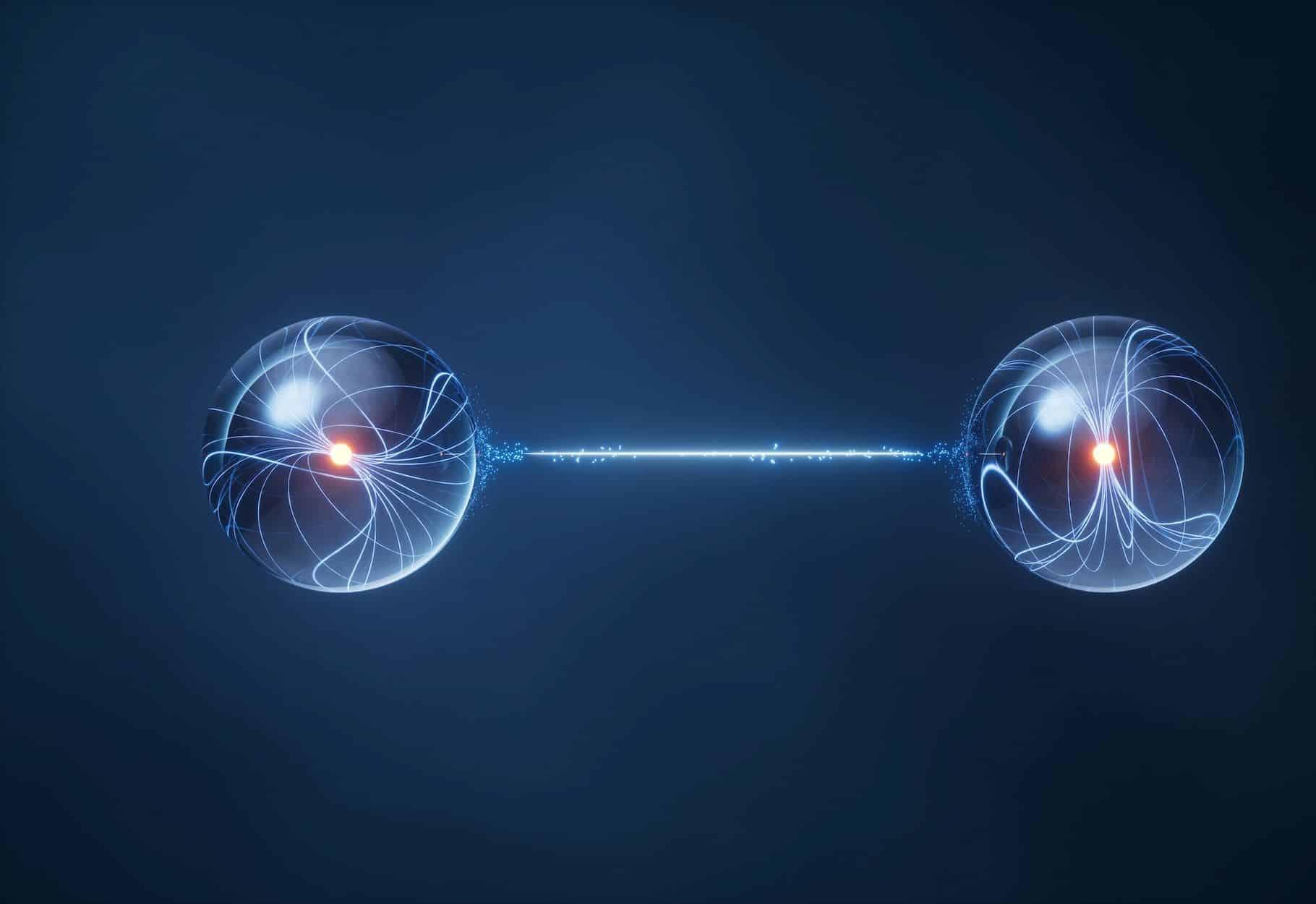

In the realm of quantum information distribution, sending a signal from point A to point B is like a baseball pitcher relaying a secret pitch call to the catcher. The pitcher has to hide the signal from opposing players and coaches, base runners, and even onlookers in the stands so that no one else cracks the code.

The pitcher can’t just stay in one spot or rely on the same finger pattern every time, because savvy opponents are constantly working to decipher any predictable sequence. If the signs are intercepted or misread, the batter gains an advantage, and the entire inning can unravel for the pitcher.

But what if there were a way for pitchers to bolster their signals by adding extra layers of “dimensionality” to each call, increasing the chances of delivering it correctly to the catcher no matter how many eyes are watching? What if, by incorporating more nuanced gestures such as a subtle shift in glove position or a specific tap on the mound, the pitcher could craftily conceal their intentions?