Year 2015

Researchers from MIT have created a 3D printer that is able to create objects out of glass.

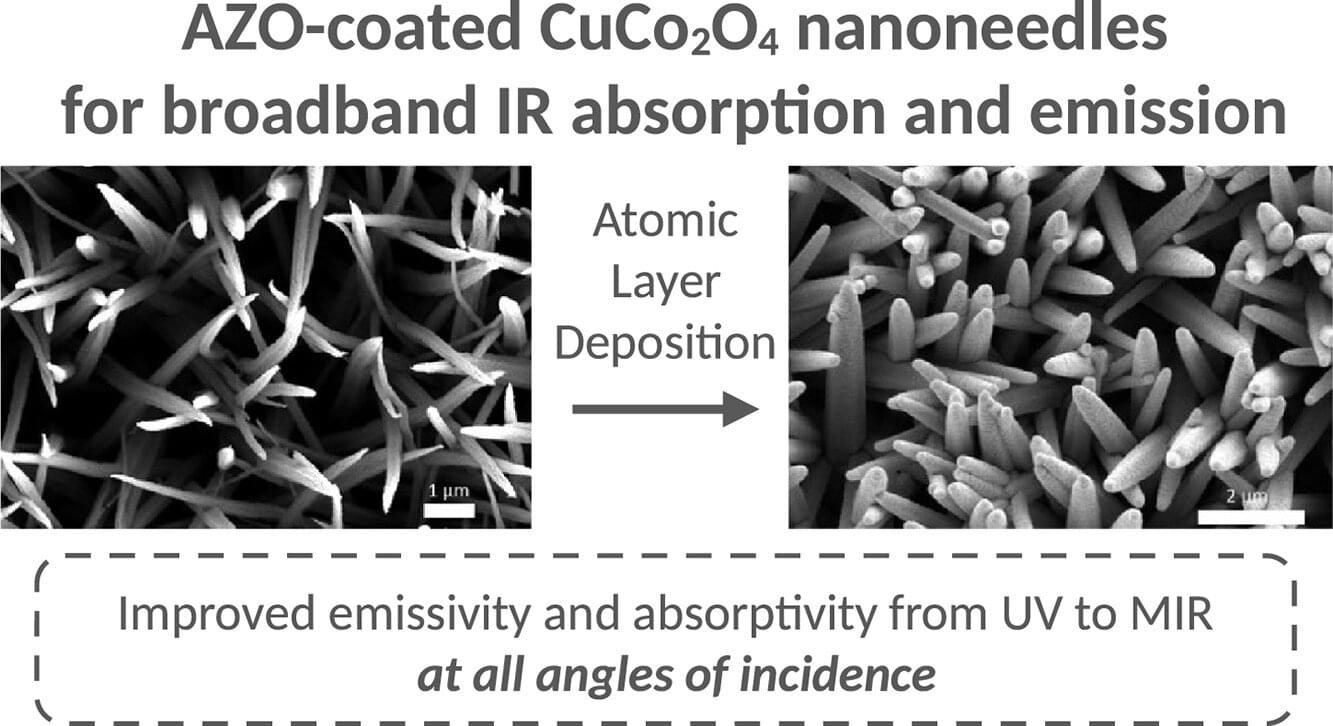

Using state-of-the-art equipment, researchers in the Thermophysical Properties of Materials group from the University of the Basque Country (EHU) have analyzed the capacity of ultra-black copper cobaltate nanoneedles to effectively absorb solar energy. They showed that the new nanoneedles have excellent thermal and optical properties and are particularly suited to absorbing energy. This will pave the way toward concentrated solar power in the field of renewable energies.

The tests were carried out in a specialized lab that has the capacity to undertake high temperature research. The results were published in the journal Solar Energy Materials and Solar Cells.

Concentrated solar power is a renewable energy of the future because it lends itself readily to thermal energy storage. Although it has historically been more expensive and complex than photovoltaic power, the technology has advanced considerably in recent years, and concentrated solar power plants are spreading to more and more countries as a resource for a sustainable future.

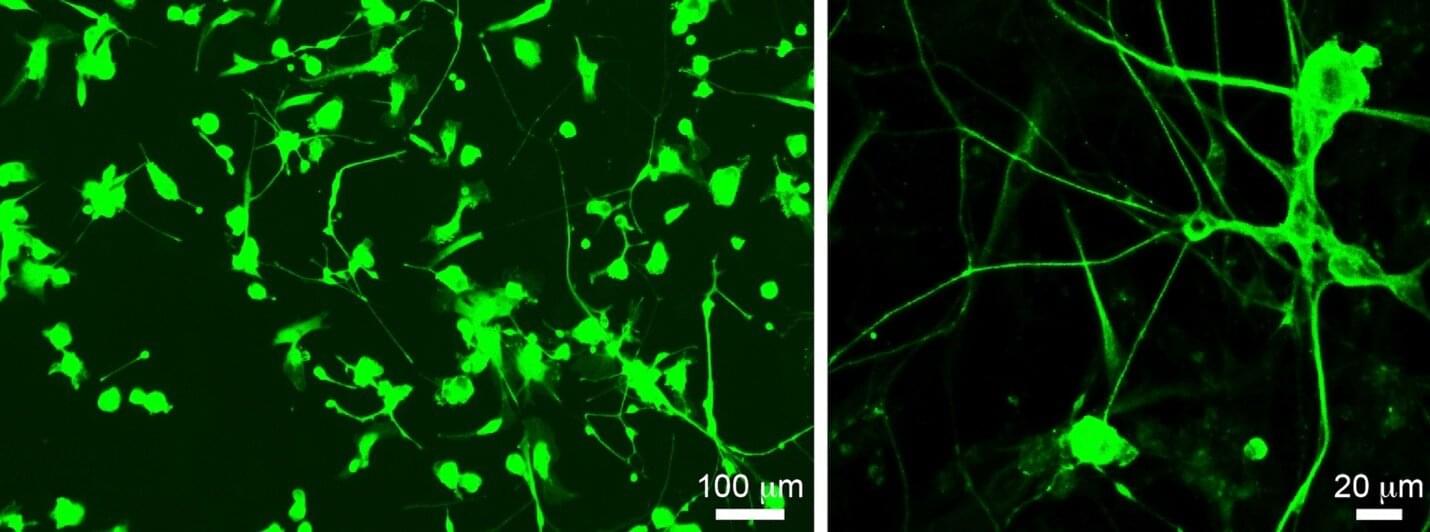

Spinal cord injury (SCI) remains a major unmet medical challenge, often resulting in permanent paralysis and disability with no effective treatments. Now, researchers at the University of California San Diego School of Medicine have harnessed bioinformatics to fast-track the discovery of a promising new drug for SCI. The results will also make it easier for researchers around the world to translate their discoveries into treatments. The findings are published in the journal Nature.

One of the reasons SCI results in permanent disability is that the neurons that form our brain and spinal cord cannot effectively regenerate. Encouraging neurons to regenerate with drugs offers a promising possibility for treating these severe injuries.

The researchers found that under specific experimental conditions, some mouse neurons activate a specific pattern of genes related to neuronal growth and regeneration. To translate this fundamental discovery into a treatment, the researchers used data-driven bioinformatics approaches to compare their pattern to a vast database of compounds, looking for drugs that could activate these same genes and trigger neurons to regenerate.

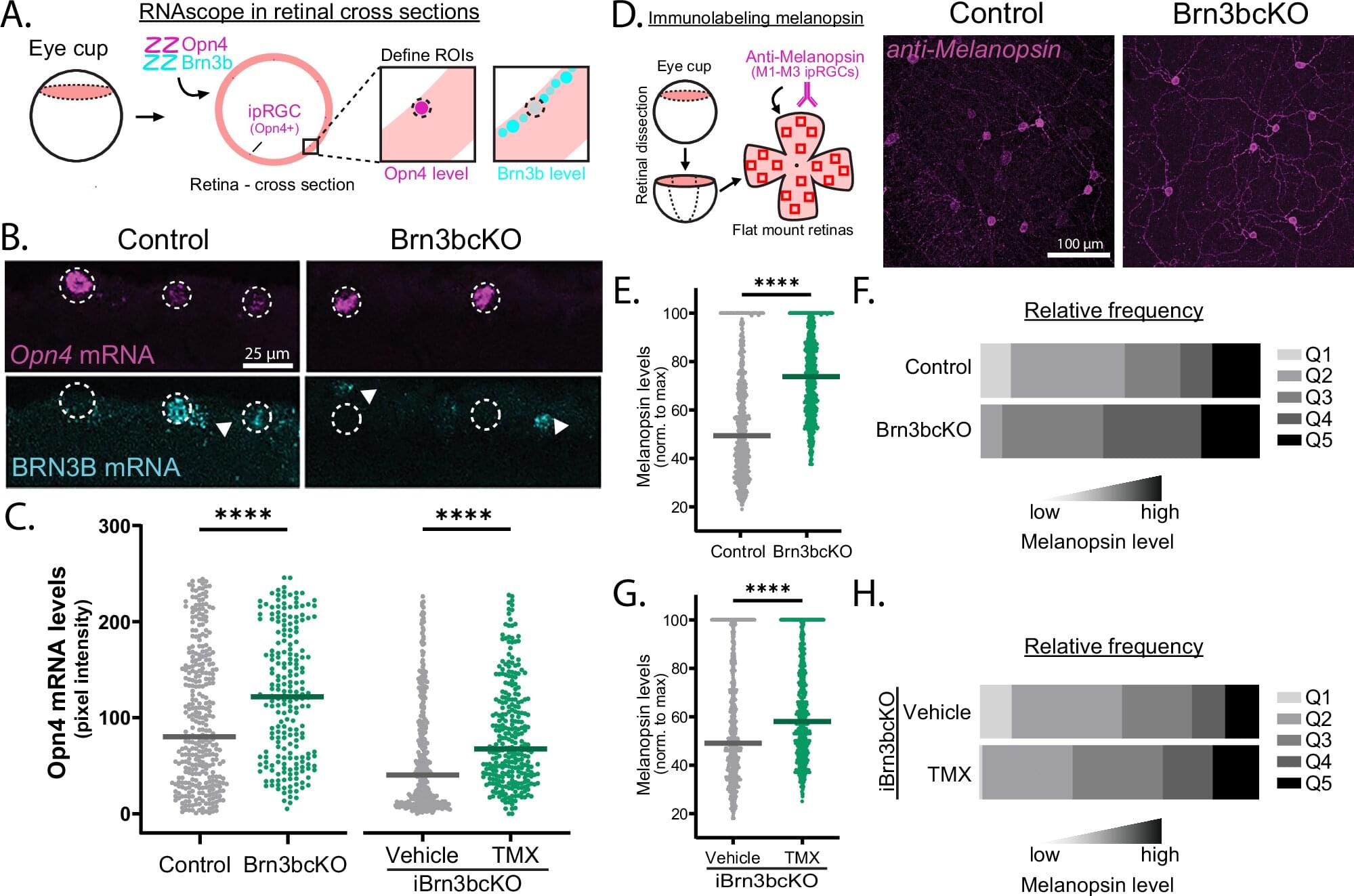

A recent study led by Tiffany Schmidt, Ph.D., associate professor of Ophthalmology and of Neurobiology in the Weinberg College of Arts and Sciences, has discovered previously unknown cellular mechanisms that shape neuron identity in retinal cells, findings that may improve the understanding of brain circuitry and disease. The study is published in Nature Communications.

Schmidt’s laboratory studies melanopsin-expressing, intrinsically photosensitive retinal ganglion cells (ipRGCs), a type of neuron in the retina that plays a key role in synchronizing the body’s internal clock to the daily light/dark cycle.

There are six subtypes of ipRGCs (M1 to M6), and each expresses a different amount of the protein melanopsin, which makes the ipRGCs directly sensitive to light. However, the mechanisms that give rise to each ipRGC subtype’s unique structural and functional features have remained elusive.

For 25 years, scientists have studied “SuperAgers”—people aged 80 and above whose memory rivals those decades younger. Research reveals that their brains either resist Alzheimer’s-related plaques and tangles or remain resilient despite having them.

These individuals maintain a youthful brain structure, with a thicker cortex and unique neurons linked to memory and social skills. Insights from their biology and behavior could inspire new strategies to protect cognitive health into late life.

For the past 25 years, researchers at Northwestern Medicine have been examining people aged 80 and older, known as “SuperAgers,” to uncover why their minds stay so sharp.

A research team led by Professor Heein Yoon in the Department of Electrical Engineering at UNIST has unveiled an ultra-small hybrid low-dropout regulator (LDO) that promises to advance power management in advanced semiconductor devices. The chip stabilizes voltage more effectively and filters out noise while taking up less space, opening new doors for high-performance system-on-chips (SoCs) used in AI, 6G communications, and beyond.

The new LDO combines analog and digital circuit strengths in a hybrid design, ensuring stable power delivery even during sudden changes in current demand—like when launching a game on your smartphone—and effectively blocking unwanted noise from the power supply.

What sets this development apart is its use of a cutting-edge digital-to-analog transfer (D2A-TF) method and a local ground generator (LGG), which work together to deliver exceptional voltage stability and noise suppression. In tests, it kept voltage ripple to just 54 millivolts during rapid 99 mA current swings and managed to restore the voltage to its proper level in just 667 nanoseconds. Plus, it achieved a power supply rejection ratio (PSRR) of −53.7 dB at 10 kHz with a 100 mA load, meaning it can effectively filter out nearly all noise at that frequency.
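To put the PSRR figure in perspective, the decibel value can be converted into a plain ratio. The sketch below assumes the usual voltage-ratio convention for PSRR (20·log10 of output ripple over supply ripple); the function name and the 10 mV supply ripple are illustrative choices, not values from the study.

```python
def db_to_amplitude_ratio(psrr_db: float) -> float:
    """Convert a PSRR in dB to an output/input ripple amplitude ratio.

    Assumes the 20*log10 voltage-ratio convention; a negative dB value
    means the supply ripple is attenuated at the output.
    """
    return 10 ** (psrr_db / 20)


psrr_db = -53.7                 # reported PSRR at 10 kHz with a 100 mA load
ratio = db_to_amplitude_ratio(psrr_db)

# Hypothetical supply ripple, chosen only to illustrate the scale.
supply_ripple_mv = 10.0
output_ripple_mv = supply_ripple_mv * ratio

print(f"Fraction of supply ripple reaching the output: {ratio:.5f}")
print(f"Attenuation factor: about {1 / ratio:.0f}x")
print(f"{supply_ripple_mv} mV of supply ripple -> {output_ripple_mv:.4f} mV at the output")
```

Under that convention, −53.7 dB corresponds to roughly 0.2% of the supply ripple amplitude passing through at 10 kHz, or an attenuation of several hundred times.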

Scientists have identified hundreds of genes that may increase the risk of developing Alzheimer’s disease, but the roles these genes play in the brain are poorly understood. This lack of understanding poses a barrier to developing new therapies. In a study published in the American Journal of Human Genetics, researchers at Baylor College of Medicine and the Jan and Dan Duncan Neurological Research Institute (Duncan NRI) at Texas Children’s Hospital offer new insights into how Alzheimer’s disease risk genes affect the brain.

“We studied fruit fly versions of 100 human Alzheimer’s disease risk genes,” said first author Dr. Jennifer Deger, a neuroscience graduate in Baylor’s Medical Scientist Training Program (M.D./Ph.D.), mentored by Drs. Joshua Shulman and Hugo Bellen.

“We developed fruit flies with mutations that ‘turned off’ each gene and determined how this affected the fly’s brain structure, function and stress resilience as the flies aged.”