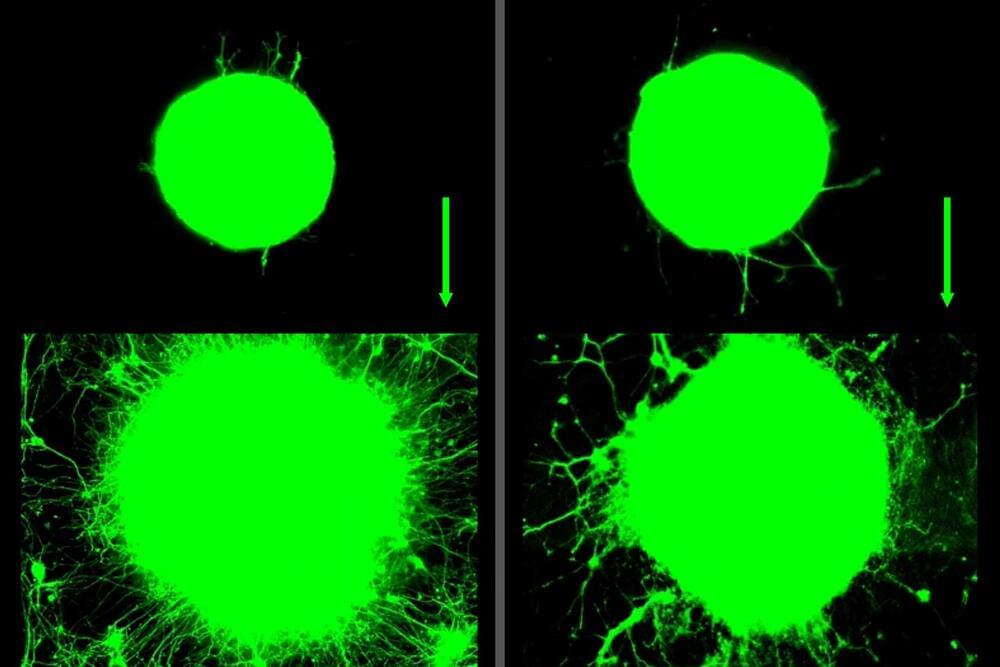

Summary: A recent study offers new insights into how brain regions coordinate during rest, using resting-state fMRI (rsfMRI) and neural recordings in mice. By comparing blood flow patterns with directly recorded neural activity, researchers found that some brain activity remains “invisible” in traditional rsfMRI scans. This hidden activity suggests that current brain imaging techniques may miss key elements of how neurons behave.

The findings, which may also apply to human studies, could refine our understanding of brain networks. Further research could improve how accurately brain activity is interpreted from imaging data.