Results of a trial revealed that a unique investigational drug formulation called Rhenium Obisbemeda (186RNL) more than doubled both median survival and median progression-free time compared with standard rates, with no dose-limiting toxic effects.

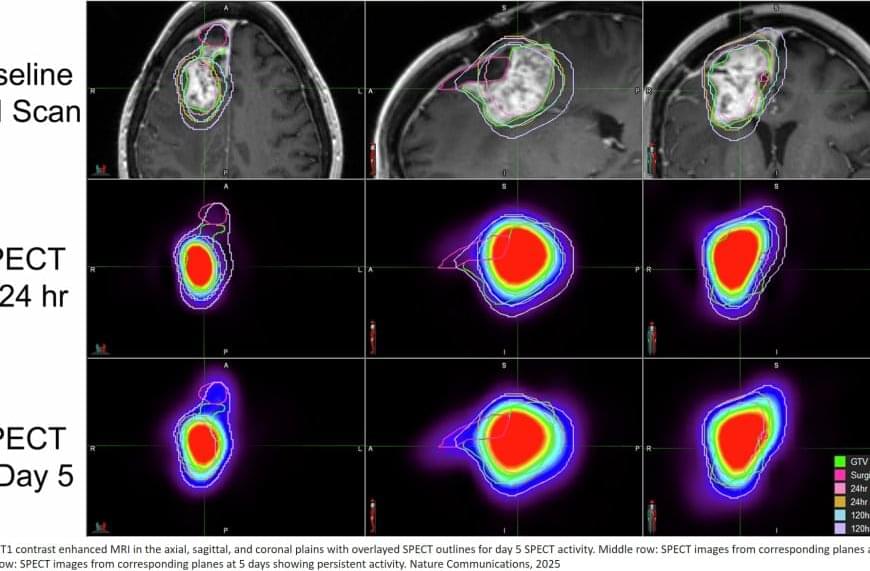

Rhenium Obisbemeda enables very high levels of specific activity of rhenium-186 (186Re), a beta-emitting radioisotope, to be delivered by tiny liposomes, which are artificial vesicles or sacs with at least one lipid bilayer. The researchers used a custom molecule known as BMEDA to chelate, or bind, 186Re and transport it into the interior of a liposome, where it is irreversibly trapped.

In this trial, known as the phase 1 ReSPECT-GBM trial, scientists set out to determine the maximum tolerated dose of the drug, as well as its safety, overall response rate, progression-free survival and overall survival.

The study enrolled 21 patients between March 5, 2015, and April 22, 2021, all of whom had previously failed one to three therapies. They were treated with the drug administered directly to the tumors using neuronavigation and convection catheters.

The researchers observed a significant improvement in survival compared with historical controls, especially in patients who received the highest absorbed doses. For doses greater than 100 gray (Gy), the unit of absorbed radiation dose, median survival and progression-free time were 17 months and 6 months, respectively.

Importantly, they did not observe any dose-limiting toxic effects, with most adverse effects deemed unrelated to the study treatment.