Scientists have taken a major step toward solving a long-standing mystery in particle physics, by finding no sign of the particle many hoped would explain it.

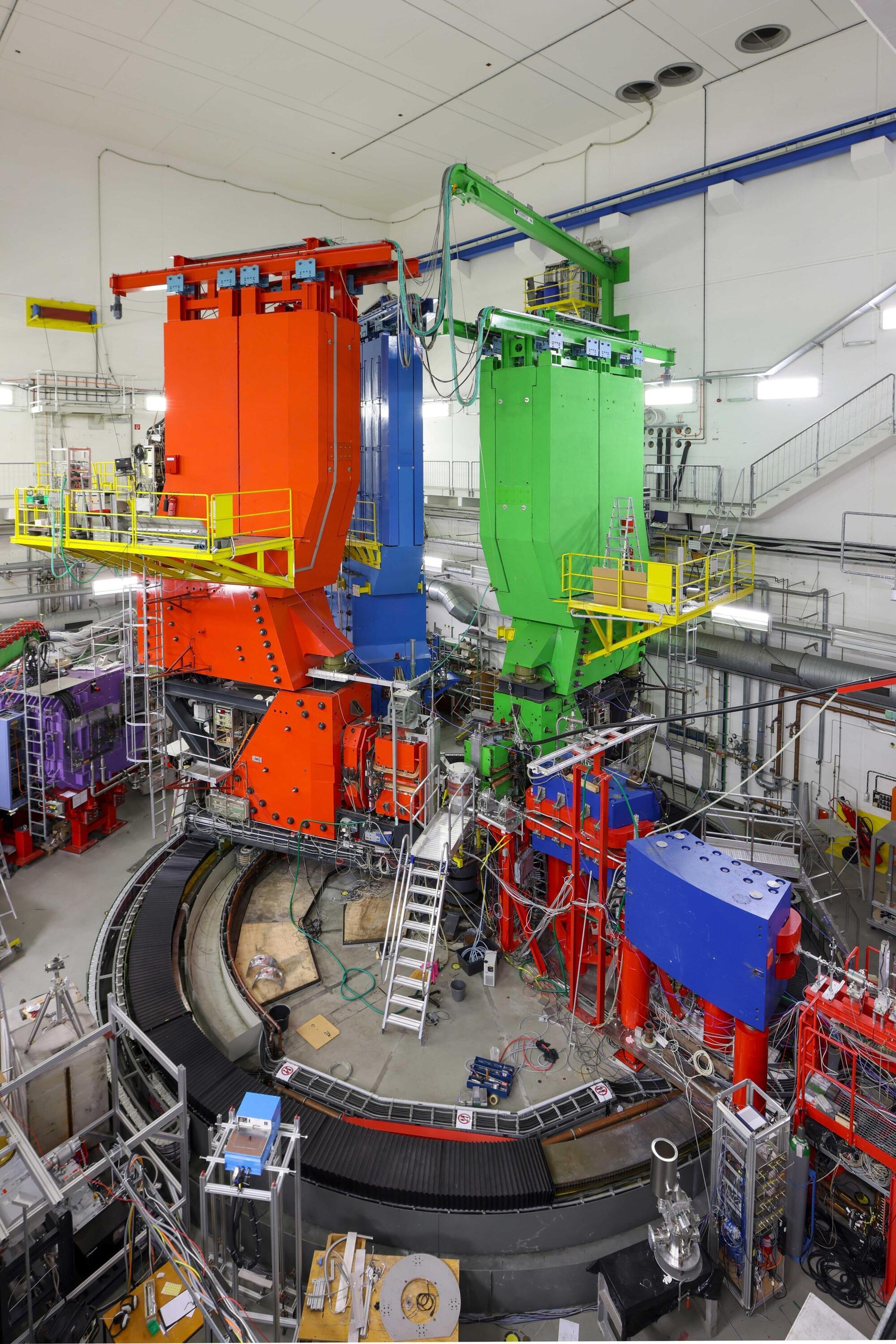

An international collaboration of scientists, including researchers from The University of Manchester, working on the MicroBooNE experiment at the U.S. Department of Energy’s Fermi National Accelerator Laboratory announced that they have found no evidence for a fourth type of neutrino, known as a sterile neutrino.

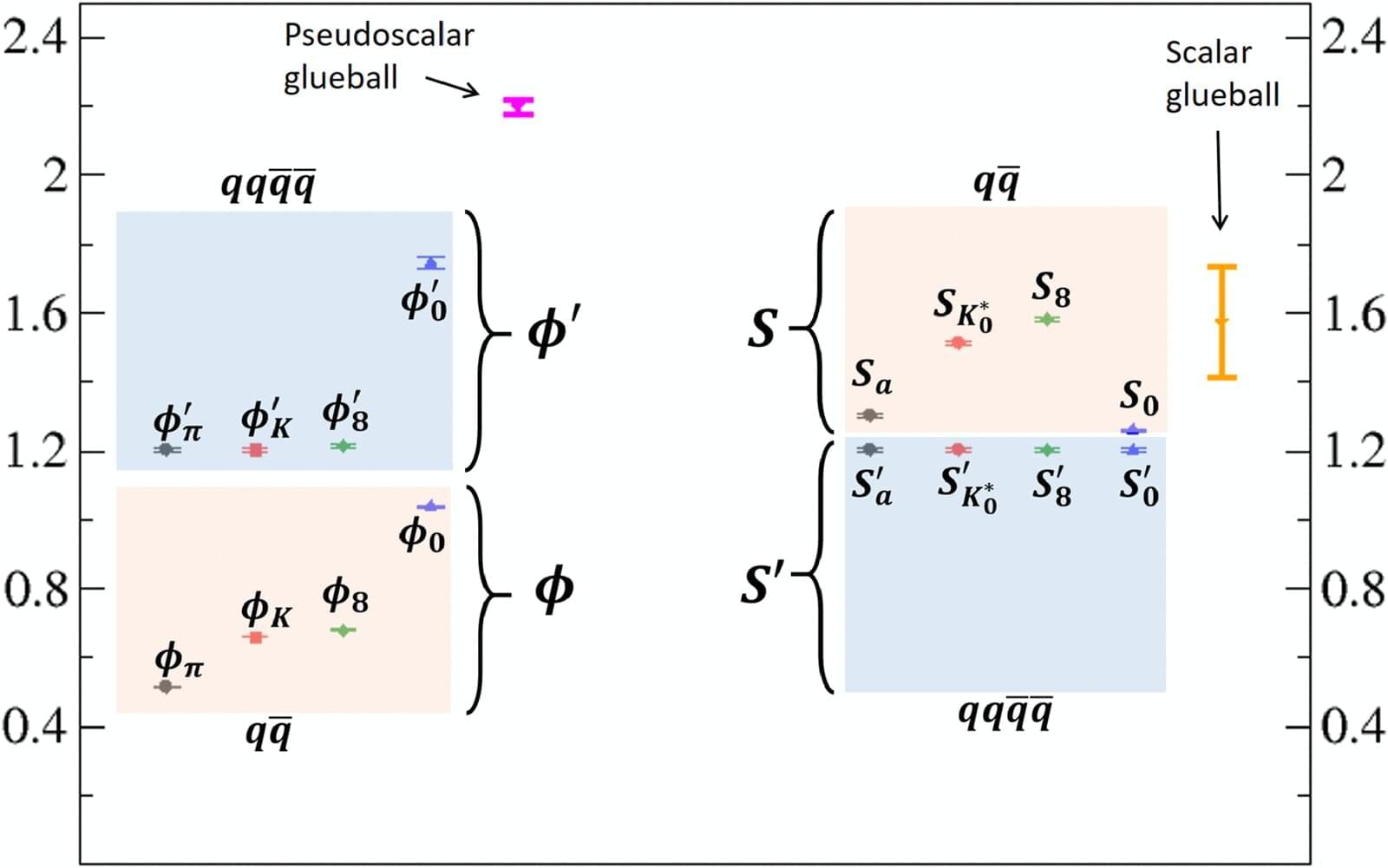

For decades, physics experiments have observed neutrinos, sub-atomic particles that are all around us, behaving in a way that doesn’t fit the Standard Model of particle physics. One of the most promising explanations was the existence of a sterile neutrino, so named because it is predicted not to interact with matter at all, whereas other neutrinos can. This means it could pass through the universe almost undetected.