Quantum superposition is a phenomenon in which a quantum system, such as a single particle, exists in a combination of two states at the same time until it is measured; a measurement always finds it in just one of those states.
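For a two-state system such as a qubit, superposition is usually written in Dirac notation. This is standard textbook notation added for illustration; the symbols $\alpha$, $\beta$, and $|\psi\rangle$ do not appear in the original note:

$$
|\psi\rangle = \alpha\,|0\rangle + \beta\,|1\rangle, \qquad |\alpha|^2 + |\beta|^2 = 1
$$

$$
P(0) = |\alpha|^2, \qquad P(1) = |\beta|^2
$$

For example, with $\alpha = \beta = 1/\sqrt{2}$, a measurement finds the system in $|0\rangle$ or $|1\rangle$ with probability $1/2$ each, and afterward the system is left in the state that was observed.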

🏭 Q: What upgrades does the grid need to handle increased energy demand by 2050? A: By 2050 the grid will need to carry three times its current energy throughput. That requires more power plants, wires, transformers, and substations to meet rising demand from EVs, heat pumps, and AI.

## Innovative Charging Solutions

🔋 Q: How do Electric Era’s charging stations reduce grid capacity requirements? A: Electric Era’s battery-backed charging stations buffer the charging load, which cuts the required grid capacity by 70% and allows faster deployment in better locations, such as retail amenities and gas station parking lots.
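To see why a battery buffer shrinks the grid connection, consider a minimal peak-shaving sketch. It assumes a single hypothetical 350 kW charger, a grid connection capped at 105 kW (30% of the peak, i.e. a 70% reduction), a 200 kWh battery, lossless charging, and 15-minute time steps; none of these figures come from Electric Era, and `simulate_buffered_site` is an illustrative helper, not a real API. The grid supplies demand up to its cap, the battery covers anything above it, and spare grid capacity recharges the battery between sessions.

```python
def simulate_buffered_site(demand_kw, grid_limit_kw, battery_kwh, step_h=0.25):
    """Simulate a hypothetical battery-buffered charging site.

    Returns (grid draw per step in kW, unserved energy in kWh). The grid
    supplies up to grid_limit_kw; the battery covers demand above that cap
    and recharges from spare grid capacity. Charging is assumed lossless.
    """
    soc_kwh = battery_kwh  # assume the battery starts full
    grid_draw_kw = []
    unserved_kwh = 0.0
    for load_kw in demand_kw:
        if load_kw > grid_limit_kw:
            # Battery covers the excess, limited by the energy it holds.
            needed_kwh = (load_kw - grid_limit_kw) * step_h
            supplied_kwh = min(needed_kwh, soc_kwh)
            soc_kwh -= supplied_kwh
            unserved_kwh += needed_kwh - supplied_kwh
            grid_draw_kw.append(grid_limit_kw)
        else:
            # Spare grid capacity tops the battery back up.
            recharge_kwh = min((grid_limit_kw - load_kw) * step_h, battery_kwh - soc_kwh)
            soc_kwh += recharge_kwh
            grid_draw_kw.append(load_kw + recharge_kwh / step_h)
    return grid_draw_kw, unserved_kwh


# One hypothetical 350 kW charger, sampled every 15 minutes (kW).
demand = [350, 350, 0, 0, 350, 0, 0, 0]
grid, unserved = simulate_buffered_site(demand, grid_limit_kw=105, battery_kwh=200)
print(max(grid), unserved)  # → 105 0.0
```

As long as the battery can refill between sessions, the grid connection can be sized well below the charger's peak; with a battery that is too small for the duty cycle, the function reports unserved energy instead.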

⏱️ Q: What energy-management capabilities do Electric Era’s charging stations offer? A: Electric Era’s stations support time-of-use charging and virtual power plant capabilities, storing energy upstream and passing the best time-of-use pricing on to customers, which makes them more efficient and cost-effective.

## Energy Storage and Distribution

☀️ Q: How can the “duck curve” be addressed? A: The duck curve (net demand that dips at midday when solar output peaks, then ramps up steeply in the evening) can be addressed by building more energy storage to hold the excess midday electricity for later use, such as the 10–12 GWh of storage Tesla deployed last quarter and Electric Era’s smaller, more localized installations.
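A minimal sketch of how storage reshapes a duck curve, assuming hourly steps, a lossless battery, and a simple threshold rule: charge when net load (demand minus solar) falls below `low_mw`, and discharge when it rises above `high_mw`. The profile, thresholds, battery size, and the helper names `flatten_duck` and `steepest_ramp` are all hypothetical; real grid-scale dispatch relies on forecasting and optimization rather than fixed thresholds.

```python
def flatten_duck(net_load_mw, capacity_mwh, power_mw, low_mw, high_mw):
    """Threshold-based storage dispatch over hourly net load.

    Hypothetical, lossless model: with 1-hour steps, a charge or discharge
    of X MW moves X MWh into or out of storage.
    """
    stored_mwh = 0.0
    adjusted = []
    for load in net_load_mw:
        if load < low_mw:
            # Midday "belly": absorb surplus solar, raising net load toward low_mw.
            charge = min(low_mw - load, power_mw, capacity_mwh - stored_mwh)
            stored_mwh += charge
            adjusted.append(load + charge)
        elif load > high_mw:
            # Evening "neck": release stored energy to cut the ramp and peak.
            discharge = min(load - high_mw, power_mw, stored_mwh)
            stored_mwh -= discharge
            adjusted.append(load - discharge)
        else:
            adjusted.append(load)
    return adjusted


def steepest_ramp(profile):
    """Largest hour-to-hour increase in net load."""
    return max(b - a for a, b in zip(profile, profile[1:]))


# Illustrative net load (MW) from late morning into the evening.
net_load = [20, 10, 4, 4, 12, 30, 34, 22]
flat = flatten_duck(net_load, capacity_mwh=20, power_mw=10, low_mw=12, high_mw=24)
print(flat)  # → [20, 12, 12, 12, 12, 24, 24, 22]
print(steepest_ramp(net_load), steepest_ramp(flat))  # → 18 12
```

In this toy profile the battery lowers the evening peak from 34 MW to 24 MW and the steepest one-hour ramp from 18 MW to 12 MW, using only energy it absorbed during the midday dip.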

🔌 Q: What is the transformer scarcity problem, and how can it be addressed? A: Transformers are scarce and strategically important, so they are being hoarded, which worsens grid infrastructure bottlenecks. According to Quincy from Electric Era, a strategic transformer reserve is needed to address this problem.

## Key Insights

### Grid Infrastructure Challenges

🔌 The 130-year-old grid is antiquated and deteriorating, which makes upgrading it for higher energy demand expensive and difficult.

TheAIGRID

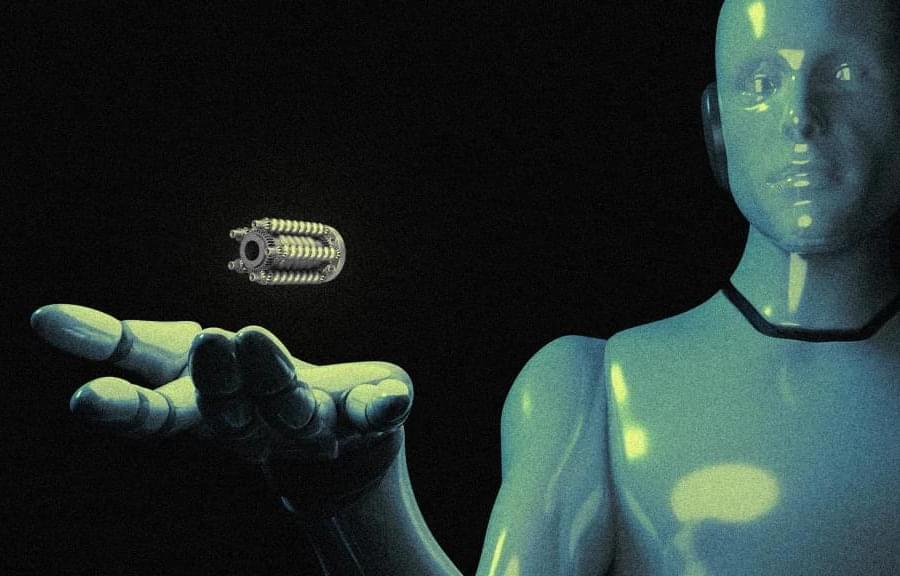

What if doing your chores were as easy as flipping a switch? In this talk and live demo, roboticist and founder of 1X Bernt Børnich introduces NEO, a humanoid robot designed to help you out around the house. Watch as NEO shows off its ability to vacuum, water plants and keep you company, while Børnich tells the story of its development — and shares a vision for robot helpers that could free up your time to focus on what truly matters. (Recorded at TED2025 on April 8, 2025)

Watch more: https://go.ted.com/berntbornich.