A critical MongoDB flaw, CVE-2025-14847, is under active exploitation, allowing unauthenticated data leaks from 87,000+ vulnerable servers worldwide.

In December 2024, the popular Ultralytics AI library was compromised, installing malicious code that hijacked system resources for cryptocurrency mining. In August 2025, malicious Nx packages leaked 2,349 GitHub, cloud, and AI credentials. Throughout 2024, ChatGPT vulnerabilities allowed unauthorized extraction of user data from AI memory.

The result: 23.77 million secrets were leaked through AI systems in 2024 alone, a 25% increase from the previous year.

Here’s what these incidents have in common: The compromised organizations had comprehensive security programs. They passed audits. They met compliance requirements. Their security frameworks simply weren’t built for AI threats.

A Lithuanian national has been arrested for his alleged involvement in infecting 2.8 million systems with clipboard-stealing malware disguised as the KMSAuto tool for illegally activating Windows and Office software.

The 29-year-old man was extradited from Georgia to South Korea following a request coordinated through Interpol.

According to the Korean National Police Agency, the suspect used KMSAuto to lure victims into downloading a malicious executable that scanned the clipboard for cryptocurrency addresses and replaced them with ones controlled by the attacker, a technique known as ‘clipper malware’.

A ransomware attack hit Oltenia Energy Complex (Complexul Energetic Oltenia), Romania’s largest coal-based energy producer, on the second day of Christmas, taking down its IT infrastructure.

The 40-year-old Romanian energy provider employs over 19,000 people, operates four power plants with an installed production capacity of 3,900 MW, and provides about 30% of Romania’s electricity.

“As a result of the attack, some documents and files were encrypted, and several computer applications became temporarily unavailable, including ERP systems, document management applications, the company’s email service, and website,” the company said over the weekend.

In a Science Immunology Review from earlier this year, researchers discuss how interactions between the nervous and immune systems could impact neurological disorders and allergy-related behaviors like food avoidance.

The nervous and type 2 immune systems regulate each other via cytokines and neurotransmitters, suggesting previously unidentified therapeutic avenues.

Liu et al. report chromosome-level genomes of 11 species across 10 Polygonaceae genera (including four previously published genomes), which encompass diverse habitats. Integrating genomic and transcriptomic analyses, this study provides insights into the evolutionary adaptation strategies of Polygonaceae to thrive in various habitats.

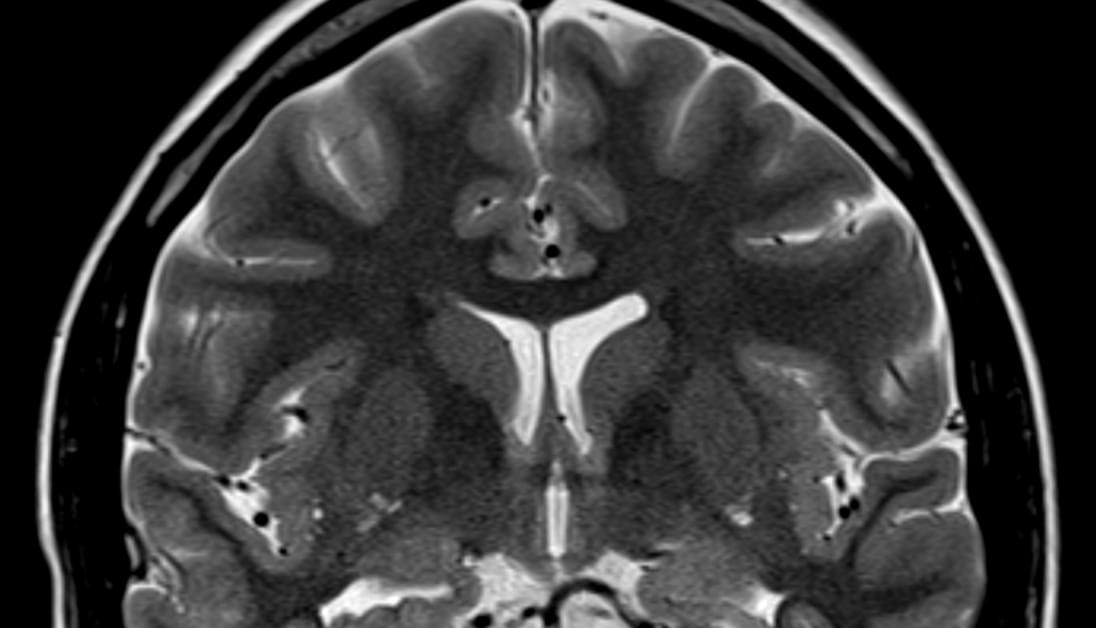

Getting older means losing things. Some are fine, like any f**ks you have left to give or your tolerance for cheap tequila. Others, like the ability to follow a conversation in a loud room, hit harder.

But scientists now think there’s a way to fight back. And it might start at a piano bench.

Researchers publishing in PLOS Biology found that older adults who have played music for decades have brains that function more like those of someone half their age, at least when it comes to understanding speech in loud environments. In brain scans, they showed cleaner, more focused activity while listening to spoken syllables buried in background noise. Their brains weren’t scrambling. They already knew what to do.