By Chuck Brooks

Dear Friends and Colleagues, in this issue of the Security and Tech Insights newsletter, I focus on risk management and expecting the unexpected, with particular attention to potential Black Swan and Gray Swan events.

Critically ill patients with sepsis who are given statins may be more likely to survive, new research suggests.

Researchers set out to explore whether the cholesterol-busting drugs may bring additional benefits for patients.

The new study examined data on sepsis patients who received statins during a stay in intensive care and compared them with similar patients who did not receive statins.

As artificial intelligence (AI) tools shake up the scientific workflow, Sam Rodriques dreams of a more systemic transformation. His start-up company, FutureHouse in San Francisco, California, aims to build an ‘AI scientist’ that can command the entire research pipeline, from hypothesis generation to paper production.

Today, his team took a step in that direction, releasing what it calls the first true ‘reasoning model’ specifically designed for scientific tasks. The model, called ether0, is a large language model (LLM) that’s purpose-built for chemistry, which it learnt simply by taking a test of around 500,000 questions. Following instructions in plain English, ether0 can spit out formulae for drug-like molecules that satisfy a range of criteria.

This wireless desk can power almost your entire PC: no cables or batteries needed.

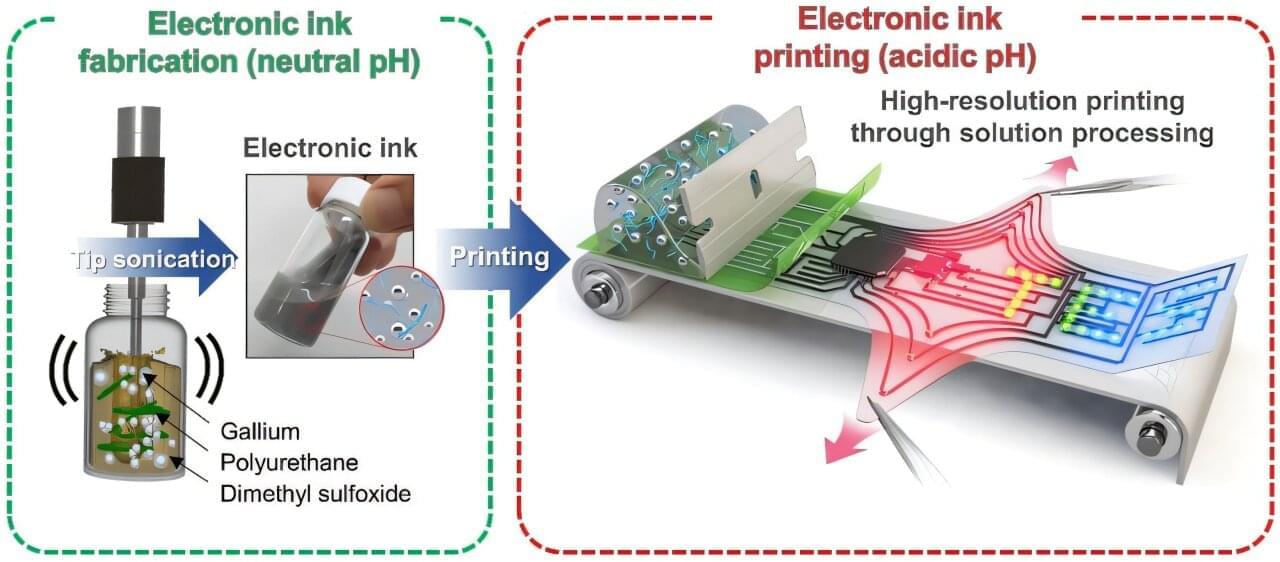

Variable-stiffness electronics are at the forefront of adaptive technology, offering the ability for a single device to transition between rigid and soft modes depending on its use case. Gallium, a metal known for its high rigidity contrast between solid and liquid states, is a promising candidate for such applications. However, its use has been hindered by challenges including high surface tension, low viscosity, and undesirable phase transitions during manufacturing.

In a global first, US scientists have demonstrated quantum encryption in a live nuclear reactor using a quantum key distribution (QKD) approach.