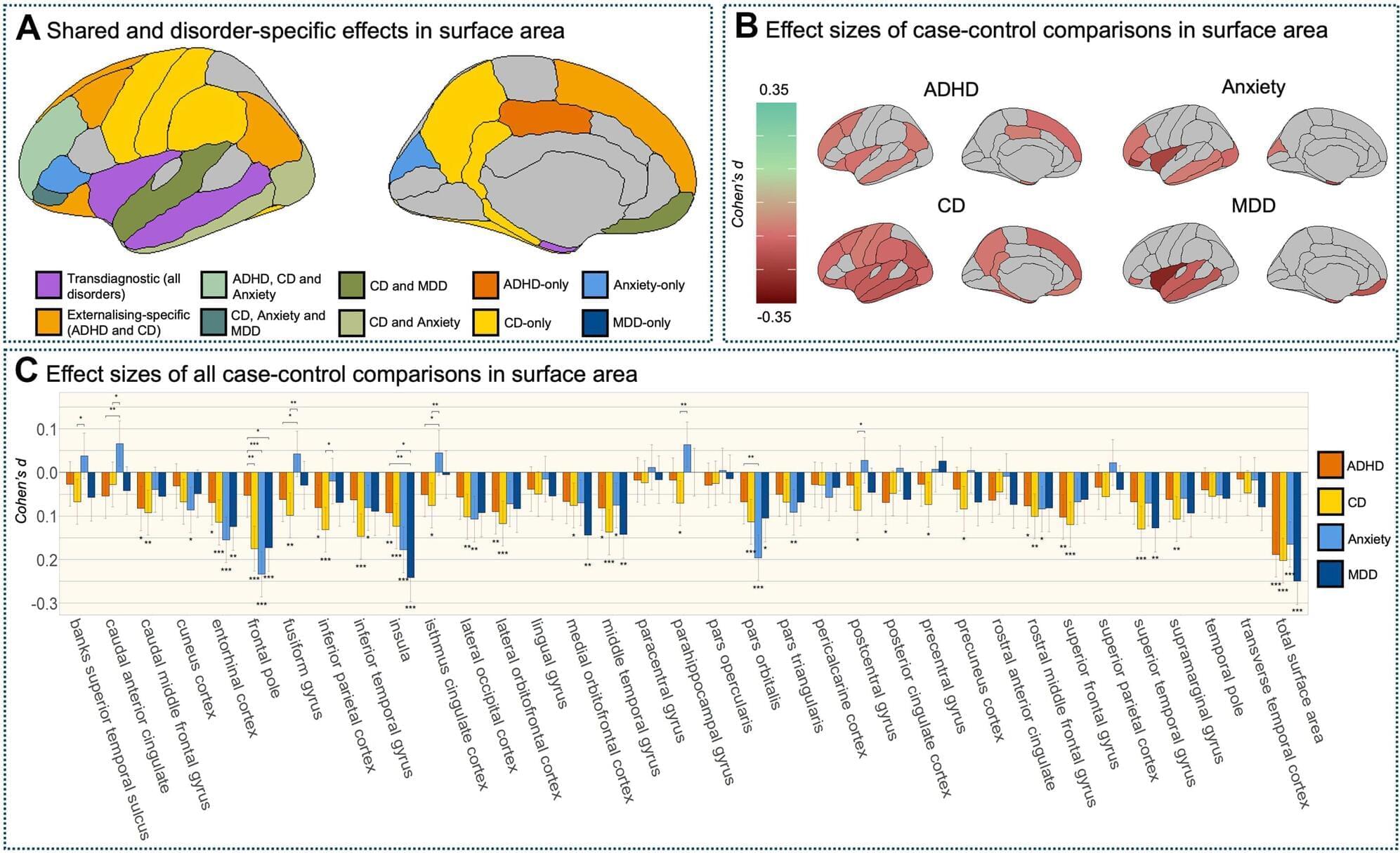

An international study—the largest of its kind—has uncovered similar structural changes in the brains of young people diagnosed with anxiety disorders, depression, ADHD and conduct disorder, offering new insights into the biological roots of mental health conditions in children and young people.

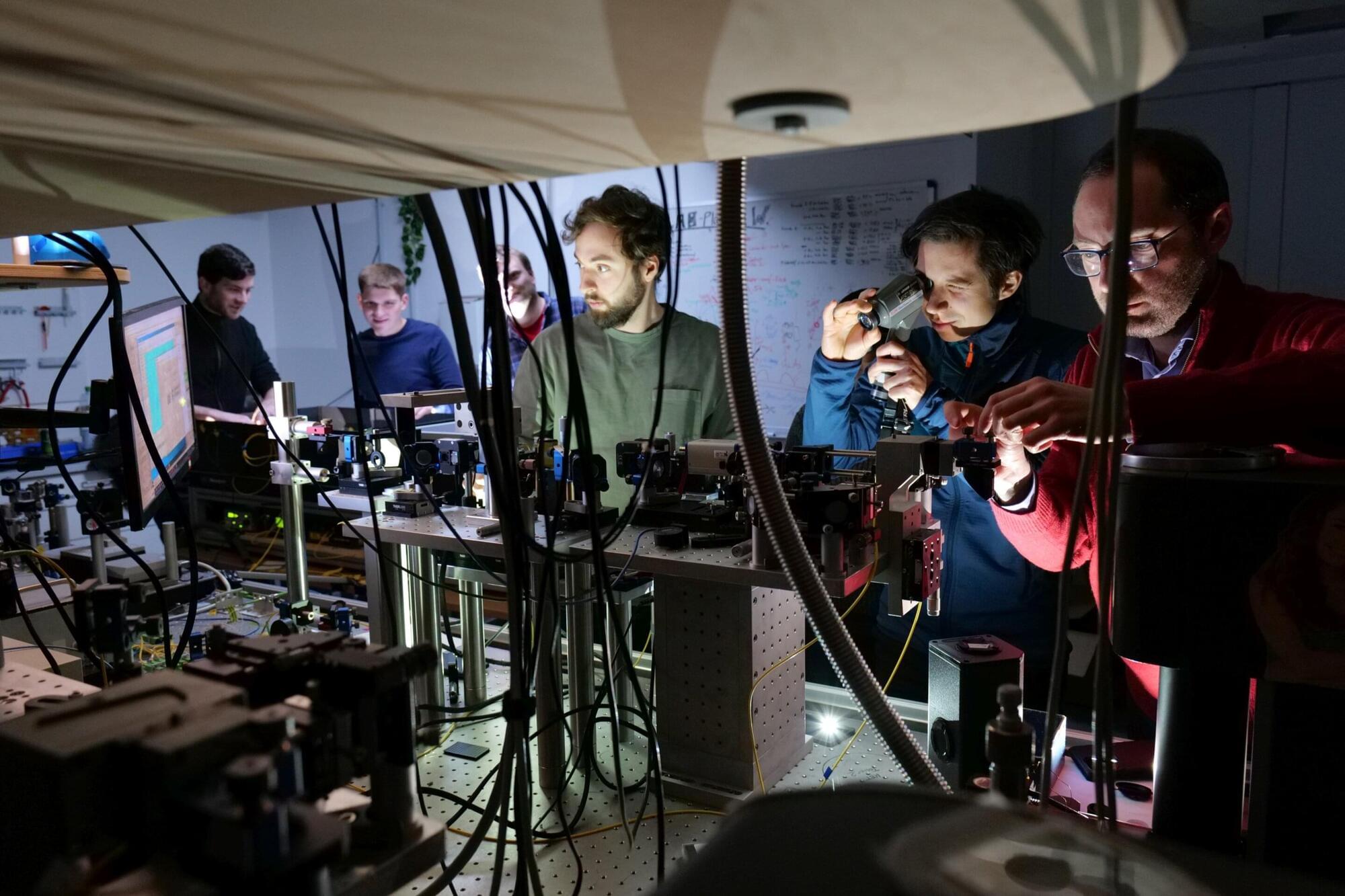

The study was led by Dr. Sophie Townend, a researcher in the Department of Psychology at the University of Bath, and is published today in the journal Biological Psychiatry. It analyzed brain scans from almost 9,000 children and adolescents, around half of whom had a diagnosed mental health condition, to identify both shared and disorder-specific alterations in brain structure across four of the most common psychiatric disorders in childhood and adolescence.

Among several key findings, the researchers identified common brain changes across all four disorders—notably, a reduced surface area in regions critical for processing emotions, responding to threats and maintaining awareness of bodily states.