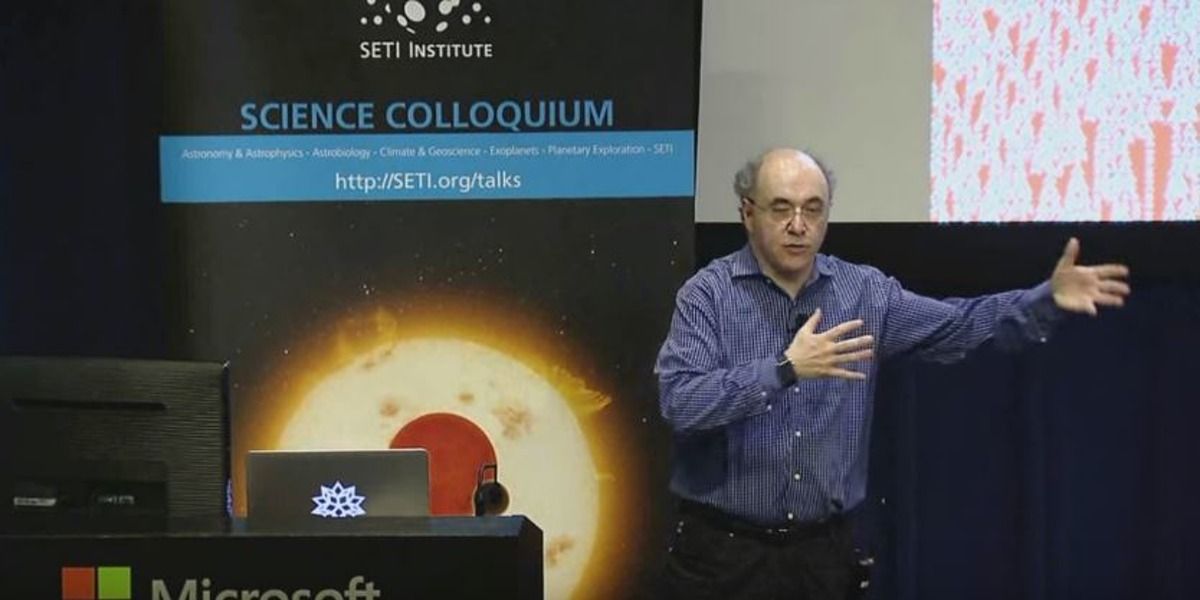

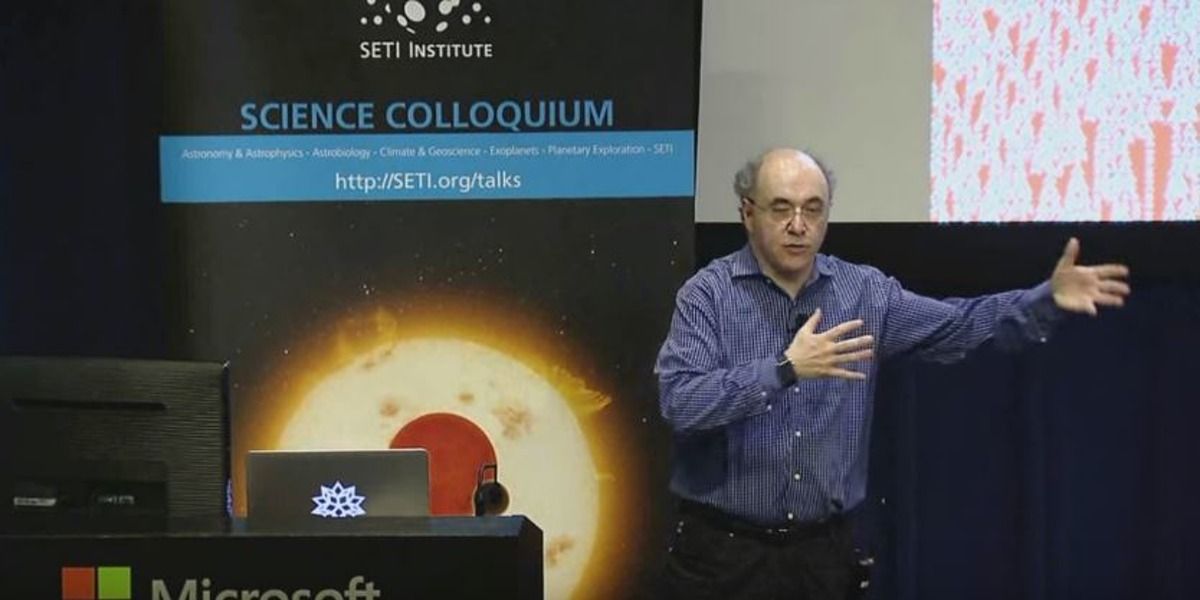

Stephen Wolfram, the inventor of the mathematical programming system Wolfram Language, thinks there might be intelligent life, of a sort, in the digits of pi. He spoke recently at the SETI Institute about what his “principle of computational equivalence” means for non-human intelligence — check out the heady hour-and-a-half lecture below.

The key thread running through his concept is that simple rules underpin complex behavior. For Wolfram, the pigmentation patterns on a mollusk shell, for example, aren’t necessarily the outcome of deliberate evolutionary forces. “I think the mollusk is going out into the computational universe, finding a random program, and running it and printing it on its shell,” Wolfram says in the lecture. “If I’m right, the universe is just like an elaborate version of the digits of pi.” (There is some debate, of course, over just how right Wolfram is — though you won’t really get that from the lecture.)

To show how this idea of simplicity begetting complexity relates to aliens, Wolfram draws on the end of Carl Sagan's novel *Contact*. Spoilers (for a book): after communicating with the alien intelligence, astronomer Ellie Arroway finds an image of a circle hidden in the digits of pi. She takes it as a sign of intelligence baked into the universe. Because the digits go on forever and appear to be random (mathematicians widely conjecture, though no one has proven, that every finite string of digits shows up somewhere in pi), this would also mean that within pi "combinatorially there exists the works of Shakespeare and any possible picture of any possible circle," Wolfram says.