“What would you do right now if you wanted to read something stored on a floppy disk? On a Zip drive? In the same way, the web browsers of the future might not be able to open today’s webpages and images …”

Air combat veterans proved to be no match for an artificial intelligence developed by Psibernetix. ALPHA has proven to be “the most aggressive, responsive, dynamic and credible AI seen to date.”

Retired United States Air Force Colonel Gene Lee recently went up against ALPHA, an artificial intelligence developed by a University of Cincinnati doctoral graduate. The contest? A high-fidelity air combat simulator.

And the Colonel lost.

A group of scientists has confirmed the existence of a pear-shaped nucleus. Not only does this violate some laws of physics, it also suggests that time travel is not possible.

A new form of atomic nucleus has been confirmed by scientists in a recent study published in the journal Physical Review Letters. The pear-shaped, asymmetrical nucleus, first observed in 2013 by researchers from CERN in the isotope Radium-224, is also present in the isotope Barium-144.

This is of monumental importance because most fundamental theories in physics are based on symmetry. This recent confirmation shows that it is possible to have a nucleus that has more mass on one side than the other. “This violates the theory of mirror symmetry and relates to the violation shown in the distribution of matter and antimatter in our Universe,” said Marcus Scheck of University of the West of Scotland, one of the authors of the study.

The Digital Economy: Postnormal?

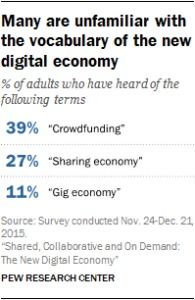

I’m curious if readers of this blog, futurists or otherwise, were as surprised as I was to see the Pew Research Center report last month about how, to the average American, some of the biggest digital economy companies and concepts are still relatively unknown. A few highlights:

- 61% of Americans have never heard of the term “crowdfunding.”

- 73% are not familiar with the term “sharing economy.”

- 89% are not familiar with the term “gig economy.”

This was a reminder to me that the digital economy is still far from mainstream, and I think we need to keep that in mind when we analyze new technologies. But there’s another reason the report caught my eye: I think it’s another good example of a human ecosystem, in this case the digital economy, exhibiting signs of Postnormal Times (PNT). The digital economy is famous for making our lives easier, cutting out various middlemen and red tape to get what the consumer actually wants: a bed, not a grand lobby; a ride, not a car. This seems very normal in terms of the evolution of consumer needs.

But according to Sardar and Sweeney, “many ‘normal’ systems will not continue to operate ‘normally’ in PNT—sooner or later, the 3Cs will have a direct or indirect impact on them.” It may sound somewhat doomsday, but it’s not. PNT is nothing to fear; it is a way of observing the world. Evidence of the 3Cs is just an indicator that a system is evolving and changing, and it lets us know that our responses need to change in kind.

One of those Cs stands for “contradictions.” Though contradiction is of course ‘normal,’ the relatively small slice of consumers being served by Uber and AirBNB doesn’t mesh with the massive valuations (AirBNB is worth $30 billion) associated with such startups. This is where my PNT radar goes off.

Here’s another set of interesting facts that show how low consumer engagement with digital economy services actually is:

- 6% of Americans have ordered groceries online from a local store and had them delivered to their home (Instacart, Peapod or Fresh Direct).

- 4% have hired someone online to do a task or errand (TaskRabbit, Fiverr or Amazon Mechanical Turk).

- 2% have rented clothing or other products for a short period of time online (sites like Rent the Runway).

The digital economy is still maturing, and certain aspects of it (Craigslist, eBay) are widespread. But I think this contradiction between the high value of the startups and low familiarity with these services is worth considering as a signal of the postnormal, which means being prepared to explore new worldviews that can better meet the needs of the modern digital consumer.

Human civilization has always been a virtual reality. At the onset of culture, which was propagated through the proto-media of cave painting, the talking drum, music, fetish art making, oral tradition and the like, Homo sapiens began a march into cultural virtual realities, a march that would span the entirety of the human enterprise. We don’t often think of cultures as virtual realities, but there is no more apt descriptor for our widely diverse sociological organizations and interpretations than the metaphor of the “virtual reality.” Indeed, the virtual reality metaphor encompasses the complete human project.

Virtual reality researchers Jim Blascovich and Jeremy Bailenson write in their book Infinite Reality that “[Cave art] is likely the first animation technology,” providing an early means of what they refer to as “virtual travel.” You are in the cave, but the media in that cave, the dynamic-drawn, fire-illuminated art, represents the plains and animals outside: a completely different environment, one facing entirely the opposite direction, beyond the mouth of the cave. When surrounded by cave art, alive with movement from flickering torches, you are at once inside the cave itself whilst the media experience surrounding you encourages you to indulge in fantasy, and to mentally simulate an entirely different environment. Blascovich and Bailenson suggest that in terms of the evolution of media technology, this was the very first immersive VR. Both the room and helmet-sized VRs used in the present day are but a sophistication of this original form of media VR tech.

It’s painful to bear views that make many think I’m an imbecile and dislike me. So please, if anybody has a rational argument why any of this is wrong, I beg to be enlightened. I’ve set up a diagram for the purpose that will let you add your criticism exactly where it is pertinent. https://tssciencecollaboration.com/graphtree/Are%20Vaccines%20Safe/406/4083

(1) The National Academy’s Reviews Of Vaccine Safety

The Institute of Medicine of the National Academies has provided several multi-hundred page surveys studying the safety of vaccines, but rather than reassuring, these itemize some iatrogenic conditions being caused, and pronounce the scientific literature inadequate to say whether most others are. The 2011 Institute of Medicine (IOM) Review[1] looked at 146 vaccine-condition pairs for causality, reporting:

- 14 for which the evidence is said to convincingly support causality, the vaccine is causing the condition.

- 4 where the evidence is said to favor acceptance.

- 5 where the evidence is said to favor rejection, including MMR causing autism.

- 123 where the evidence is said insufficient to evaluate.

The 2003 IOM Review on multiple vaccines said[2]:

“The committee was unable to address the concern that repeated exposure of a susceptible child to multiple immunizations over the developmental period may also produce atypical or non-specific immune or nervous system injury that could lead to severe disability or death (Fisher, 2001). There are no epidemiological studies that address this.”

and:

“the committee concludes that the epidemiological and clinical evidence is inadequate to accept or reject a causal relationship between multiple immunization and an increased risk of allergic disease, particularly asthma.”

- None of the IOM Safety Reviews[1][2][3][4] addressed the aluminum (for example whether the aluminum is causing autism), or mentioned contaminants, or discussed animal models although they had concluded as just quoted there is generally no epidemiological or clinical data worth preferring.

(2) The Aluminum.

Alum was added to vaccines back in the 1920s, with no test of parenteral toxicity until recently[5], because it prods the immature immune system out of its normal operating range.[6] Maybe they figured aluminum is common in the environment, but injection bypasses half a dozen evolved sequential filters that normally keep it out of circulatory flow during development. Vaccines put hundreds of times as much aluminum into infants’ blood as they would otherwise get, and in an unnatural form that is hard for the body to remove.[7][8 (cf. sec. 4.2)][9] The published empirical results indicate it’s highly toxic.

- Bishop et al in NEJM 97 reported a Randomized Placebo Controlled (RPC) test on preemies.[10][11] Scaling the toxicity they measured to the 4000 mcg in the first six months projects the vaccine series’ aluminum as costing each recipient maybe 15 IQ points and bone density.[12]

- Animal RPC experiments also show it to be highly toxic.[13][14][15][16]

- The applicable epidemiology suggests it’s highly toxic.[8][18][19][20][21][22] Discussed more in point 8 below, basically every study that compares more to less finds less much better.

- Numerous clinical publications, whole special issues, on ASIA (Autoimmune Syndrome Induced by Adjuvants)[23][24][25]

- Any “placebo” controlled test I’ve ever found of an adjuvanted vaccine, the “placebo” contained an adjuvant.

- Safety reviews ignore the issue. Search the pdfs. [1][2][3][4]

- The FDA[26] cites a theory paper[27] that compares a published MRL based on dietary experiments in weaned rodents (thus completely uninformed about toxicity in early development) to a theoretical model of blood aluminum levels from the vaccines, and disdains all the above cited empirical evidence.

(3) The Safety Studies Ignore Confounding Patient Behavior

Since there are no Randomized Placebo Controlled (RPC) trials supporting vaccines, virtually all studies report on the association (or lack thereof) between vaccines and some iatrogenic condition. But parents who believe vaccines made their kids sick stop vaccinating them, which systematically moves sick or vaccine damaged kids in the studies into the “low vaccine”, “low thimerosal”, or similar bin. This invalidates most studies supporting safety (and the few remaining ones suck for other reasons). Numerous studies report incredible preventative effects for vaccines, presumably because of this corruption, like having more thimerosal or more MMRs is strongly preventative of autism and other mental development issues[28][29][30], or like having more vaccines was strongly preventative of atopy, apparently even years before patients got the vaccines[31]. The fact this confounding factor is overlooked demonstrates extreme confirmation bias and is the defining factor of Cargo Cult Science according to R.P. Feynman.[32]

(4) The Animal Models

Animal models reliably and repeatably show in RPC tests (a) that vaccines at the wrong time in development damage the adult brain or behavior [33][34] and (b) that multiple vaccines cause autoimmune disease even in animals bred to be non-autoimmune[35][36]. The effects are said to be robust, and as we’ve already seen there isn’t good human data rebutting them.

(5) The Contaminants

Studies have repeatedly found contaminants such as viruses, retroviruses, circoviruses, and human DNA in vaccines seemingly whenever tested, and I’ve found no reason to believe off the shelf vaccines are free[37][38][39][40][41]. Reported contaminants have included SV-40 in polio vaccines which were administered even though scientists knew the vaccines were contaminated and already had hunches and experiments indicating SV-40 causes cancer[41][42]. Chimpanzee Coryza Virus became known in humans as RSV and has killed many millions of infants and hospitalizes 100,000/yr in America today[43]. Contaminated polio vaccine is plausibly also the origin of HIV[44][41]. There are discovered viral contaminants in vaccines today[38][39], with unknown long term effects, as well as I expect many undiscovered contaminants.

(6) Studies Ask Whether Some One Vaccine Damages, and Thus Miss That Many Do.

Virtually every study not reporting damage compares kids who got numerous vaccines to kids who got numerous vaccines. Such studies wouldn’t show statistically significant results no matter how much damage the vaccines are doing, unless one vaccine or vector by itself is doing comparable or more damage than the rest put together. The studies more or less test the hypothesis one vaccine is invisibly damaging, the rest are fine, and the studies are all obscured in the presence of multiple problems, much less the kind of timing and interaction effects observed in animal models. The one study[45] often touted as proving “The Risk of Autism is Not Increased by ‘Too Many Vaccines Too Soon’”[46] in fact compares patients based on antigens, and since DTP had more than 3000 antigens and no other vaccine common among the study patients had more than a handful, effectively compared patients who’d had DTP and dozens of vaccines to patients who did not have DTP (many had DTaP instead) and dozens of vaccines. The only counterexamples to this I’ve found are contrived in bizarre ways to avoid reality, such as the study that withheld the 2 month vaccines till 3 months from a group of kids, and asked the mothers, who were terrified enough a bunch insisted on changing back to the early vaccination group, to record symptoms with no doctor even consulted, identifying the placebo effect as vaccine prevention of diseases. The authors wrote it would have been unethical to give a placebo at 2 months to the kids getting the vaccine at 3 months, in order to do the experiment blind, but apparently consider it ethical to inject dozens of vaccines into your kids with zero placebo controlled testing.[47] [48]

(7) The Extensive Evidence Indicating Flu Vaccines Damage Immune Systems, Particularly in Children.

- RPC test reported child flu vaccine recipients getting 4 times the respiratory illnesses of placebo recipients[49]

- Children seen at the Mayo Clinic 1996–2006 were 3 times as likely to be hospitalized if they had had a flu vaccine[50]

- Prior receipt of 2008-09 TIV was associated with increased risk of medically attended pH1N1 illness during the spring–summer 2009 in Canada[51]

- Multiple papers report flu vaccines damage CD8+ T Cells in both children and animal models[52][53][54]

- Flu vaccine recipients’ blood produced less IFN-gamma in response to new flu than people not previously vaccinated[55].

- The equation they use for flu vaccine “effectiveness” counts making recipients sick as effectiveness. Mathematically, if vaccine recipients get twice as many respiratory illnesses that counts the same as if they get half as many flu illnesses.[56][57] The published evidence of “effectiveness” is published evidence of collateral damage.

(8) The Epidemiological Studies That Aren’t Blatantly Confounded

All the credible ecological or epidemiological studies comparing people who got more vaccines to less indicate damage. For example,

- a 1/1000 increase in Infant Mortality is associated with each 7 additional vaccines in a national series regressed over the developed nations [18].

- An extra 680 ASD or Language impaired are associated with every 1% increase in compliance regressed over the 50 US states [19].

- High correlation between and within nations of vaccine aluminum to autism.[8]

- Two studies in Guinea-Bissau that showed recipients of DTP died far more frequently than non-recipients, even though the recipients were from far more fortunate backgrounds[20][21].

- Vaccine adverse event reports are far more likely to be fatal if they follow multiple vaccinations than two[58].

- 1 in 10 girls is reported to make an ED visit within 42 days of receiving HPV vaccine[59][60].

Every empirical study I’ve read with a methodology that’s not clearly confounded consistently indicates vaccine damage.

(9) The Consistent Anecdotal and Informal Reports

Anecdotal and informal reports actually compare vaccinated and unvaccinated, unlike the contrived and confounded studies offered to support safety.

- Virtually all the Amish who are autistic turn out to have been vaccinated, the large numbers of unvaccinated in certain communities having no ASD whatsoever.[61]

- The Homestead Medical Practice in Chicago’s Dr. Mayer Eisenstein reports: “My partners and I have over 35,000 patients who have never been vaccinated. You know how many cases of autism we have seen? ZERO, ZERO.” He also reports virtually zero asthma.[61]

- Southern religious homeschoolers were anecdotally reported to have very low vaccination rates, and similarly virtually no autism.[61]

- An online survey of 13,000 fully unvaccinated shows them to have less than a third of the prevalence of numerous conditions from allergies to scoliosis.[62] (Figure 1)

- More than a thousand parents, some of them doctors, have posted YouTube reports describing why they are confident they saw their child given autism by vaccines.[63]

![Figure 1: Online survey of 13,000 unvaccinated compared to peer-reviewed survey data of the German vaccinated population[62]. The peer-reviewed data shows the vaccinated population averaging better than one chronic ailment per person, the unvaccinated report less than a third of that. The unvaccinated survey is online, selection biased, and self-reported, but there is no trustworthy data rebutting it, and 10 reasons are given in the text to believe the unvaccinated may be much healthier.](https://lifeboat.com/blog.images/the-top-ten-reasons-i-believe-vaccine-safety-is-an-epic-mass-delusion.jpg)

Figure 1: Online survey of 13,000 unvaccinated compared to peer-reviewed survey data of the German vaccinated population[62]. The peer-reviewed data shows the vaccinated population averaging better than one chronic ailment per person, the unvaccinated report less than a third of that. The unvaccinated survey is online, selection biased, and self-reported, but there is no trustworthy data rebutting it.

(10) The Authorities, Big Pharma, and Media Are Demonstrably Not Trustworthy.

- All the above 9 points and more are readily observable, but you wouldn’t learn that from the media or in med school.

- A Senior PhD CDC whistleblower has provided numerous documents and testified to Congress about an explicit cover-up within CDC of a vaccine-autism connection,[64][65] and media whitewashed it.

- The vaccine manufacturers are exempt from any liability for vaccine damage.

- The same companies repeatedly plead guilty to marketing and safety violations and pay billions in fines.[66]

- They pay vast sums to media and fund the medical schools and research and give boondoggles and perks and contracts to doctors and revolving door government officials.[67][68]

- The authorities and big pharma never publicly commented while contaminated vaccines scientists expected to cause cancer and other dire problems were administered[41][42][43].

- The way the authorities have averted their eyes from contrary results is again the defining factor of Cargo Cult Science[32].

To summarize 10 points in two: (A) the safety literature, wherever it doesn’t outright show vaccine damage, demonstrably is bollixed to where it doesn’t show much of anything. (B) Lots of peer reviewed publications cogently report lots of consistent damage that no published evidence rationally opposes, but are ignored by authorities and media.

The vaccine safety literature is laid out in considerable detail on this TruthSift diagram https://tssciencecollaboration.com/graphtree/Are%20Vaccines%20Safe/406/4083 where readers are invited to add more pertinent citations or arguments. Anybody who thinks I am confused on any point is invited to challenge any claim above and explain why[69]. Please feel free to ask your Pediatrician or other authority, and let me know what they say. I’ve submitted to 2 medical journals so far, but been unable to obtain a substantive review, a review citing any papers or making a case I’m wrong. As I receive no substantive rebuttal, it reaffirms what I have already concluded from extensive research, none exists.

It is good to see comparisons of production cost against value-added return for drugs as part of an ongoing drive to make drugs cheaper to create and cheaper for patients. However, let’s not sacrifice quality (especially in areas like cancer, MS, etc.) for development cost savings. The value of life is priceless.

Defining the value of a drug in relation to its cost and benefit is an emerging theme in cancer care but remains untested.

A new UK study has identified a gene signature that predicts poor survival from ovarian cancer. The study also identified genes which help the cancer develop resistance to chemotherapy — offering a new route to help tackle the disease.

The study, published in the International Journal of Cancer, examined the role of HOX genes in ovarian cancer resistance and whether a drug known as HXR9 which targets HOX, could help prevent the resistance from developing.

The HOX gene family enables the remarkably rapid cell division seen in growing embryos. Most of these genes are switched off in adults, but previous research has shown that in several cancers, including ovarian cancer, HOX genes are switched back on, helping the cancer cells to proliferate and survive.

BMI technology is like anything else: it goes through an evolution process before it finally reaches a level of maturity. The good news is that, at this point in time, BMI is at the stage of that cycle where we are no longer crawling and trying to stand up; we are standing up and taking a couple of steps at a time. In the next 3 to 5 years, things should be extremely interesting in the BMI space, especially as we begin to introduce more sophisticated technology to our connected infrastructure.

Will future soldiers be able to use a direct brain interface to control their hardware?

Imagine if the brain could tell a machine what to do without having to type, speak or use other standard interfaces. That’s the aim of the US Defense Advanced Research Projects Agency (DARPA), which has committed US$60 million to a Neural Engineering System Design (NESD) project to do just that.

“Today’s best brain-computer interface systems are like two supercomputers trying to talk to each other using an old 300-baud modem,” said Phillip Alvelda, the NESD program manager. “Imagine what will become possible when we upgrade our tools to really open the channel between the human brain and modern electronics.”