Throughout their everyday lives, humans are typically required to make a wide range of decisions, which can impact their well-being, health, social connections, and finances. Understanding human decision-making processes is a key objective of many behavioral science studies, as it could in turn help researchers devise interventions that encourage people to make better choices.

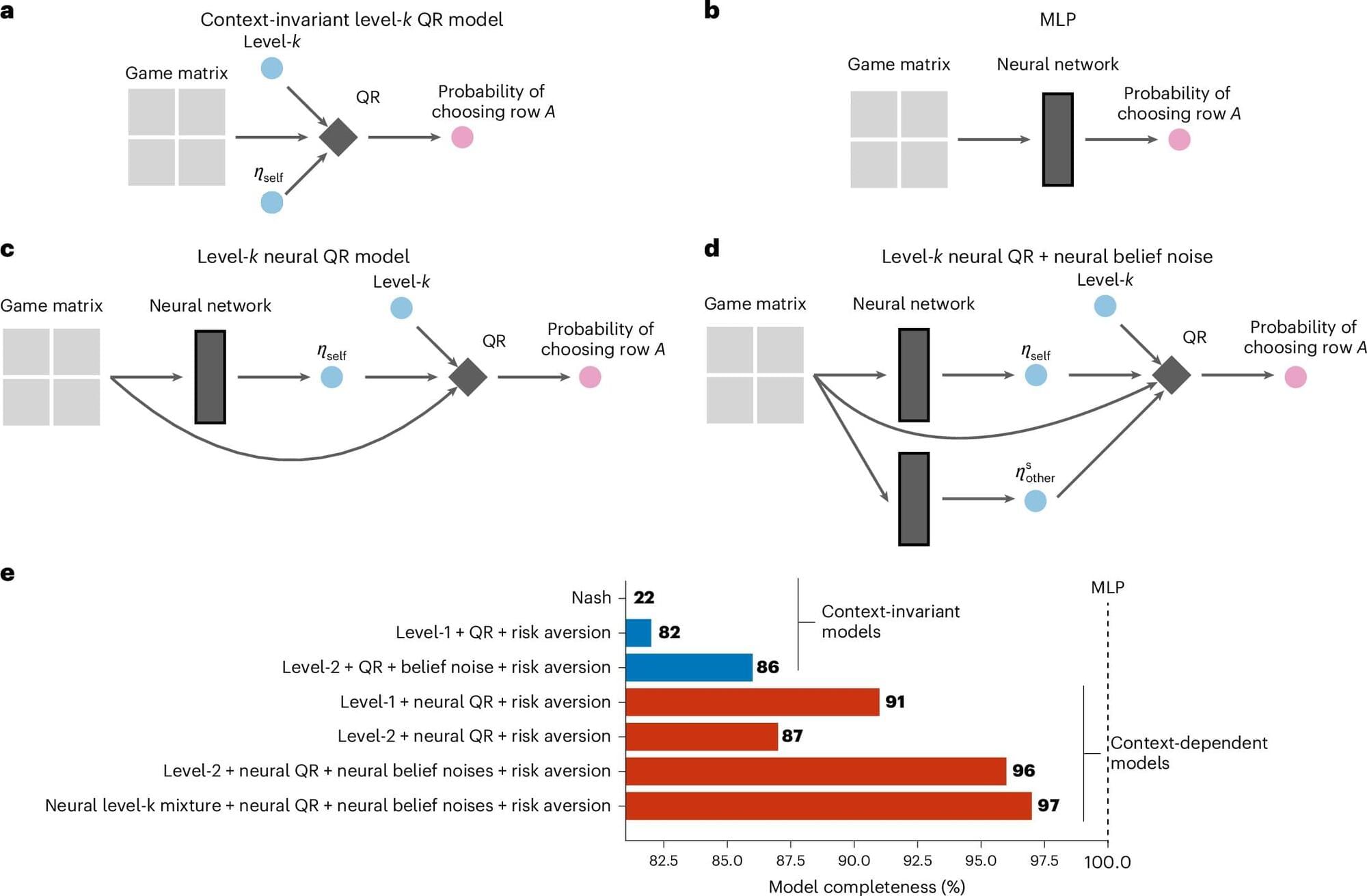

Researchers at Princeton University, Boston University and other institutes used machine learning to predict the strategic decisions of humans in various games. Their paper, published in Nature Human Behaviour, shows that a deep neural network trained on human decisions could predict the strategic choices of players with high levels of accuracy.

“Our main motivation is to use modern computational tools to uncover the cognitive mechanisms that drive how people behave in strategic situations,” Jian-Qiao Zhu, first author of the paper, told Phys.org.