Nice discovery.

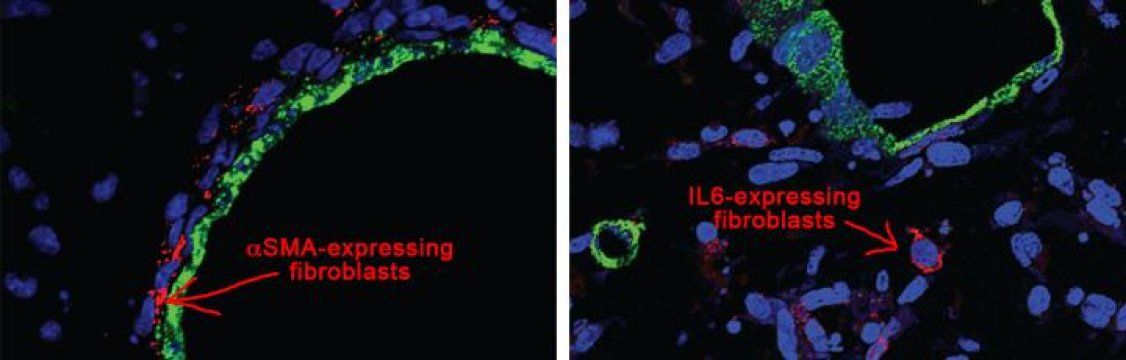

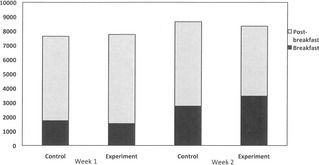

Why are pancreatic tumors so resistant to treatment? One reason is that the “wound”-like tissue that surrounds the tumors, called stroma, is much denser than the stromal tissue surrounding other, more treatable tumor types. Stromal tissue is believed to contain factors that aid tumor survival and growth. Importantly, in pancreatic cancer, its density is thought to help keep cancer-killing drugs from reaching the tumor.

“You can think of a pancreas tumor as a big raisin oatmeal cookie, with the raisins representing the cancer cells and oatmeal portion representing the dense stroma that makes up over 90% of the tumor,” says David Tuveson, M.D., Ph.D., Director of the Cancer Center at Cold Spring Harbor Laboratory (CSHL). Tuveson leads the Lustgarten Foundation Designated Lab in Pancreatic Cancer Research at CSHL, and his team today reports an important discovery about stromal tissue in the major form of pancreatic cancer, called pancreatic ductal adenocarcinoma, or PDA.

Tuveson, who is also Director of Research for the Lustgarten Foundation, wants to know more about stromal tissue in PDA. “We were interested to read the results obtained by researchers in other labs, who targeted the stroma in various ways, sometimes with encouraging results, but sometimes causing tumors to grow even faster,” he explains.