Join us for the LEAF Festive Fireside Chat, where we talk about this year’s coolest science, our favourite moments and our goals for the future.


This 195-gigapixel photo of Shanghai is so huge you can zoom in from miles away and see people’s faces

Smartphone cameras have gotten so good that we struggle to find a reason to invest in a point-and-shoot, but major advances are still being made in camera tech that are light-years ahead of anything you can fit in your pocket. A new panorama shot by China’s Jingkun Technology (which calls itself “Big Pixel”) is a great example, and its size is so jaw-dropping you could spend days staring at it.

The photo, taken from high on the Oriental Pearl Tower in Shanghai, shows the surrounding landscape in stunning detail. From your virtual perch many stories above the ground, you can zoom in so far that you can read the license plates on cars and spot smiling faces greeting each other on the sidewalk.

Stephen Hawking’s Final Theory About Our Universe Will Melt Your Brain

Nope. Too late. It melted long ago, ha…

Groundbreaking physicist Stephen Hawking left us one last shimmering piece of brilliance before he died: his final paper, detailing his last theory on the origin of the Universe, co-authored with Thomas Hertog from KU Leuven.

The paper, published in the Journal of High Energy Physics in May, proposes that the Universe is far less complex than current multiverse theories suggest.

It’s based on a concept called eternal inflation, first introduced in 1979 and published in 1981.

Drive-on, wheel-driven track system

You could give your ride a set of treads this winter.

How The 1% Will Live After The End Of The World | VICE on HBO (Bonus)

Throughout human history, doomsayers — people predicting the end of the world — have lived largely on the fringes of society. Today, a doomsday industry is booming thanks to TV shows, movies, hyper-partisan politics and the news media. With the country’s collective anxiety on the rise, even the nation’s wealthiest people are jumping on board, spending millions of dollars on survival readiness in preparation for unknown calamities. We sent Thomas Morton to see how people across the country are planning to weather the coming storm.

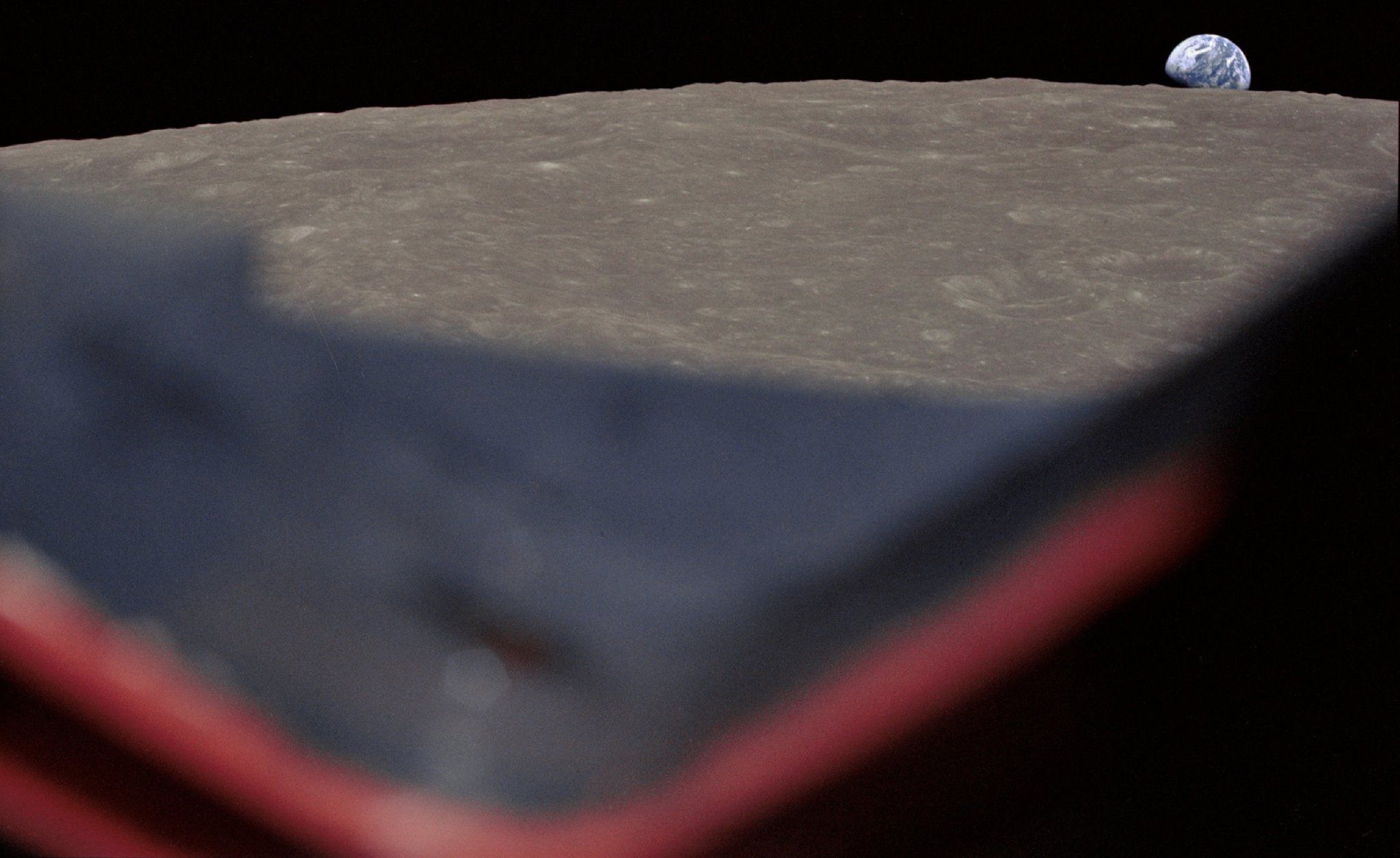

69 hours after launch, the crew reached the far side of the Moon

They glimpsed Earth outside their windows. “It’s a beautiful, beautiful view,” Frank Borman said to Mission Control. That Christmas Eve broadcast ended 1968 on a hopeful note, a reminder of the all-encompassing curiosity stitched into the fabric of all humans. Sink into the far side by celebrating our #Apollo50 anniversary here: https://go.nasa.gov/2EGQJJX