The Urban Farmer is a comprehensive, hands-on manual to the techniques and business strategies you need to make a good living growing high-yield, high-value crops right in your own backyard (or someone else’s).

What exactly would it take to create our very own Schwarzschild Kugelblitz?

Could a Dyson Sphere Harness the Full Power of the Sun? — https://youtu.be/jOHMQbffrt4

Kugelblitz! Powering a Starship With a Black Hole

https://www.space.com/24306-interstellar-flight-black-hole-power.html

“Interstellar flight certainly ranks among the most daunting challenges ever postulated by human civilization. The distances to even the closest stars are so stupendous that constructing even a scale model of interstellar distance is impractical. For instance, if on such a model the separation of the Earth and sun is 1 inch (2.5 centimeters), the nearest star to our solar system (Proxima Centauri) would be 4.3 miles (6.9 kilometers) away!”
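The quoted scale model can be checked with a few lines of arithmetic. This is a minimal sketch, assuming a conversion of about 63,241 astronomical units per light-year and a distance to Proxima Centauri of roughly 4.24 light-years; neither value comes from the quoted article, and the function names are illustrative.

```python
# Scale-model check for the quoted figure: 1 AU (Earth-sun distance) shrunk to 1 inch.
AU_PER_LIGHT_YEAR = 63_241.08  # astronomical units per light-year (rounded, assumed)
INCHES_PER_MILE = 63_360
CM_PER_INCH = 2.54


def scaled_distance_inches(distance_ly: float, inches_per_au: float = 1.0) -> float:
    """Convert a real distance in light-years to a scale-model distance in inches."""
    return distance_ly * AU_PER_LIGHT_YEAR * inches_per_au


def inches_to_miles_and_km(inches: float) -> tuple[float, float]:
    """Express a model distance in inches as (miles, kilometers)."""
    miles = inches / INCHES_PER_MILE
    km = inches * CM_PER_INCH / 100_000  # 100,000 cm per km
    return miles, km


if __name__ == "__main__":
    proxima_ly = 4.24  # assumed distance to Proxima Centauri, in light-years
    miles, km = inches_to_miles_and_km(scaled_distance_inches(proxima_ly))
    print(f"Proxima Centauri on the model: {miles:.2f} miles ({km:.2f} km)")
```

With 4.24 light-years the model distance comes out at about 4.2 miles (6.8 km); plugging in 4.3 light-years reproduces the quoted 4.3 miles (6.9 km), so the article appears to have rounded the distance up.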

Kugelblitz Black Holes: Lasers & Doom

https://futurism.com/kugelblitz-black-holes-lasers-doom

“A kugelblitz black hole could theoretically be created by aiming lasers vastly more powerful than anything we have today at a single point. Logically, one could assume that turning off the lasers would ‘turn off’ the black hole? Well, that’s not quite right.”
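Why the lasers must be so powerful follows from general relativity: a black hole of mass M forms when the equivalent energy E = Mc² is squeezed inside the Schwarzschild radius r_s = 2GM/c². The sketch below computes both quantities; the mass of 10^9 kg is a hypothetical value chosen for illustration, not a figure from the articles, and the function names are our own.

```python
# Energy and confinement radius needed for a kugelblitz of a given mass.
G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0  # speed of light, m/s


def schwarzschild_radius(mass_kg: float) -> float:
    """Radius (m) inside which this mass, or its energy equivalent, forms a black hole."""
    return 2 * G * mass_kg / C**2


def energy_equivalent(mass_kg: float) -> float:
    """Rest-mass energy E = m c^2, in joules."""
    return mass_kg * C**2


if __name__ == "__main__":
    mass = 1e9  # hypothetical black-hole mass in kg, for illustration only
    print(f"energy the lasers must deliver: {energy_equivalent(mass):.3e} J")
    print(f"region it must be focused into: {schwarzschild_radius(mass):.3e} m")
```

For this mass the lasers would have to deliver roughly 9 × 10^25 J into a region about 1.5 × 10^-18 m across, far smaller than a proton, which is why the idea remains theoretical.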

What is a Dyson sphere?

“In recent years, astronomers explored that possibility with a bizarre star, known to astronomers as KIC 8462852 – more popularly called Tabby’s Star for its discoverer Tabetha Boyajian. This star’s strange light was originally thought to indicate a possible Dyson sphere. That idea has been discarded, but, in 2018, other possibilities emerged, such as that of using the Gaia mission to search for Dyson spheres.”

____________________

Elements is more than just a science show. It’s your science-loving best friend, tasked with keeping you updated on, and interested in, all the compelling, innovative and groundbreaking science happening around us. Join our passionate hosts as they break down and present fascinating science, from quarks to quantum theory and beyond.

According to the report, the Defense Advanced Research Projects Agency (DARPA) has requested at least $10 million for its Reactor on a Rocket (ROAR) programme.

DARPA intends to assemble a nuclear thermal propulsion (NTP) system in orbit, Aviation Week reported, citing the Pentagon’s 2020 budget.

“The program will initially develop the use of additive manufacturing (AM) approaches to print NTP fuel elements… In addition, the program will investigate on-orbit assembly techniques to safely assemble the individual core element subassemblies into a full demonstration system configuration, and will perform a technology demonstration”, the budget document says.

Bio-PDO, the Susterra propanediol produced by DuPont Tate & Lyle Bio Products, is among the many smart applications of corn and serves as a building block for a number of environmentally friendly materials increasingly used in footwear manufacture.

We are all familiar with sweetcorn, or corn on the cob, and many of us enjoy eating it boiled and coated in butter. Americans are especially fond of it, although less than 1% of the corn grown annually in the USA is for human consumption. The remaining 99% is industrial corn, or maize, which is used for animal feed and processed into a variety of other products. Among these is Bio-PDO, sold commercially as Susterra propanediol by DuPont Tate & Lyle Bio Products, which serves as the basis for a number of environmentally friendly materials increasingly used in footwear manufacture.

The variety of corn involved is known as yellow dent and has a high starch content. After harvesting and drying, it is transported to Tate & Lyle’s wet mill at Loudon, Tennessee. Using a wet milling process, the corn is separated into its four basic components: starch, germ, fibre and protein. The nutrient-rich components are used for animal feed, while glucose is derived from the remaining starch fraction and is the raw material for making 1,3-propanediol. The process starts with a culture of a special microorganism grown on the glucose in a small flask. As the culture grows, it is transferred to a seed fermenter and then to a ten-story-high production fermenter. Fermentation takes place at precisely controlled temperatures and relies on a patented process in which the microorganism acts as a biocatalyst, converting glucose into biobased 1,3-propanediol.

Spiders, mushrooms and algae may help build the next Hilfiger, Levi's and Chanel.

Organisms are the great designers of our planet, producing materials in distinct patterns to serve specific functions. Bees produce hexagonal honeycombs to store honey, spiders weave symmetrical webs to capture prey, and nautiluses form logarithmic spiral shells to protect their soft bodies. Synthetic biologists, ever inspired by nature, are harnessing these abilities to revolutionize apparel by guiding structural assembly at the molecular level.

Here are three examples of innovative companies — in Tokyo, New York, and Berkeley — that are letting nature show the way to better, more sustainable materials in a quest to alter the fashion and apparel industries forever.

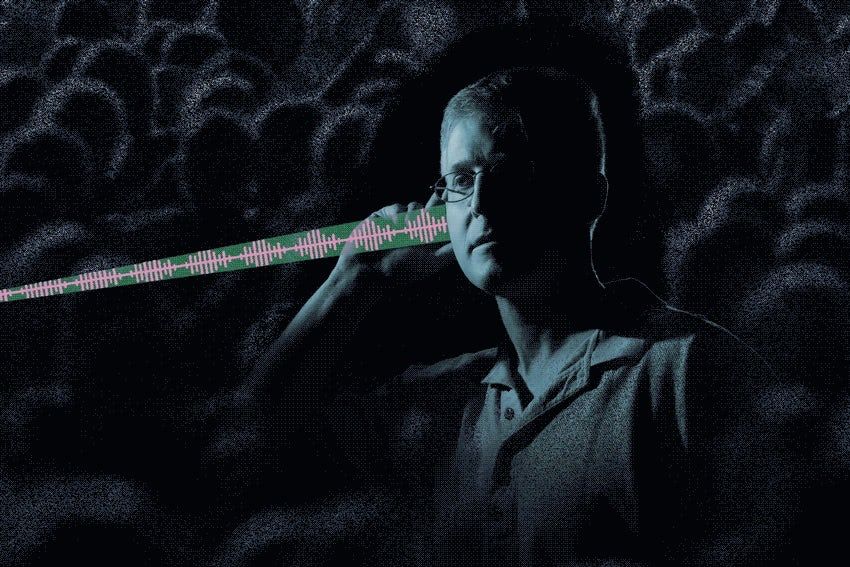

Lasers have been used to send targeted, quiet messages to someone from several meters away, in a way that no one nearby would be able to hear.

How it works: To send the messages, researchers from MIT relied upon the photoacoustic effect, in which water vapor in the air absorbs light and forms sound waves. The researchers used a laser beam to transmit a sound at 60 decibels (roughly the volume of background music or conversation in a restaurant) to a target person who was standing 2.5 meters away.
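To put the 60-decibel figure in physical terms: sound levels are usually quoted as sound pressure level (SPL), where L = 20·log10(p / p_ref) with p_ref = 20 µPa. The article does not state the reference, so treating its figure as dB SPL is our assumption; the helper names below are illustrative.

```python
import math

P_REF_PA = 20e-6  # standard reference pressure for sound in air: 20 micropascals


def spl_db_to_pascals(level_db: float) -> float:
    """Convert a sound pressure level in dB (re 20 uPa) to RMS pressure in pascals."""
    return P_REF_PA * 10 ** (level_db / 20)


def pascals_to_spl_db(pressure_pa: float) -> float:
    """Inverse conversion: RMS pressure in pascals to dB SPL."""
    return 20 * math.log10(pressure_pa / P_REF_PA)


if __name__ == "__main__":
    p = spl_db_to_pascals(60)
    print(f"60 dB SPL is about {p:.3f} Pa of RMS pressure")
    # Every +20 dB corresponds to a tenfold increase in pressure amplitude.
    print(spl_db_to_pascals(80) / spl_db_to_pascals(60))
```

Under that assumption, the beam produced pressure fluctuations of only about 0.02 Pa at the listener, roughly five million times smaller than atmospheric pressure.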

A second technique modulated the power of the laser beam to encode a message, which produced a quieter but clearer result. The team used it to beam music, recorded speech, and various tones, all at conversational volume. “This can work even in relatively dry conditions because there is almost always a little water in the air, especially around people,” team leader Charles M. Wynn said in a press release. Details of the research were published in Optics Letters.
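The article does not describe how the beam power was modulated. As a generic illustration only, the sketch below shows simple amplitude modulation, assuming the audible sound tracks variations in the beam's power: an audio waveform s(t) in [-1, 1] is mapped onto power levels P(t) = P0·(1 + m·s(t)). The function names, the 48 kHz sample rate and the power units are hypothetical choices, not details of the MIT setup.

```python
import math


def tone(freq_hz: float, duration_s: float, sample_rate: int = 48_000) -> list[float]:
    """Generate a sine tone with samples in [-1, 1] (hypothetical test signal)."""
    n = round(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def modulate_laser_power(audio: list[float], mean_power: float, depth: float) -> list[float]:
    """Map audio samples onto laser power: P = P0 * (1 + m * s).

    Keeping the modulation depth m within [0, 1] guarantees the commanded
    power never goes negative. Units of mean_power are arbitrary here.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be in [0, 1]")
    powers = []
    for s in audio:
        s = max(-1.0, min(1.0, s))  # clip to the valid audio range
        powers.append(mean_power * (1.0 + depth * s))
    return powers


if __name__ == "__main__":
    signal = tone(440.0, 0.01)  # 10 ms of a 440 Hz tone
    powers = modulate_laser_power(signal, mean_power=1.0, depth=0.5)
    print(len(powers), min(powers), max(powers))
```

A depth below 1 trades loudness for headroom: the power swings between 0.5 and 1.5 times the mean here, so the waveform is never clipped at zero.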

https://www.nature.com/articles/s41591-019-0375-9

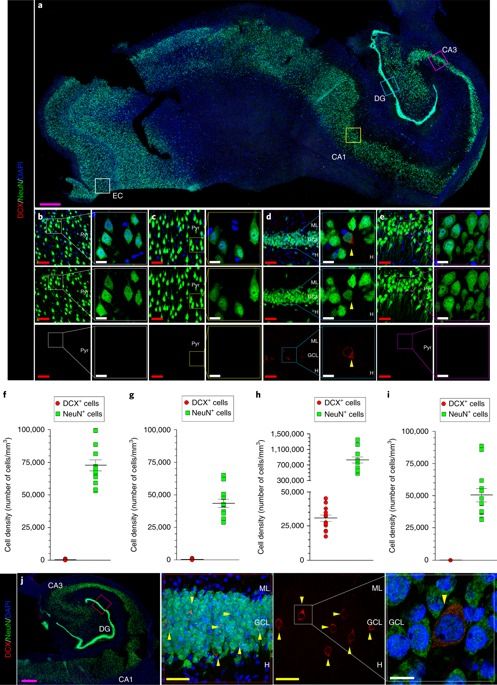

The hippocampus is one of the most affected areas in Alzheimer’s disease (AD). Moreover, this structure hosts one of the most unique phenomena of the adult mammalian brain, namely, the addition of new neurons throughout life. This process, called adult hippocampal neurogenesis (AHN), confers an unparalleled degree of plasticity to the entire hippocampal circuitry [3,4]. Nonetheless, direct evidence of AHN in humans has remained elusive. Thus, determining whether new neurons are continuously incorporated into the human dentate gyrus (DG) during physiological and pathological aging is a crucial question with outstanding therapeutic potential. By combining human brain samples obtained under tightly controlled conditions and state-of-the-art tissue processing methods, we identified thousands of immature neurons in the DG of neurologically healthy human subjects up to the ninth decade of life. These neurons exhibited variable degrees of maturation along differentiation stages of AHN. In sharp contrast, the number and maturation of these neurons progressively declined as AD advanced. These results demonstrate the persistence of AHN during both physiological and pathological aging in humans and provide evidence for impaired neurogenesis as a potentially relevant mechanism underlying memory deficits in AD that might be amenable to novel therapeutic strategies.

The Post spoke to tech workers in Zhongguancun and other parts of Beijing, tech hubs dubbed China’s Silicon Valley and home to internet giants such as Baidu, Meituan and ByteDance, for a snapshot of what life there is really like.

Life in China’s tech industry is not easy, with young employees and entrepreneurs battling burnout while also worrying about career ceilings and lay-offs.