Hundreds of people in Florida and Cuba witnessed the phenomenon.

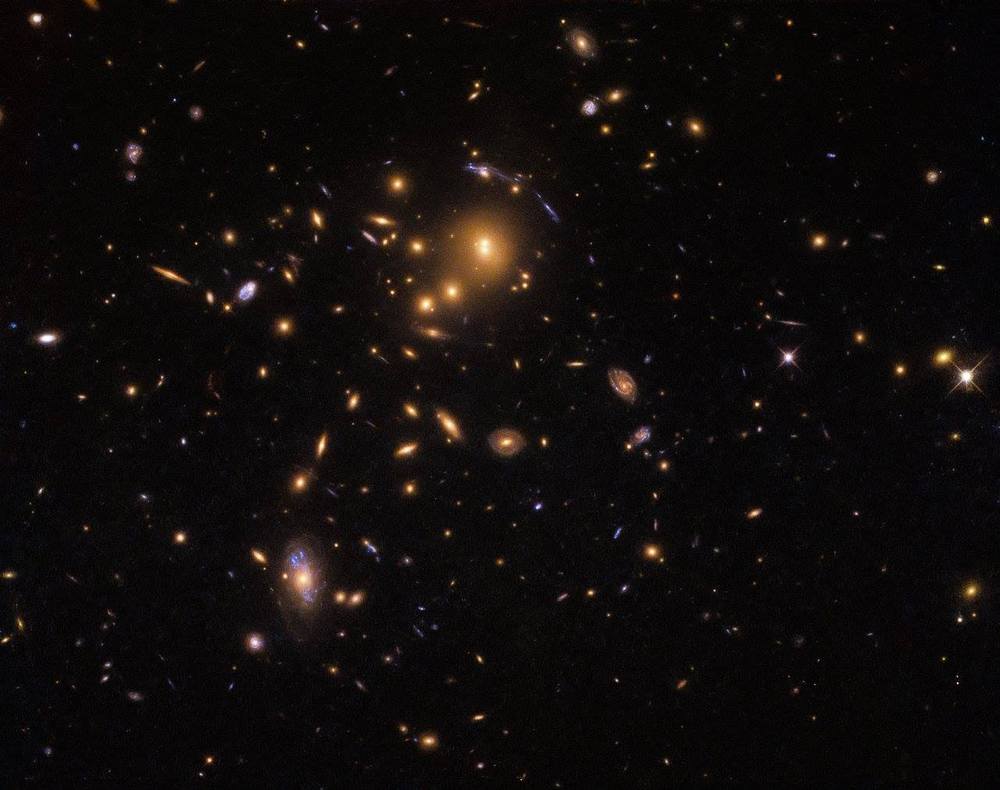

NASA’s Hubble Space Telescope doesn’t usually get much assistance from its celestial subjects — but to take this image, the telescope opted for teamwork and made good use of a fascinating cosmic phenomenon known as gravitational lensing. See the result of this teamwork: https://go.nasa.gov/2SF31sW

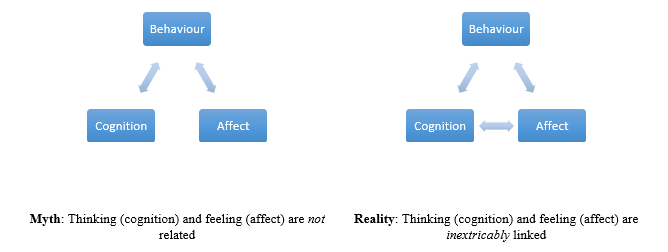

How Emotions Impact Cognition

Two information-processing systems determine the human emotional response: the affective and cognitive processing systems. The affective system operates outside of conscious thought and is reactive, in that a series of psychophysiological events is initiated automatically following the receipt of sensory information. In contrast, the cognitive processing system is conscious and involves analysis of sensory information to influence and even counteract the affective system. Affects (i.e., things that induce some change to the affective system) are divided into positive and negative groups. Positive affect has the potential to improve creative thinking, while negative affect narrows thinking and has the potential to adversely affect performance on simple tasks. Emotions are the product of changes in the affective system brought about by sensory information stimulation. Research suggests positive emotions (such as happiness, comfort, contentedness, and pleasure) help us make decisions, allow us to consider a larger set of options, decide more quickly, and develop more creative problem-solving strategies. These findings suggest attractive things really do work better (Norman 2005), even if this is only the case because they make us feel better when we are using them.

Much of the work into how users and customers behave focuses on the emotional responses elicited by a product. However, emotions are the product of complex processing systems, which essentially convert sensory information into the psychophysiological and behavioral changes that we refer to as emotional responses. According to Don Norman, cognition and affect are in charge of these emotional responses. Cognition and affect are information-processing systems, which help us convert information from our environment into accurate representations of the world and make value judgments that determine how we respond and behave.

Norman distinguishes the cognitive and affective systems, and defines emotion, thusly: “The cognitive system interprets and makes sense of the world. Affect is the general term for the judgemental system, whether conscious or subconscious. Emotion is the conscious experience of affect, complete with attribution of its cause and identification of its object”. The affective and cognitive systems are thought to work independently, but they influence one another, with the former operating unconsciously while the latter operates at the conscious level. For example, imagine you are about to make a speech in front of a room full of people; the affective system is immediately called into action, with chemicals released in your body in response to the situation automatically and without your ability to control this physiological response.

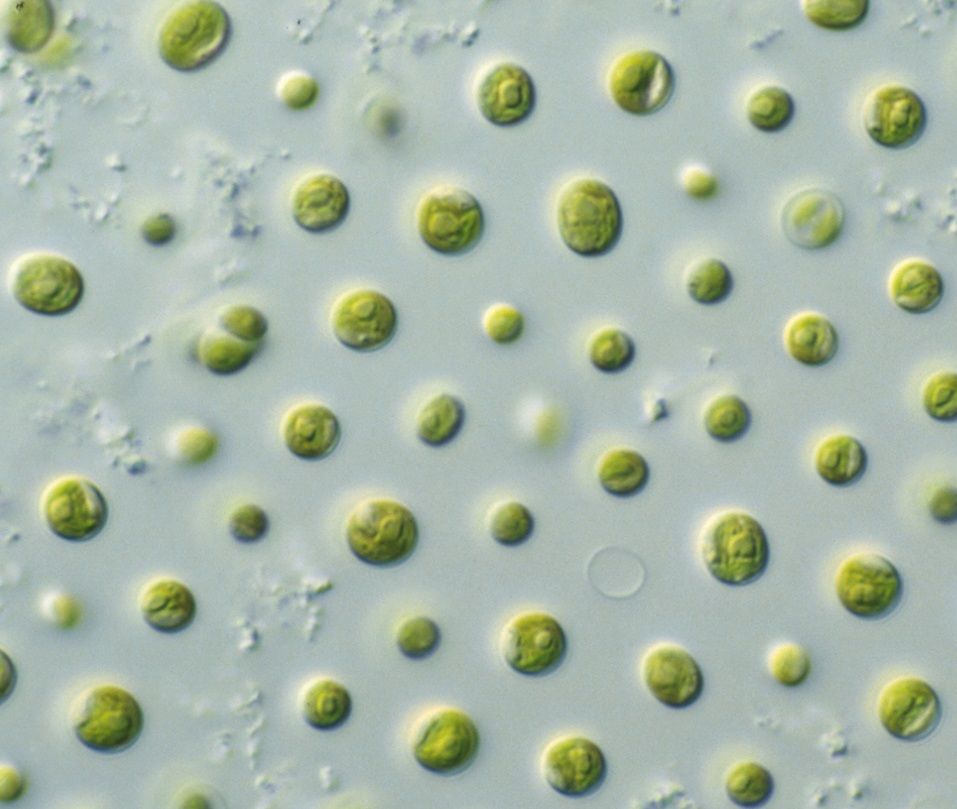

Producing biofuels from algae

Microalgae are showing huge potential as a sustainable source of biofuels.

Producing biofuels from renewable sources.

Due to concerns about peak oil, energy security, fuel diversity and sustainability, there is great interest around the world in renewable sources of biofuels.

Microalgae are great candidates for sustainable production of biofuels and associated bioproducts:

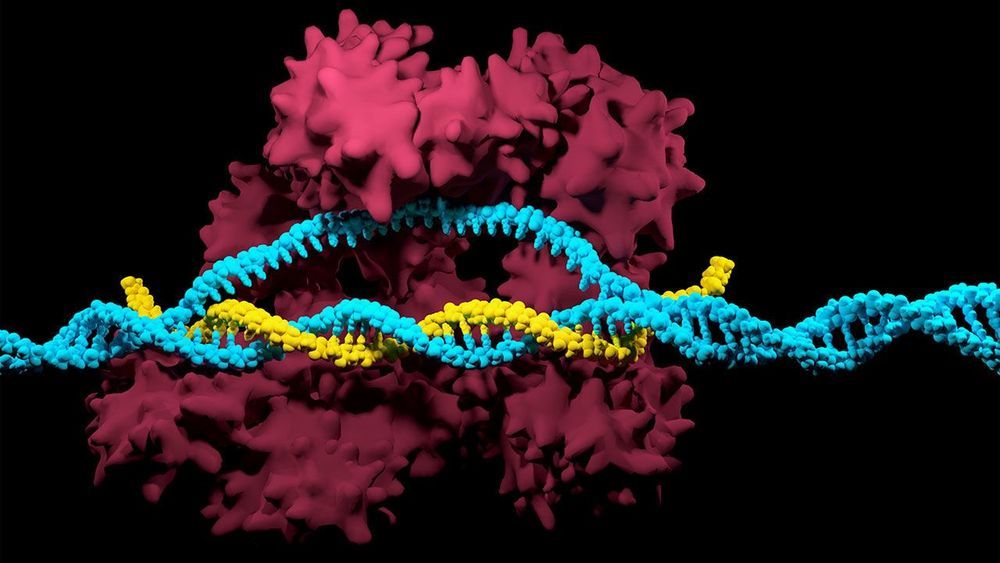

Nanomachines taught to fight cancer

Scientists from ITMO in collaboration with international colleagues have proposed new DNA-based nanomachines that can be used for gene therapy for cancer. This new invention can greatly contribute to more effective and selective treatment of oncological diseases. The results were published in Angewandte Chemie.

Want to live forever? You just have to make it to 2050

“If you’re under 40 reading this article, you’re probably not going to die unless you get a nasty disease.”

Those are the words of esteemed futurologist Dr. Ian Pearson, who told The Sun he believes humans are very close to achieving “immortality” – the ability to never die.

Humans have been trying to find a way to dodge death for years.

Gaze Upon the Black Magic of Electrical Discharge Machining

Ever seen GIFs of metal parts fitting together so precisely that the boundaries between them seem to disappear? That’s the joy of EDM. (Um, the other EDM.)

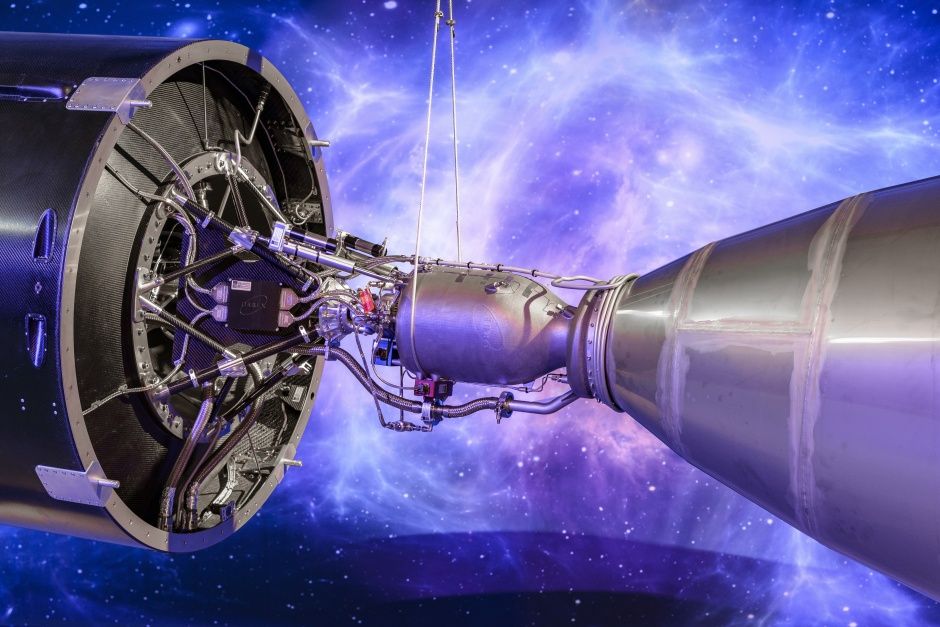

Scottish space firm unveils world’s largest 3D printed rocket engine

Scottish space firm Orbex has unveiled an engineering prototype of a rocket that’s at the heart of plans to develop a UK satellite launch capability.

The company, which is involved in plans to develop the UK’s first spaceport in Sutherland, Scotland, unveiled the rocket at the opening of its new headquarters and rocket design facility in Forres in the Scottish Highlands.

Designed to deliver small satellites into Earth’s orbit, Orbex Prime is a two-stage rocket that’s claimed to be up to 30% lighter and 20% more efficient than any other vehicle in the small launcher category. It is also the first commercial rocket engine designed to work with bio-propane, a clean-burning, renewable fuel source that cuts carbon emissions by 90% compared to fossil hydrocarbon fuels.

Virgin Galactic founder Richard Branson sets date of first trip into space

British billionaire plans to make a suborbital flight on the 50th anniversary of the Apollo 11 moon landing.

Sir Richard Branson stands beside the Virgin Galactic spacecraft at the Farnborough International Airshow on Nov. 7, 2012 in Hampshire, England. Steve Parsons / PA Images via Getty Images file.