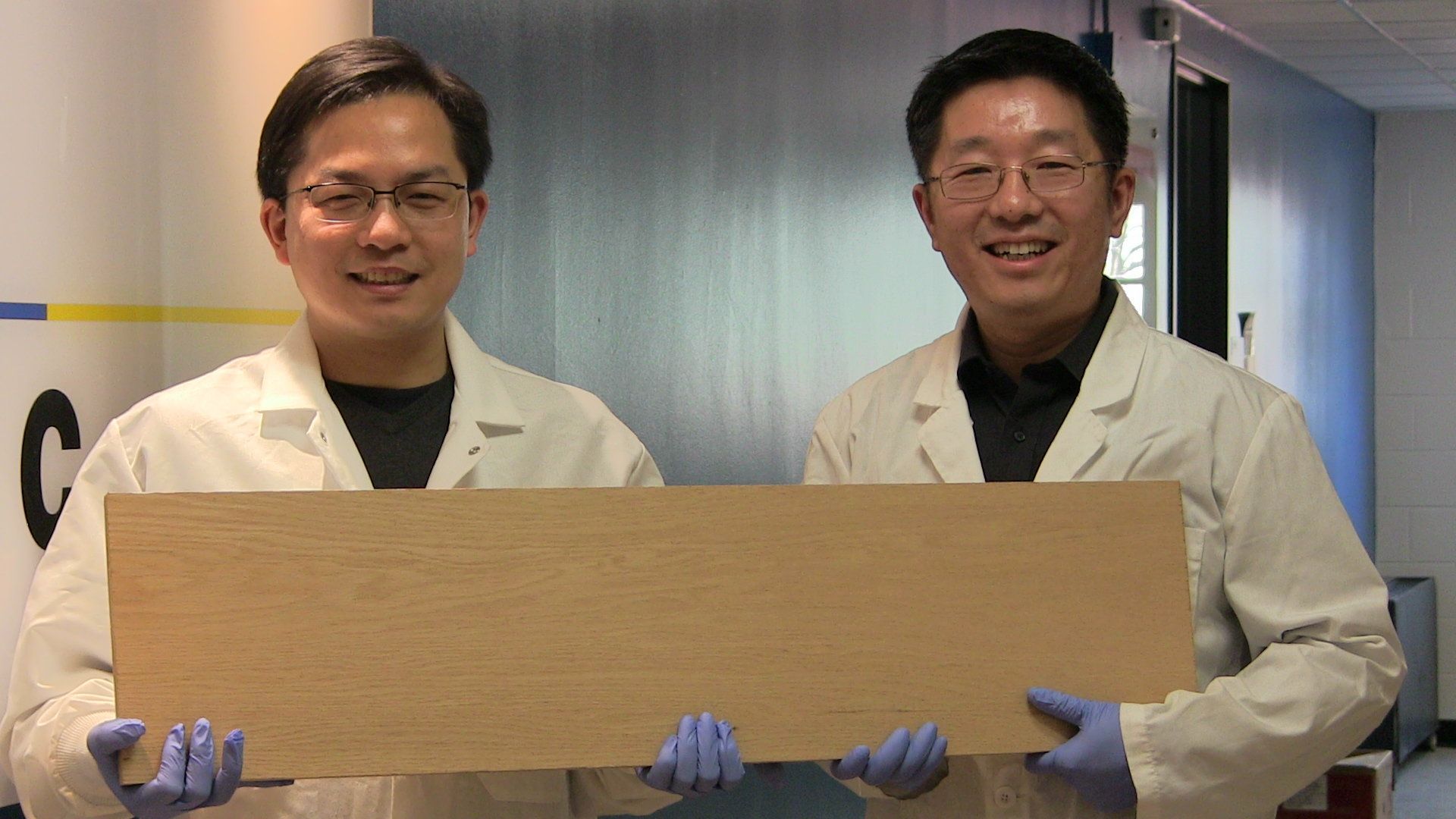

Engineers at the University of Maryland, College Park (UMD) have found a way to make wood more than 10 times stronger and tougher than before, creating a natural substance that is stronger than many titanium alloys.

“This new way to treat wood makes it 12 times stronger than natural wood and 10 times tougher,” said Liangbing Hu of UMD’s A. James Clark School of Engineering, the leader of the team that did the research, which is to be published on February 8, 2018, in the journal Nature. “This could be a competitor to steel or even titanium alloys, it is so strong and durable. It’s also comparable to carbon fiber, but much less expensive.” Hu is an associate professor of materials science and engineering and a member of the Maryland Energy Innovation Institute.

“It is both strong and tough, which is a combination not usually found in nature,” said Teng Li, the co-leader of the team and Samuel P. Langley Associate Professor of mechanical engineering at UMD’s Clark School. His team measured the dense wood’s mechanical properties. “It is as strong as steel, but six times lighter. It takes 10 times more energy to fracture than natural wood. It can even be bent and molded at the beginning of the process.”