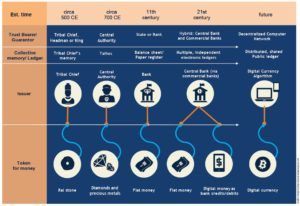

At Quora.com, I respond to questions about Bitcoin and cryptocurrency. Today, a reader asked, “Will we all be using a blockchain-based currency some day?”

This is an easy question to answer, but not for the usual geeky reasons: a capped supply, redundant bookkeeping, privacy and liberty, or blind passion. No, these are all tangential. But first, let’s be clear about the answer:

Yes, Virginia. We are all destined to move, eventually, to a blockchain-based currency.

I am confident of this because of one enormous benefit that trumps all other considerations, and because the arguments behind the perceived negatives are flawed.

Let’s start by considering the reasons why many analysts and individuals expect cryptocurrencies to fail to achieve widespread adoption, especially as a currency:

- It lacks ‘intrinsic value’, government backing or a promise of redemption

- It facilitates crime

- Privacy options interfere with legitimate tax enforcement

- It is susceptible to hacks, scams, forgery, etc.

- It is inherently deflationary, and thus retards economic growth

- It subverts a government’s right to control its own monetary policy

All of these statements are untrue except the last two. I explain and justify my thoughts on each point in other articles, but let’s look at the two points that are partially true:

- Indeed, a capped blockchain-based cryptocurrency is deflationary, but this will not necessarily inhibit economic growth. In fact, it will greatly spur commerce, jobs and international trade.

- Yes, widespread adoption of a permissionless, open-source, peer-to-peer cryptocurrency (not just as a payment instrument, but as the money itself) will decouple a government from its money supply, interest rates, and more. This independence, combined with immutable trust, is a very good thing for everyone, especially for government.

How so?…

Legislators, treasuries and reserve boards will lose their ability to manipulate the supply and demand of money. That’s because the biggest spender of all no longer gets to define “What is money?” Each dollar spent must be collected from taxpayers or borrowed from creditors who honestly believe in a nation’s ability to repay. Ultimately, Money out = Money in. This is what balancing the books requires in every organization.

This last point is why I am certain that we will all be using a blockchain-based cryptocurrency, and not one that is issued by a government or backed by gold, the dollar, a redemption promise, or some other thing of value.

Just like the dollar today, its value arises from trust and a robust two-sided network. So, which of these would you rather trust?

a) The honesty, fiscal restraint and transparency of transient politicians beholden to their political base?

b) The honesty, fiscal restraint and transparency of an asset that is capped, immutable and auditable, that has a robust two-sided network, and that is not gated by any authority or sanctioned banking infrastructure?

Today, with the exception of the United States Congress, everyone must ultimately balance their books: individuals, households, corporations, NGOs, churches, charities, clubs, cities, states and even other national governments. Put another way, only the United States can create money without a requirement to honor, repay or demonstrate equivalency. This remarkable exclusion was made possible by the post-World War II evolution of the dollar as a “reserve currency” and by the fractional reserve method through which US banks create money out of thin air and then lend it with the illusion of government insurance as backing (a risky pyramid scheme that is gradually unravelling).

But imagine a nation that agrees upon a form of cash that arises from a “perfect” and fair natural resource. Imagine a future where no one, not even governments, can game the system. Imagine a future where creditors know that a debtor cannot print paper currency to settle debts. Imagine what can be accomplished if citizens truly respect their government because the government lives by the same accounting rules as everyone else.

A fair cryptocurrency (based on Satoshi’s open-source code and free for anyone to use, mine, or trade) is gold for the modern age. But unlike gold, the total quantity is clearly understood. It is portable, electronically transmittable (instant settlement without a clearing house) and immutable, and it needn’t be assayed in the field with each transaction.
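The claim that Bitcoin’s total quantity is clearly understood can be checked with simple arithmetic. Below is a minimal sketch, assuming Bitcoin’s published issuance schedule: a block subsidy that starts at 50 BTC, halves every 210,000 blocks, and is counted in whole satoshis (1 BTC = 100,000,000 satoshis), so fractional satoshis are dropped at each halving. The constant and function names are hypothetical and this is not Bitcoin Core’s code; it also ignores coins that are lost or unspendable.

```python
# Sketch: total bitcoin supply implied by the halving schedule (assumed rules, illustrative names).
SATOSHIS_PER_BTC = 100_000_000
INITIAL_SUBSIDY = 50 * SATOSHIS_PER_BTC  # block reward at launch, in satoshis
HALVING_INTERVAL = 210_000               # blocks between subsidy halvings


def total_supply_satoshis() -> int:
    """Sum the subsidy paid in every halving era until it rounds down to zero."""
    total = 0
    subsidy = INITIAL_SUBSIDY
    while subsidy > 0:
        total += subsidy * HALVING_INTERVAL
        subsidy //= 2  # integer halving discards fractional satoshis
    return total


if __name__ == "__main__":
    sats = total_supply_satoshis()
    print(sats)                     # 2099999997690000
    print(sats / SATOSHIS_PER_BTC)  # just under 21 million BTC
```

Because the subsidy is an integer that is halved with rounding down, the sum lands slightly below 21 million BTC, and anyone can reproduce the figure independently.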

And the biggest benefit arises directly as a byproduct of these properties: cryptocurrency (and Bitcoin in particular) is remarkably good for government. All it takes for eventual success is an understanding of the mechanism, incremental improvements to safety and security practices, and widespread trust that others will continue to value and covet your coins in the future. These are all achievable waypoints on the road to universal adoption.

Philip Raymond co-chairs CRYPSA, hosts the Bitcoin Event and is keynote speaker at Cryptocurrency Conferences. He advises The Disruption Experience in Singapore, sits on the New Money Systems board of Lifeboat Foundation and is a top writer at Quora.