Microsoft has been on a quest to build the holy grail of computers for over a decade, pouring large sums of money into quantum computing research, and the company says it is now ready to move on to the engineering phase of the endeavor. At least, that is what Microsoft executive Todd Holmdahl aims to accomplish by developing the hardware and software needed to get there.

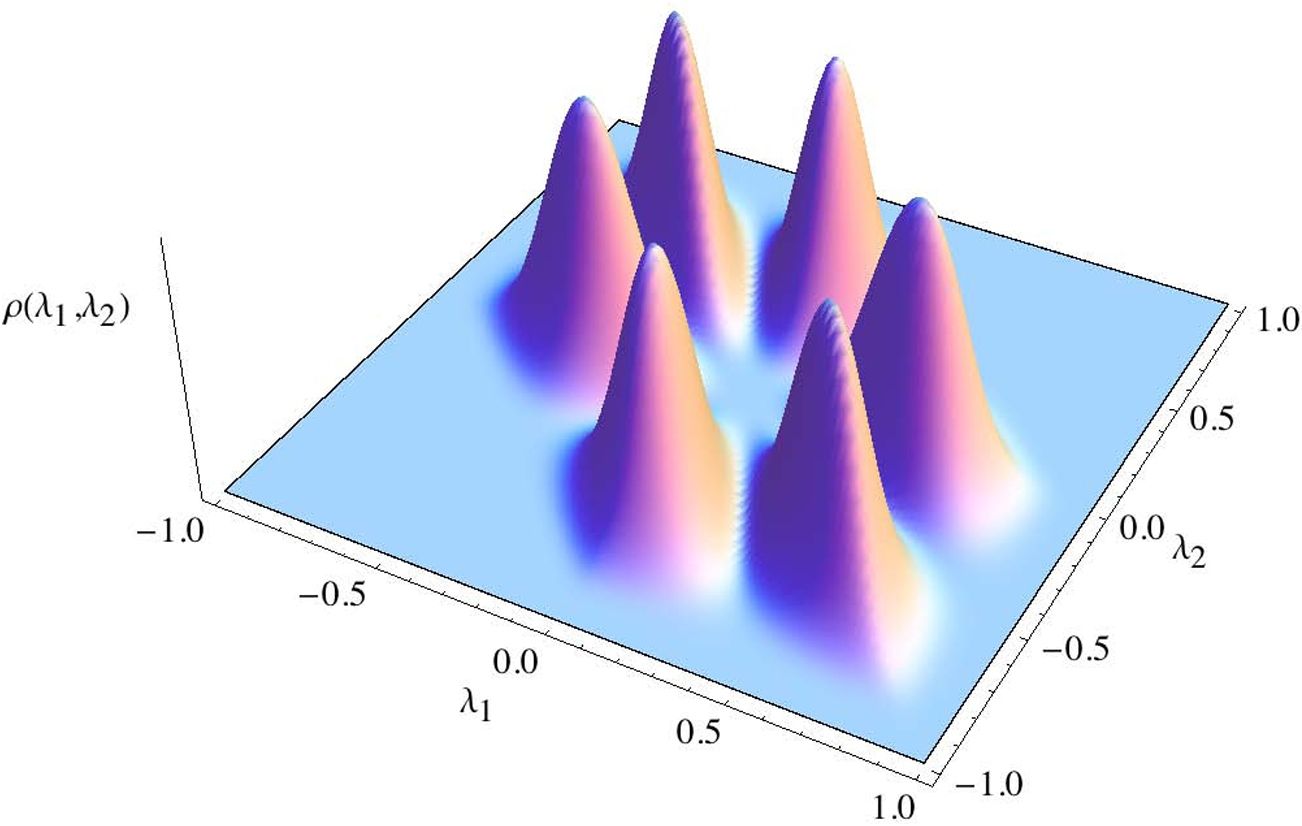

What do two random mixed quantum states look like in a graphics image? Pretty.

Today’s Image of the Week comes from the study “The difference between two random mixed quantum states: exact and asymptotic spectral analysis” by researchers at the Universidad de los Andes and ETH Zürich. In this recent JPhysA paper, José Mejía, Camilo Zapata and Alonso Botero investigate the spectral statistics of the difference between two density matrices, each of which is obtained independently by partially tracing a random bipartite pure quantum state. Their results make it possible to quantify the typical asymptotic distance between the two random mixed states using various distance measures.
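To make the setup concrete, here is a minimal, standard-library-only sketch of how two such random mixed states can be sampled and compared. It assumes the random bipartite pure state is uniformly (Haar) distributed, which is sampled by normalizing a matrix of complex Gaussian amplitudes. The dimensions `n` and `k` and the helper names are hypothetical choices for illustration. The sketch uses only one distance measure, the Hilbert–Schmidt distance; it does not reproduce the paper's exact or asymptotic spectral analysis.

```python
import math
import random

def random_reduced_state(n, k, rng):
    """Return an n x n density matrix obtained by tracing out a k-dimensional
    environment from a random pure state on C^n (x) C^k (assumed Haar-random)."""
    # Complex Gaussian amplitudes arranged as an n x k coefficient matrix G.
    g = [[complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(k)] for _ in range(n)]
    # Partial trace over the environment: rho = G G^dagger / Tr(G G^dagger).
    rho = [[sum(g[i][l] * g[j][l].conjugate() for l in range(k)) for j in range(n)]
           for i in range(n)]
    tr = sum(rho[i][i].real for i in range(n))
    return [[entry / tr for entry in row] for row in rho]

def hilbert_schmidt_distance(rho1, rho2):
    """sqrt(Tr[(rho1 - rho2)^2]); for Hermitian matrices this equals the
    Frobenius norm of the difference matrix."""
    return math.sqrt(sum(abs(a - b) ** 2
                         for r1, r2 in zip(rho1, rho2)
                         for a, b in zip(r1, r2)))

rng = random.Random(0)  # fixed seed so the run is reproducible
n, k = 4, 8             # hypothetical system and environment dimensions
rho_a = random_reduced_state(n, k, rng)
rho_b = random_reduced_state(n, k, rng)
d = hilbert_schmidt_distance(rho_a, rho_b)
print(f"Hilbert-Schmidt distance: {d:.4f}")
```

Because both density matrices have unit trace, their difference is always traceless. Its eigenvalue spectrum is the object whose statistics the paper studies.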

Not sure where the author got his messaging on AI and QC (namely, that QC will make AI more fluid and humanlike), but it sounds a lot like my own words. However, even with QC there is one missing piece in the story of making AI more humanlike, and that is bringing Synbio (synthetic biology) into the mix. In fact, I cannot wait to see what my friend Alex Zhavoronkov and his team do with QC in his anti-aging work. I expect to see many great things from QC, AI, and Synbio together.

Nonetheless, I am glad to see others recognizing the potential that many of us already see.

Applications of Artificial Intelligence in Use Today

Beyond our quantum-computing conundrum, today’s so-called A.I. systems are merely advanced machine learning software with extensive behavioral algorithms that adapt to our likes and dislikes. While extremely useful, these machines aren’t getting smarter in the existential sense; rather, they improve their skills and usefulness by learning from large datasets. These are some of the most popular examples of artificial intelligence in use today.

Changing another world to support Earth life is called terraforming. But maybe it’s a better idea to just change Earth life to live on other worlds.

More stories at: http://www.universetoday.com/

Ever since Jules Verne and before — perhaps as early as the 5th century B.C. — writers, philosophers and scientists have brought fantasies to life about spaceships carrying humans to other planets, solar systems and galaxies.

Of all the potential targets, only the moon thus far has hosted Earthling “boots on the ground.” Next on most wish lists is Mars. NASA’s tentative schedule designates the first manned mission sometime around 2030.

Aside from the formidable task of designing a safe, efficient vehicle to transport people and supplies, such a mission — depending on the positions of the two planets and other logistics — would take in the neighborhood of nine months each way. Not only is that a long trip, but it would also expose the human body to ambient space radiation for close to a year. Can’t this travel time, many have asked, be cut down somehow?
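The nine-month figure can be roughly checked with a textbook estimate. The following sketch assumes a minimum-energy Hohmann transfer between circular, coplanar orbits. The article does not say which trajectory NASA would use, and real mission planning accounts for eccentric, inclined orbits and launch windows. The one-way trip time is half the period of the transfer ellipse, whose semi-major axis is the average of the two orbital radii:

```python
import math

# Assumptions (not from the article): circular, coplanar orbits and a
# minimum-energy Hohmann transfer from Earth's orbit to Mars's orbit.
MU_SUN = 1.32712440018e20   # Sun's gravitational parameter, m^3/s^2
AU = 1.495978707e11         # astronomical unit, m
R_EARTH_ORBIT = 1.000 * AU  # mean Earth-Sun distance
R_MARS_ORBIT = 1.524 * AU   # approximate mean Mars-Sun distance

def hohmann_transfer_days(r1, r2, mu=MU_SUN):
    """One-way Hohmann transfer time in days: half the transfer-ellipse period."""
    a = (r1 + r2) / 2                        # semi-major axis of the transfer ellipse
    seconds = math.pi * math.sqrt(a ** 3 / mu)  # half of Kepler's 2*pi*sqrt(a^3/mu)
    return seconds / 86400

days = hohmann_transfer_days(R_EARTH_ORBIT, R_MARS_ORBIT)
print(f"One-way transfer: {days:.0f} days (~{days / 30.44:.1f} months)")
```

Under these simplifications, this gives roughly 259 days, or about eight and a half months. That is consistent with the "nine months each way" estimate above.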

Scotland’s wind turbines generated power equivalent to all of the country’s electricity needs for four straight days, from December 23 to 26, according to new analysis from WWF Scotland.

Furthermore, on December 24, a record 74,042 megawatt-hours of wind-generated electricity was sent to the National Grid.

With Christmas Eve electricity demand at 56,089 megawatt-hours, WWF Scotland noted that wind turbines generated the “equivalent of 132 percent of Scotland’s total electricity needs that day.” The environmental group’s figures come from analysis of data provided by WeatherEnergy.
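The 132 percent figure follows directly from the two reported totals. This short check uses only the numbers quoted above and divides wind output sent to the grid by that day's demand:

```python
# Figures as reported by WWF Scotland for December 24 (Christmas Eve).
WIND_TO_GRID_MWH = 74_042   # wind-generated electricity sent to the National Grid
DEMAND_MWH = 56_089         # Scotland's total electricity demand that day

share = WIND_TO_GRID_MWH / DEMAND_MWH      # wind output relative to demand
surplus = WIND_TO_GRID_MWH - DEMAND_MWH    # generation beyond Scotland's own needs
print(f"Wind output as a share of demand: {share:.0%}")
print(f"Surplus over demand: {surplus:,} MWh")
```

This prints a share of 132% and a surplus of 17,953 MWh, the amount by which wind generation exceeded Scotland's own demand that day.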

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory have developed a new computational model of a neural circuit in the brain, which could shed light on the biological role of inhibitory neurons — neurons that keep other neurons from firing.

The model describes a neural circuit consisting of an array of input neurons and an equal number of output neurons. The circuit performs what neuroscientists call a “winner-take-all” operation, in which signals from multiple input neurons induce a signal in just one output neuron.

Using the tools of theoretical computer science, the researchers prove that, within the context of their model, a certain configuration of inhibitory neurons provides the most efficient means of enacting a winner-take-all operation. Because the model makes empirical predictions about the behavior of inhibitory neurons in the brain, it offers a good example of the way in which computational analysis could aid neuroscience.
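For readers unfamiliar with the operation itself, the sketch below shows one classic way inhibition can produce a winner-take-all outcome: a MAXNET-style network in which every unit is repeatedly suppressed by the combined activity of all the others until only the strongest survives. This is a textbook artificial-network illustration. It is not the MIT researchers' model, and it makes no claim about their inhibitory-neuron configuration. The function names and the default inhibition strength are hypothetical choices.

```python
def maxnet(inputs, epsilon=None, max_iters=1000):
    """MAXNET-style winner-take-all: each unit is repeatedly inhibited by the
    summed activity of all other units until at most one stays active."""
    m = len(inputs)
    if epsilon is None:
        # Inhibition strength must stay below 1/m for the network to converge.
        epsilon = 1.0 / (2 * m)
    x = [max(0.0, float(v)) for v in inputs]
    for _ in range(max_iters):
        if sum(1 for v in x if v > 0) <= 1:
            break
        total = sum(x)
        # Lateral inhibition: subtract epsilon times everyone else's activity.
        x = [max(0.0, v - epsilon * (total - v)) for v in x]
    return x

def winner(inputs):
    """Index of the single surviving output unit, or None (e.g. on an exact tie)."""
    survivors = [i for i, v in enumerate(maxnet(inputs)) if v > 0]
    return survivors[0] if len(survivors) == 1 else None

print(winner([0.2, 0.9, 0.5, 0.4]))
```

With these inputs, the unit at index 1 (the strongest input) is the only one left active, so the script prints `1`. With an exact tie, the tied units decay symmetrically and no single winner emerges.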