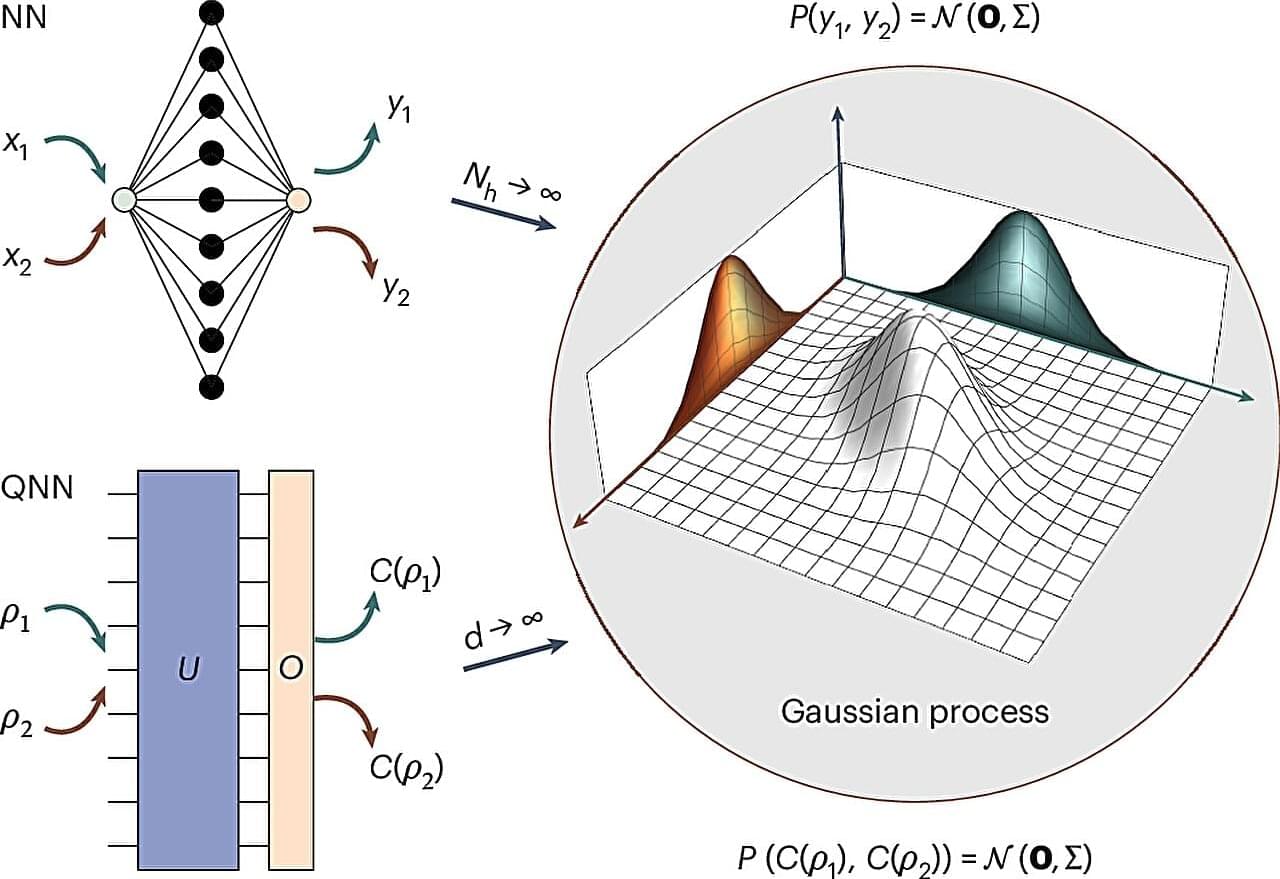

Neural networks revolutionized machine learning on classical computers, helping make possible self-driving cars, language translation and even artificial intelligence software. It is no wonder, then, that researchers wanted to bring the same power to quantum computers, but their attempts to do so ran into unforeseen problems.

Recently, however, a team at Los Alamos National Laboratory developed a new way to bring these same mathematical concepts to quantum computers by using a mathematical tool known as a Gaussian process.

“Our goal for this project was to see if we could prove that genuine quantum Gaussian processes exist,” said Marco Cerezo, the Los Alamos team’s lead scientist. “Such a result would spur innovations and new forms of performing quantum machine learning.”