Exclusive: Report from planning exercise in 2016 alerted the government to the need to stockpile PPE and set up a contact tracing system.

In the early days of the pandemic, with commercial COVID tests in short supply, Rockefeller University’s Robert B. Darnell developed an in-house assay to identify positive cases within the Rockefeller community. It turned out to be easier and safer to administer than the tests available at the time, and it has been used tens of thousands of times over the past nine months to identify and isolate infected individuals working on the university’s campus.

Now, a new study in PLOS ONE confirms that Darnell’s test performs as well as, if not better than, FDA-authorized nasal and oral swab tests. In a direct head-to-head comparison of 162 individuals who received both Rockefeller’s “DRUL” saliva test and a conventional swab test, DRUL caught all of the cases that the swabs identified as positive, plus four positive cases that the swabs missed entirely.

“This research confirms that the test we developed is sensitive and safe,” says Darnell, the Robert and Harriet Heilbrunn professor and head of the Laboratory of Molecular Neuro-Oncology. “It is inexpensive, has provided excellent surveillance within the Rockefeller community, and has the potential to improve safety in communities as the pandemic drags on.”

The UK Space Agency tweeted: “We are monitoring its re-entry together with @DefenceHQ, and there is no expectation the re-entry will cause any damage. Due to the varying input data, natural forces and associated observation error, there are always high levels of uncertainty when performing re-entry predictions on any satellite”.

“Today, a Starlink-1855 satellite re-entered the Earth’s atmosphere. There is a chance it will re-enter over the UK, and you might be able to spot the satellite as it burns up. Starlink has a fantastic track record of orchestrating safe and reliable re-entries. We do not expect the return of the satellite to cause any damage. Still, the UK Space Agency and the Ministry of Defence continually monitor and assess the re-entries of satellites and debris and any risk to British territories through our joined Space Surveillance and Tracking capabilities”.

Japan says it has scrambled fighter aircraft to intercept Chinese military drones and accompanying surveillance aircraft on three consecutive days this week as its defense forces took part in a series of readiness exercises with regional allies.

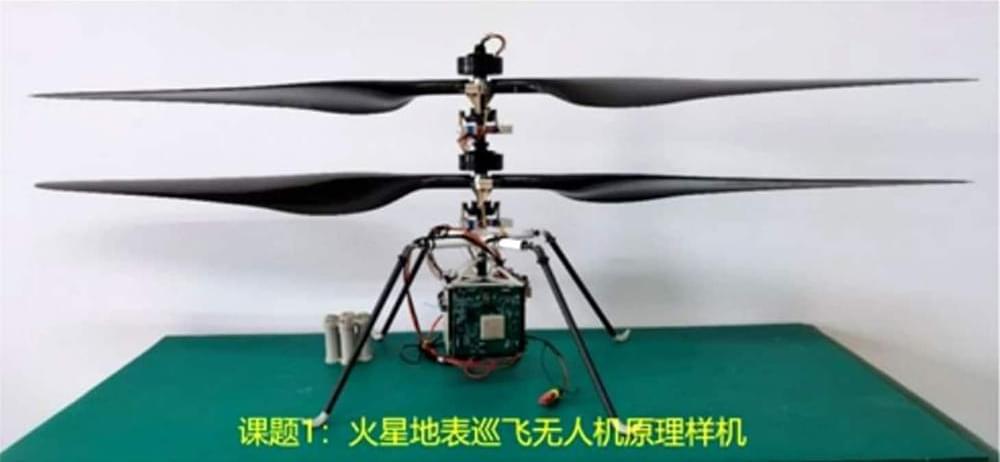

China has shown off the prototype of its “Mars cruise drone” designed for surveillance work on future Mars missions, following the historic landing of a robotic rover on the Red Planet a few months ago.

The prototype of the miniature helicopter has passed final acceptance, China’s National Space Science Center (CNNSC) announced on Wednesday. In the images shared by the science center, the prototype looks similar to NASA’s Ingenuity helicopter, developed for its Perseverance mission this year.

The Chinese prototype sports two rotor blades, a sensor-and-camera base, and four thin legs, but unlike Ingenuity it has no solar panel on top.

Drones are neat and fun and all that good stuff (I should probably add the caveat here that I’m obviously not referring to the big, terrible military variety), but when it comes to quadcopters, there’s always been the looming question of general usefulness. The consumer-facing variety is pretty much the exclusive realm of hobbyists and imaging.

We’ve seen a number of interesting applications for things like agricultural surveillance, real estate and the like, all of which are effectively extensions of that imaging capability. But a lot can be done with a camera and the right processing. One of the more interesting applications I’ve seen cropping up here and there is the warehouse drone — something perhaps a bit counterintuitive, as you likely (and understandably) associate drones with the outdoors.

Looking back, it seems we’ve actually had two separate warehouse drone companies compete in Disrupt Battlefield. There was IFM (Intelligent Flying Machines) in 2016 and Vtrus two years later. That’s really the tip of the iceberg for a big list of startups effectively pushing to bring drones to warehouses and factory floors.

“I am used to being harassed online. But this was different,” she added. “It was as if someone had entered my home, my bedroom, my bathroom. I felt so unsafe and traumatized.”

Oueiss is one of several high-profile female journalists and activists who have allegedly been targeted and harassed by authoritarian regimes in the Middle East through hack-and-leak attacks using the Pegasus spyware, created by Israeli surveillance technology company NSO Group. The spyware transforms a phone into a surveillance device, activating microphones and cameras and exporting files without a user knowing.

Military-grade spyware licensed by an Israeli firm to governments for tracking terrorists and criminals was used in attempted and successful hacks of 37 smartphones belonging to journalists, human rights activists, business executives and two women close to murdered Saudi journalist Jamal Khashoggi, according to an investigation by The Washington Post and 16 media partners.

The phones appeared on a list of more than 50,000 numbers that are concentrated in countries known to engage in surveillance of their citizens and also known to have been clients of the Israeli firm, NSO Group, a worldwide leader in the growing and largely unregulated private spyware industry, the investigation found.

The list does not identify who put the numbers on it, or why, and it is unknown how many of the phones were targeted or surveilled. But forensic analysis of the 37 smartphones shows that many display a tight correlation between time stamps associated with a number on the list and the initiation of surveillance, in some cases as brief as a few seconds.

The AIR program was run by a company called Persistent Surveillance Systems with funding from two Texas billionaires. The city police department admitted to using planes to surveil Baltimore residents in 2016 and later approved a six-month pilot program in 2020, which was active until October 31st.

The city of Baltimore’s spy plane program was unconstitutional, violating the Fourth Amendment protection against illegal search, and law enforcement in the city cannot use any of the data it gathered, a court ruled Thursday. The Aerial Investigation Research (or AIR) program, which used airplanes and high-resolution cameras to record what was happening in a 32-square-mile part of the city, was canceled by the city in February.

Local Black activist groups, with support from the ACLU, sued to prevent Baltimore law enforcement from using any of the data it had collected in the time the program was up and running. The city tried to argue the case was moot since the program had been canceled. That didn’t sit well with civil liberties activists. “Government agencies have a history of secretly using similar technology for other purposes — including to surveil Black Lives Matter protests in Baltimore in recent years,” the ACLU said in a statement Thursday.

In an en banc ruling, the US Court of Appeals for the Fourth Circuit found that “because the AIR program enables police to deduce from the whole of individuals’ movements, we hold that accessing its data is a search and its warrantless operation violates the Fourth Amendment.” Chief Judge Roger Gregory wrote that the AIR program “is like a 21st century general search, enabling the police to collect all movements,” and that “allowing the police to wield this power unchecked is anathema to the values enshrined in our Fourth Amendment.”

Live Eye Surveillance, a Seattle-based company, takes it to the next level and provides security systems to convenience stores like 7-Eleven; it employs “remote supervisors” who are real people sitting miles away behind the surveillance cameras, monitoring all activity captured by the tools.

Employers are using various surveillance technologies to track employee movement and interactions, and now 7-Eleven stores are involved in the game.