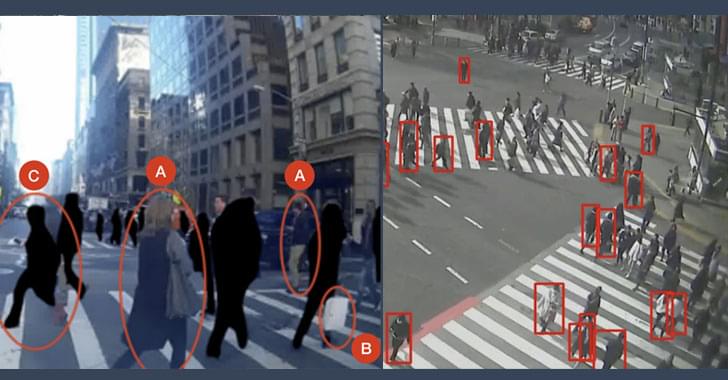

Minecraft’s Uncensored Library is exploiting a loophole in surveillance technology to sneak the news past government censors

Category: surveillance

Google: Predator spyware infected Android devices using zero-days

Google’s Threat Analysis Group (TAG) says that state-backed threat actors used five zero-day vulnerabilities to install Predator spyware developed by commercial surveillance developer Cytrox.

In these attacks, part of three campaigns that started between August and October 2021, the attackers used zero-day exploits targeting Chrome and the Android OS to install Predator spyware implants on fully up-to-date Android devices.

“We assess with high confidence that these exploits were packaged by a single commercial surveillance company, Cytrox, and sold to different government-backed actors who used them in at least the three campaigns discussed below,” said Google TAG members Clement Lecigne and Christian Resell.

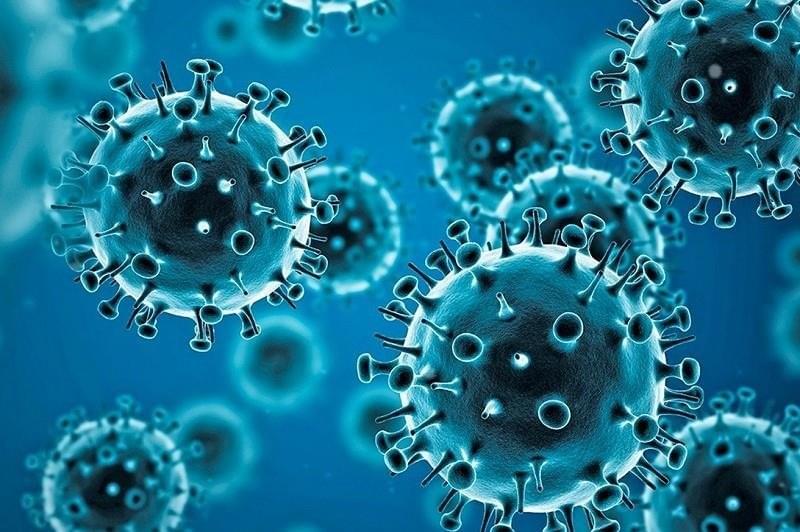

14.9 million excess deaths associated with the COVID-19 pandemic in 2020 and 2021

New estimates from the World Health Organization (WHO) show that the full death toll associated directly or indirectly with the COVID-19 pandemic (described as “excess mortality”) between 1 January 2020 and 31 December 2021 was approximately 14.9 million (range 13.3 million to 16.6 million).

“These sobering data not only point to the impact of the pandemic but also to the need for all countries to invest in more resilient health systems that can sustain essential health services during crises, including stronger health information systems,” said Dr Tedros Adhanom Ghebreyesus, WHO Director-General. “WHO is committed to working with all countries to strengthen their health information systems to generate better data for better decisions and better outcomes.”

Excess mortality is calculated as the difference between the number of deaths that have occurred and the number that would be expected in the absence of the pandemic, based on data from earlier years. It includes deaths associated with COVID-19 directly (due to the disease) or indirectly (due to the pandemic’s impact on health systems and society). Deaths linked indirectly to COVID-19 are attributable to other health conditions for which people were unable to access prevention and treatment because health systems were overburdened by the pandemic. The estimated number of excess deaths can also be influenced by deaths averted during the pandemic due to lower risks of certain events, like motor-vehicle accidents or occupational injuries.

Most of the excess deaths (84%) are concentrated in South-East Asia, Europe, and the Americas, and some 68% are concentrated in just 10 countries globally. Middle-income countries account for 81% of the 14.9 million excess deaths over the 24-month period (53% in lower-middle-income countries and 28% in upper-middle-income countries), while high-income and low-income countries account for 15% and 4%, respectively.
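
To make the definition above concrete, here is a minimal sketch of the excess-mortality arithmetic. It assumes, purely for illustration, that "expected" deaths are the plain average of earlier years; WHO's actual estimates come from a statistical model, so the helper names and all figures below are hypothetical.

```python
from statistics import fmean


def expected_deaths(earlier_years: list[int]) -> float:
    """Naive baseline (an assumption, not WHO's model):
    the mean of deaths recorded in earlier, pre-pandemic years."""
    return fmean(earlier_years)


def excess_deaths(observed: int, earlier_years: list[int]) -> float:
    """Excess mortality = deaths that occurred minus deaths expected.
    A negative result would mean fewer deaths than expected, e.g. when
    averted deaths (fewer road accidents) outweigh pandemic deaths."""
    return observed - expected_deaths(earlier_years)


# Hypothetical figures for one country and one year (not WHO data).
baseline = [100_000, 102_000, 104_000]  # deaths in three earlier years
observed_2020 = 115_000
print(excess_deaths(observed_2020, baseline))  # 13000.0
```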

The estimates for the 24-month period (2020 and 2021) include a breakdown of excess mortality by age and sex. They confirm that the global death toll was higher for men than for women (57% male, 43% female) and higher among older adults. The absolute count of excess deaths is affected by population size. The number of excess deaths per 100,000 people gives a more objective picture of the pandemic than reported COVID-19 mortality data.

“Measurement of excess mortality is an essential component to understand the impact of the pandemic. Shifts in mortality trends provide decision-makers information to guide policies to reduce mortality and effectively prevent future crises. Because of limited investments in data systems in many countries, the true extent of excess mortality often remains hidden,” said Dr Samira Asma, Assistant Director-General for Data, Analytics and Delivery at WHO. “These new estimates use the best available data and have been produced using a robust methodology and a completely transparent approach.”

“Data is the foundation of our work every day to promote health, keep the world safe, and serve the vulnerable. We know where the data gaps are, and we must collectively intensify our support to countries, so that every country has the capability to track outbreaks in real-time, ensure delivery of essential health services, and safeguard population health,” said Dr Ibrahima Socé Fall, Assistant Director-General for Emergency Response.

The production of these estimates is a result of a global collaboration supported by the work of the Technical Advisory Group for COVID-19 Mortality Assessment and country consultations. This group, convened jointly by the WHO and the United Nations Department of Economic and Social Affairs (UN DESA), consists of many of the world’s leading experts, who developed an innovative methodology to generate comparable mortality estimates even where data are incomplete or unavailable.
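
A short sketch of why a per-100,000 rate is easier to compare than an absolute count. The two countries and their numbers are hypothetical; the function simply scales excess deaths by population.

```python
def excess_per_100k(excess: float, population: int) -> float:
    """Excess deaths per 100,000 people. Multiplying before dividing
    keeps these example results exact in floating point."""
    return excess * 100_000 / population


# Hypothetical countries: A has more excess deaths in absolute terms,
# but B was hit harder relative to its population.
print(excess_per_100k(13_000, 10_000_000))  # 130.0 (country A)
print(excess_per_100k(2_000, 500_000))      # 400.0 (country B)
```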
This methodology has been invaluable because many countries still lack capacity for reliable mortality surveillance and therefore do not collect and generate the data needed to calculate excess mortality. Using the publicly available methodology, countries can use their own data to generate or update their own estimates.

“The United Nations system is working together to deliver an authoritative assessment of the global toll of lives lost from the pandemic. This work is an important part of UN DESA’s ongoing collaboration with WHO and other partners to improve global mortality estimates,” said Mr Liu Zhenmin, United Nations Under-Secretary-General for Economic and Social Affairs.

Mr Stefan Schweinfest, Director of the Statistics Division of UN DESA, added: “Data deficiencies make it difficult to assess the true scope of a crisis, with serious consequences for people’s lives. The pandemic has been a stark reminder of the need for better coordination of data systems within countries and for increased international support for building better systems, including for the registration of deaths and other vital events.”

Note for editors: The methods were developed by the Technical Advisory Group for COVID-19 Mortality Assessment, co-chaired by Professor Debbie Bradshaw and Dr Kevin McCormack, with extensive support from Professor Jon Wakefield at the University of Washington. The methods rely on a statistical model derived using information from countries with adequate data; the model is used to generate estimates for countries with little or no data available. The methods and estimates will continue to be updated as additional data become available and in consultation with countries.

Video shows Ukrainian soldier allegedly taking apart a Russian drone and discovering its regular camera

With some parts held together by duct tape.

In the raging battle between Russia and Ukraine, Russia’s small drones have been reported to be taking a deadly toll on Ukrainian forces. A new video, however, is revealing that the highly efficient precision drones are not as advanced as one might expect.

Ukraine’s Defense Ministry shared a video on Sunday that shows a soldier allegedly dismantling a Russian military surveillance drone and pointing out several highly unsophisticated features. In fact, judging by its components, the drone looks like something a schoolchild could put together.

## A generic handheld Canon DSLR

The drone being taken apart is an Orlan-10 model that fell on Ukrainian soil. The first thing the soldier points out is that the drone’s camera is a simple, generic handheld Canon DSLR, the kind any tourist might carry. How can such a complex war tool rely on such a common component?


Iris Automation adds TruWeather tech to Casia G system

Safety avionics specialist Iris Automation has made a meteorological enhancement to its Casia G ground-based surveillance system with the integration of TruWeather Solutions sensors and services – a move aiming to add climate security to the company’s aerial detect-and-avoid protection.

Adding a precision weather utility was a natural step toward Iris Automation’s wider objective of ensuring flight safety of, and between, crewed aircraft and drones. The company says local micro-weather and low-altitude atmospheric conditions often differ considerably from those at higher altitudes. That difference creates greater weather uncertainty for aerial service providers, who weigh safety factors heavily when deciding whether to fly as planned.

Drones, hackers and mercenaries — The future of war | DW Documentary

A shadow war is a war that, officially, does not exist. As mercenaries, hackers and drones take over the role armies once played, shadow wars are on the rise.

States are evading their responsibilities and driving the privatization of violence. War in the grey zone is a booming business: mercenaries and digital weaponry regularly carry out attacks, while those giving the orders remain in the shadows.

Despite its superior army, the U.S. exhausted its military resources in two seemingly endless wars. Now, the superpower is finally bringing its soldiers home. But while the U.S.’s high-tech army may have failed in Afghanistan, it continues to operate outside of official war zones. U.S. Special Forces conduct targeted killings, using drones, hacks and surveillance technologies. All of this is blurring the lines between war and peace.

The documentary also shows viewers how Russian mercenaries and hackers destabilized Ukraine. Indeed, the last decade has seen the rise of cyberspace armament. Hacking, sometimes subsidized by states, has grown into a thriving business. Digital mercenaries sell spy software to authoritarian regimes. Criminal hackers attack any target that can turn a profit for their clients.

But the classic mercenary business is also taking off, because states no longer want their official armies to go into battle. Former mercenary Sean McFate outlines how privatizing warfare creates an even greater demand for it. He warns that a world of mercenaries is a world dominated by war.
