What kind of life will it find out there?

More accurate space-weather predictions and safer satellite navigation through radiation belts could someday result from new insights into “space waves,” researchers at Embry-Riddle Aeronautical University reported.

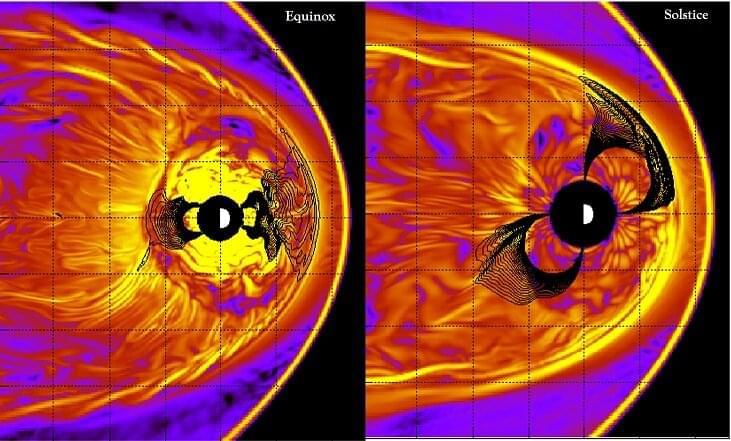

The group’s latest research, published on May 4, 2023, in the journal Nature Communications, shows that seasonal and daily variations in the Earth’s magnetic tilt, toward or away from the sun, can trigger changes in large-wavelength space waves.

These breaking waves, known as Kelvin-Helmholtz waves, occur at the boundary between the solar wind and the Earth’s magnetic shield. The waves occur much more frequently around the spring and fall seasons, researchers reported, while wave activity is low around summer and winter.

WASHINGTON — Lockheed Martin announced May 4 it is consolidating several businesses focused on space into three sectors: Commercial civil space, national security space, and strategic and missile defense.

“With an eye toward the future and building on our current business momentum, these changes position us to deliver end-to-end solutions for today’s mission demands and well into the future,” said Robert Lightfoot, executive vice president of Lockheed Martin Space.

Emerging Metaverse applications demand accessible, accurate, and easy-to-use tools for 3D digital human creation in order to depict different cultures and societies as if in the physical world. Recent large-scale vision-language advances pave the way for novices to conveniently customize 3D content. However, the generated CG-friendly assets still cannot represent the desired facial traits for human characteristics. In this paper, we present DreamFace, a progressive scheme to generate personalized 3D faces under text guidance. It enables lay users to naturally customize 3D facial assets that are compatible with CG pipelines, with desired shapes, textures, and fine-grained animation capabilities. From a text input describing the facial traits, we first introduce a coarse-to-fine scheme to generate the neutral facial geometry with a unified topology. We employ a selection strategy in the CLIP embedding space to generate coarse geometry, and subsequently optimize both the detailed displacements and normals using Score Distillation Sampling (SDS) from a generic Latent Diffusion Model (LDM). Then, for neutral appearance generation, we introduce a dual-path mechanism, which combines the generic LDM with a novel texture LDM to ensure both diversity and textural specification in the UV space. We also employ a two-stage optimization that performs SDS in both the latent and image spaces, which provides compact priors for fine-grained synthesis. Our generated neutral assets naturally support blendshapes-based facial animations. We further improve the animation ability with personalized deformation characteristics by learning a universal expression prior using a cross-identity hypernetwork and a neural facial tracker for video input. Extensive qualitative and quantitative experiments validate the effectiveness and generalizability of DreamFace.
Notably, DreamFace can generate realistic 3D facial assets with physically-based rendering quality and rich animation ability from video footage, even for fashion icons or exotic characters in cartoons and fiction movies.

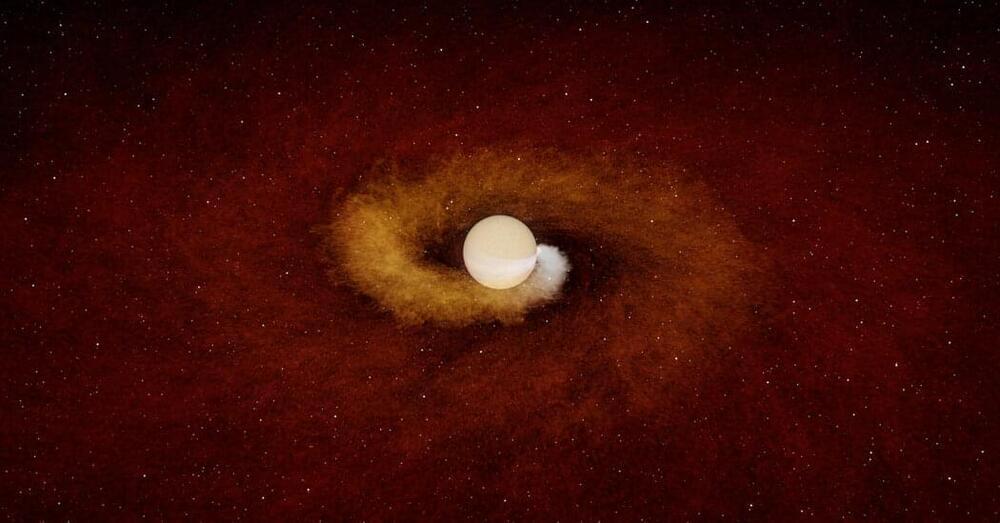

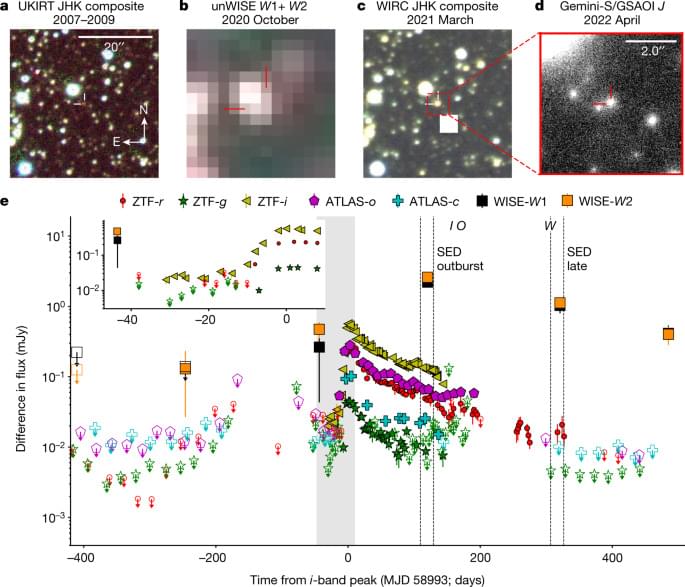

With the power of the Gemini South Adaptive Optics Imager (GSAOI) on Gemini South, one half of the International Gemini Observatory, operated by NSF’s NOIRLab, astronomers have observed the first direct evidence of a dying star expanding to engulf one of its planets. Evidence for this event was found in a telltale “long and low-energy” outburst from a star in the Milky Way about 13,000 light-years from Earth. This event, the devouring of a planet by an engorged star, likely presages the ultimate fate of Mercury, Venus, and Earth when our sun begins its death throes in about five billion years.

“These observations provide a new perspective on finding and studying the billions of stars in our Milky Way that have already consumed their planets,” says Ryan Lau, NOIRLab astronomer and co-author on this study, which is published in the journal Nature.

Researchers from the University of Aberdeen develop an AI algorithm to detect planetary craters with high accuracy, efficiency, and flexibility.

A team of scientists from the University of Aberdeen has developed a new algorithm that could revolutionize planetary studies. The new technology enables scientists to detect planetary craters and accurately map their surfaces using different data types, according to a release.

The team used a new universal crater detection algorithm (CDA) developed using the Segment Anything Model (SAM), an artificial intelligence (AI) model that can automatically identify and cut out any object in any image.


Texas-based Venus Aerospace is working with rotating-detonation propulsion technology to turn the “Stargazer” from a sci-fi concept into a Mach-9 business jet that flies at 11,110 km/h.

By Michael Verdon 02/05/2023


After several decades of hope, hype and false starts, it appears that artificial intelligence (AI) has finally gone from throwing off sparks to catching fire. Tools like DALL-E and ChatGPT have seized the spotlight and the public imagination, and this latest wave of AI appears poised to be a game-changer across multiple industries.

But what kind of impact will AI have on the 3D engineering space? Will designers and engineers see significant changes in their world and their daily workflows, and if so, what will those changes look like?