Amazon’s @Zoox is on the verge of launching a robotaxi ride-hailing service in Las Vegas. @EdLudlow went for a first ride.

Its fully autonomous vehicle is capable of smoothly traversing a 5-mile stretch just off the Las Vegas Strip.

Computing technologies are rapidly evolving and reshaping how we imagine the future. Quantum computing is taking its first toddling steps toward delivering practical results that promise unprecedented abilities. Meanwhile, artificial intelligence remains in public conversation as it’s used for everything from writing business emails to generating bespoke images or songs from text prompts to producing deepfakes.

Some physicists are exploring the opportunities that arise when the power of machine learning, a widely used approach in AI research, is brought to bear on quantum physics. Machine learning may accelerate quantum research and provide insights into quantum technologies, and quantum phenomena present formidable challenges that researchers can use to test the bounds of machine learning.

When studying quantum physics or its applications (including the development of quantum computers), researchers often rely on a detailed description of many interacting quantum particles. But the very features that make quantum computing potentially powerful also make quantum systems difficult to describe using current computers. In some instances, machine learning has produced descriptions that capture the most significant features of quantum systems while ignoring less relevant details—efficiently providing useful approximations.

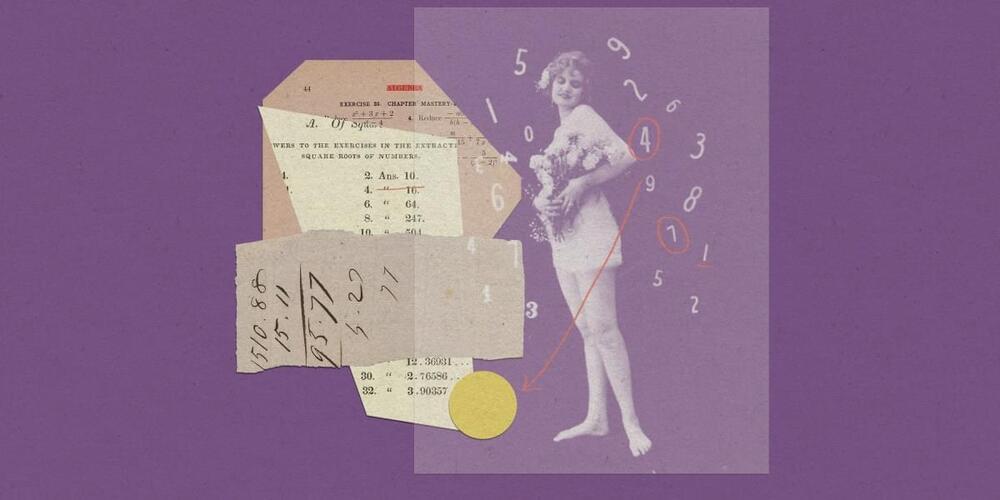

Scientists studying Large Language Models (LLMs) have found that LLMs perform similarly to humans in cognitive tasks, often making judgments and decisions that deviate from rational norms, such as risk and loss aversion. LLMs also exhibit human-like biases and errors, particularly in probability judgments and arithmetic operations tasks. These similarities suggest the potential for using LLMs as models of human cognition. However, significant challenges remain, including the extensive data LLMs are trained on and the unclear origins of these behavioural similarities.

The suitability of LLMs as models of human cognition is debated for several reasons. LLMs are trained on much larger datasets than humans encounter and may have been exposed to test questions, and value alignment processes may artificially enhance their human-like behaviors. Despite these challenges, fine-tuning LLMs, such as the LLaMA-1-65B model, on human choice datasets has improved accuracy in predicting human behavior. Prior research has also highlighted the importance of synthetic datasets in enhancing LLM capabilities, particularly in problem-solving tasks like arithmetic. Pretraining on such datasets can significantly improve performance in predicting human decisions.

Researchers from Princeton University and the University of Warwick propose enhancing the utility of LLMs as cognitive models by (i) utilizing computationally equivalent tasks that both LLMs and rational agents must master for cognitive problem-solving and (ii) examining task distributions required for LLMs to exhibit human-like behaviors. When this approach is applied to decision-making, specifically risky and intertemporal choice, Arithmetic-GPT, an LLM pretrained on an ecologically valid arithmetic dataset, predicts human behavior better than many traditional cognitive models. This pretraining suffices to align LLMs closely with human decision-making.

The expansion of the universe has been a well-established fact of physics for almost a century. By the turn of the millennium the rate of this expansion, referred to as the Hubble constant (H₀), had converged to a value of around 70 km s⁻¹ Mpc⁻¹. However, more recent measurements have given rise to a tension: whereas those derived from the cosmic microwave background (CMB) cluster around a value of 67 km s⁻¹ Mpc⁻¹, direct measurements using a local distance-ladder (such as those based on Cepheids) mostly prefer larger values around 73 km s⁻¹ Mpc⁻¹. This disagreement between early- and late-universe measurements, respectively, stands at the 4–5σ level, thereby calling for novel measurements.
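As a rough illustration of how such a tension is usually quantified, the sketch below divides the gap between two measurements by their combined uncertainty, assuming independent Gaussian errors. The central values 67 and 73 come from the text above; the uncertainties are illustrative placeholders rather than published figures, and `tension_sigma` is a hypothetical helper name.

```python
import math


def tension_sigma(value_a, sigma_a, value_b, sigma_b):
    """Gap between two measurements in units of their combined 1-sigma error.

    Assumes the two measurements are independent with Gaussian uncertainties,
    so the errors add in quadrature.
    """
    combined_sigma = math.sqrt(sigma_a ** 2 + sigma_b ** 2)
    return abs(value_a - value_b) / combined_sigma


# Central values (km/s/Mpc) are taken from the text.
# The uncertainties are ASSUMED placeholders chosen only for illustration.
late_universe = (73.0, 1.2)   # distance-ladder style measurement
early_universe = (67.0, 0.6)  # CMB-derived measurement

print(f"tension: {tension_sigma(*late_universe, *early_universe):.1f} sigma")
```

With these placeholder error bars the gap falls inside the 4–5σ range quoted above; smaller uncertainties on either measurement would push the tension higher for the same central values.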

One such source of new information is large galaxy surveys, such as the one currently being performed by the Dark Energy Spectroscopic Instrument (DESI). This Arizona-based instrument uses 5,000 individual robots that optimise the focal plane of the detector to allow it to measure 5,000 galaxies at the same time. The goal of the survey is to provide a detailed 3D map, which can be used to study the evolution of the universe by focussing on the distance between galaxies. During its first year of observation, the results of which have now been released, DESI has provided a catalogue of millions of objects.

Small fluctuations in the density of the early universe resulted not only in signatures in the CMB, as measured for example by the Planck probe, but also left imprints in the distribution of baryonic matter. Each over-dense region is thought to contain dark matter, baryonic matter and photons. The gravitational force from dark matter on the baryons is countered by radiation pressure from the photons. From the small over-densities, baryons are dragged along by photon pressure until these two types of particles decoupled during the recombination era. The original location of the over-density is surrounded by a sphere of baryonic matter, which typically is at a distance referred to as the sound horizon. The sound horizon at the moment of decoupling, denoted r_d, leaves an imprint that has since evolved to produce the density fluctuations in the universe that seeded large-scale structures.
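To show why a fixed sound horizon can act as a ruler for the expansion rate, here is a minimal sketch that converts the sound horizon into the angle it would subtend on the sky at a given redshift. It assumes a flat ΛCDM universe, a matter density of 0.3 and a sound horizon of about 147 Mpc; those numbers and the function names are assumptions for illustration, not values from the text, and holding the sound horizon fixed while varying H₀ is a simplification.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s


def comoving_distance_mpc(z, h0, omega_m=0.3, steps=1000):
    """Comoving distance to redshift z in flat LambdaCDM (trapezoidal rule).

    h0 is in km/s/Mpc; omega_m = 0.3 is an assumed matter density.
    """
    def inv_e(zp):
        # 1 / E(z), with E(z) the dimensionless expansion rate
        return 1.0 / math.sqrt(omega_m * (1 + zp) ** 3 + (1 - omega_m))

    dz = z / steps
    total = 0.5 * (inv_e(0.0) + inv_e(z))
    for i in range(1, steps):
        total += inv_e(i * dz)
    return (C_KM_S / h0) * total * dz


def bao_angle_deg(z, h0, r_d_mpc=147.0, omega_m=0.3):
    """Angle subtended by the sound horizon at redshift z (small-angle limit).

    r_d_mpc = 147 is an assumed, approximate sound-horizon scale.
    """
    return math.degrees(r_d_mpc / comoving_distance_mpc(z, h0, omega_m))


for h0 in (67.0, 73.0):
    print(f"H0 = {h0}: BAO angle at z = 0.5 is {bao_angle_deg(0.5, h0):.2f} deg")
```

In this simplified picture a larger H₀ shrinks the distance to a given redshift, so the same physical ruler appears larger on the sky. Comparing measured galaxy separations against such predictions is one way a survey can constrain the expansion history.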

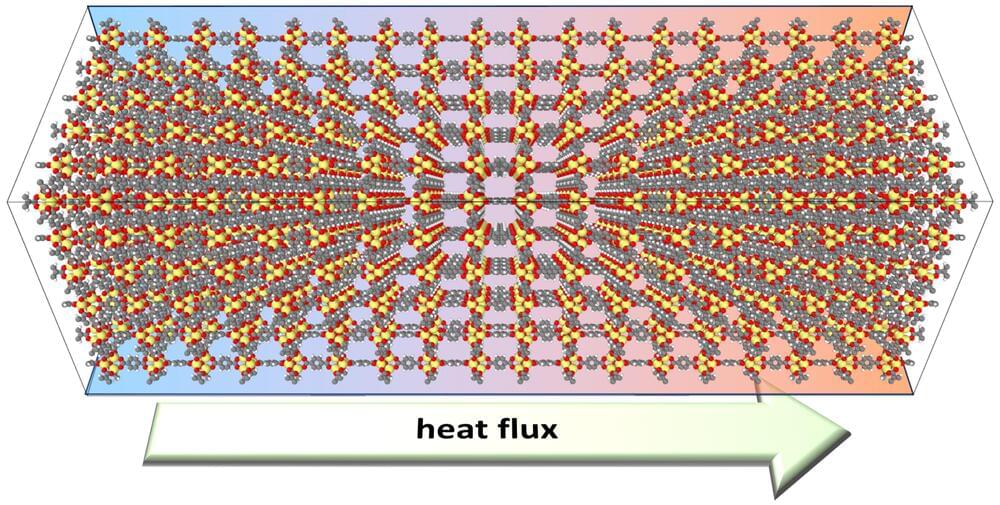

Hydrogen storage, heat conduction, gas storage, CO₂ and water sequestration: metal-organic frameworks (MOFs) have extraordinary properties due to their unique structure in the form of microporous crystals, which have a very large surface area despite their small size. This makes them extremely interesting for research and practical applications. However, MOFs are very complex systems that have so far required a great deal of time and computing power to simulate accurately.