ASML has a monopoly on extreme ultraviolet (EUV) lithography machines, the tools needed to manufacture the most advanced semiconductors, and it’s poised to gain from CHIPS Act investment.

The world’s first artificial intelligence (AI) beauty pageant has been announced, and the winner will receive over $20,000 in prizes.

The glittering world of modeling and fashion has embraced AI technology, and AI-generated models will take center stage in the beauty pageant named ‘Miss AI.’

The Miss AI contest has been announced by the World AI Creator Awards (WAICA), in partnership with Fanvue.

Meta says its assistant, Meta AI, is now one of the world’s leading AI assistants, able to boost your intelligence and lighten your load, “helping you learn, get things done, create content, and connect to make the most out of every moment.”

Meta said the assistant’s image-generation feature will be available in beta on WhatsApp and the Meta AI website. Users will see an image appear as they start typing, and Meta AI will provide prompts to help change or refine it. The images can also be animated into GIFs that users can share, CNBC reported.

The company is rolling out Meta AI in English in more than a dozen countries outside of the US. Now, people will have access to Meta AI in Australia, Canada, Ghana, Jamaica, Malawi, New Zealand, Nigeria, Pakistan, Singapore, South Africa, Uganda, Zambia, and Zimbabwe.

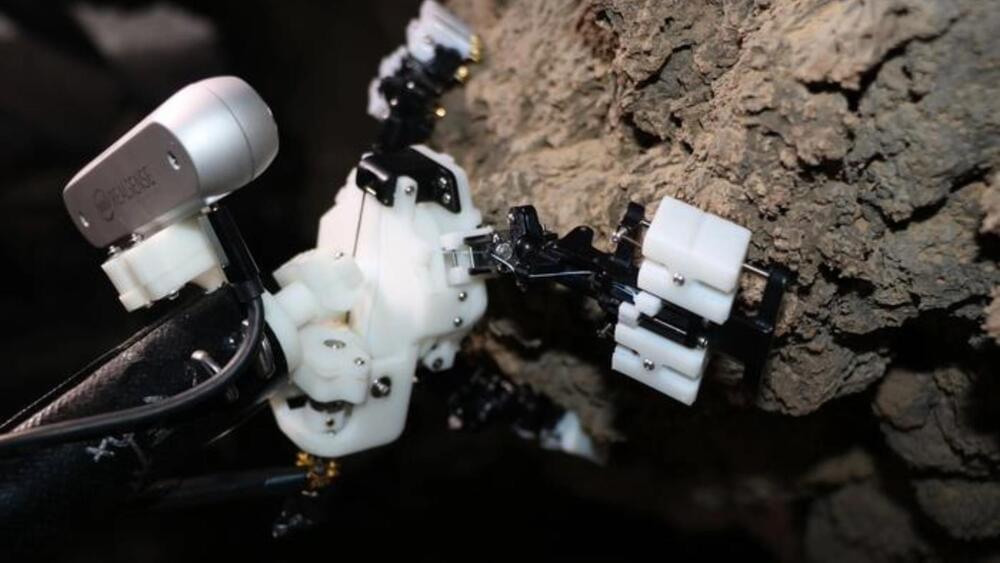

ReachBot is inspired by the movement of the harvestman, also known as a daddy-long-legs. The current model boasts a small body and long, extendable legs, or booms, equipped with grippers. These booms allow the robot to move forward.

These appendages are designed to let ReachBot navigate the narrow passages of Martian caves, searching for signs of life and key resources such as water. The extendable boom limbs carry three-finger grippers that clutch rocks and use them as anchor points.

Karl Friston, Joscha Bach, and Curt Jaimungal delve into death, neuroscientific models of AI, God, and consciousness. SPONSOR: HelloFresh: Go to https://HelloFresh.com/theoriesofever… and use code theoriesofeverythingfree for FREE breakfast for life!

TIMESTAMPS:

- 00:00:00 Introduction.

- 00:01:47 Karl and Joscha’s new paper.

- 00:09:13 Sentience vs. consciousness vs. The Self.

- 00:21:00 Self-organization, thingness, and self-evidencing.

- 00:29:02 Overlapping realities and physics as art.

- 00:41:05 Mortal computation and substrate-agnostic AI.

- 00:56:38 Beyond von Neumann architectures.

- 01:00:23 AI surpassing human researchers.

- 01:20:34 Exploring vs. exploiting (the risk of curiosity in academia).

- 01:27:02 Incompleteness and interdependence.

- 01:32:25 Defining consciousness.

- 01:53:36 Multiple overlapping consciousnesses.

- 02:03:03 Unified experience and schizophrenia.

Try out my quantum mechanics course (and many others on math and science) on Brilliant using the link https://brilliant.org/sabine. You can get started for free, and the first 200 will get 20% off the annual premium subscription.

Physicists have known since the 1990s that it’s possible to control chaotic systems without making them even more chaotic. But in the past 10 years, this field has really exploded thanks to machine learning.
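To make the 1990s idea concrete, here is a minimal Python sketch in the spirit of the Ott-Grebogi-Yorke (OGY) small-perturbation scheme. It is not the machine-learning method from the video; it assumes the logistic map x_{n+1} = r x_n (1 - x_n) at r0 = 3.9 as a stand-in chaotic system, and the names `simulate`, `r0`, and `delta_r_max` are hypothetical. The controller does nothing until the chaotic orbit happens to wander close to the unstable fixed point x* = 1 - 1/r0, then applies tiny nudges to r, computed from a linearization around x*, that keep the orbit pinned there.

```python
"""Small-perturbation control of the chaotic logistic map (an OGY-style sketch)."""


def simulate(r0: float = 3.9, x0: float = 0.3, steps: int = 10_000,
             delta_r_max: float = 0.1) -> list[float]:
    """Iterate x_{n+1} = r_n * x_n * (1 - x_n) and return the whole orbit.

    r_n = r0 + dr, where dr is nonzero only when a nudge no larger than
    delta_r_max is enough to put the next iterate onto the unstable fixed point.
    """
    x_star = 1.0 - 1.0 / r0      # unstable fixed point of the map at r0
    lam = 2.0 - r0               # df/dx at x_star, i.e. r0 * (1 - 2 * x_star)
    g = x_star * (1.0 - x_star)  # df/dr at x_star
    x = x0
    orbit = [x]
    for _ in range(steps):
        # Linearized targeting: pick dr so that
        # x_star + lam * (x - x_star) + g * dr == x_star.
        dr = -lam * (x - x_star) / g
        if abs(dr) > delta_r_max:
            dr = 0.0             # too far from x_star: let the chaotic dynamics run freely
        x = (r0 + dr) * x * (1.0 - x)
        orbit.append(x)
    return orbit


if __name__ == "__main__":
    r0 = 3.9
    free = simulate(r0, delta_r_max=0.0)         # no control at all
    controlled = simulate(r0, delta_r_max=0.1)   # nudges of at most 0.1 in r
    print("fixed point x* =", round(1.0 - 1.0 / r0, 3))
    print("free run, last 5:  ", [round(v, 3) for v in free[-5:]])
    print("controlled, last 5:", [round(v, 3) for v in controlled[-5:]])
```

Running it should show the free orbit still jumping around the unit interval while the controlled orbit sits at x* ≈ 0.744. The design choice is the point of the 1990s result: the chaos itself eventually brings the orbit near x*, so the control never has to change r by more than `delta_r_max`.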

The full video from TU Wien with the inverted double pendulum is “Double Pendulum on a Cart.”

The video with the AI-trained racing car is “NeuroRacer.”

And the full Boston Dynamics video is “Do You Love Me?”

As discussed, if we went by the definition of AI from 20 years ago, we’d probably say we are at AGI now. But the goalposts keep being moved to superiority over humans in all areas. Twenty years ago, we would have called that ASI.

Stand back and take a look at the last two years of AI progress as a whole… AI is catching up with humans so quickly, in so many areas, that frankly, we need new tests.

The U.S. Air Force Test Pilot School and the Defense Advanced Research Projects Agency (DARPA) were finalists for the 2023 Robert J. Collier Trophy, a formal acknowledgement of recent breakthroughs that have launched the machine-learning era within the aerospace industry. The teams worked together to test breakthrough artificial intelligence algorithms on the X-62A VISTA aircraft as part of DARPA’s Air Combat Evolution (ACE) program. In less than a calendar year, the teams went from the initial installation of live AI agents into the X-62A’s systems to demonstrating the first AI-versus-human within-visual-range engagements, otherwise known as dogfights. In total, the team made more than 100,000 lines of flight-critical software changes across 21 test flights. Dogfighting is a highly complex scenario, and the X-62A used it to prove that non-deterministic artificial intelligence can be used safely in aerospace.

“The X-62A is an incredible platform, not just for research and advancing the state of tests, but also for preparing the next generation of test leaders. When ensuring the capability in front of them is safe, efficient, effective and responsible, industry can look to the results of what the X-62A ACE team has done as a paradigm shift,” said Col. James Valpiani, commandant of the Test Pilot School.

“The potential for autonomous air-to-air combat has been imaginable for decades, but the reality has remained a distant dream up until now. In 2023, the X-62A broke one of the most significant barriers in combat aviation. This is a transformational moment, all made possible by breakthrough accomplishments of the X-62A ACE team,” said Secretary of the Air Force Frank Kendall. Secretary Kendall will soon take flight in the X-62A VISTA to personally witness AI in a simulated combat environment during a forthcoming test flight at Edwards Air Force Base.