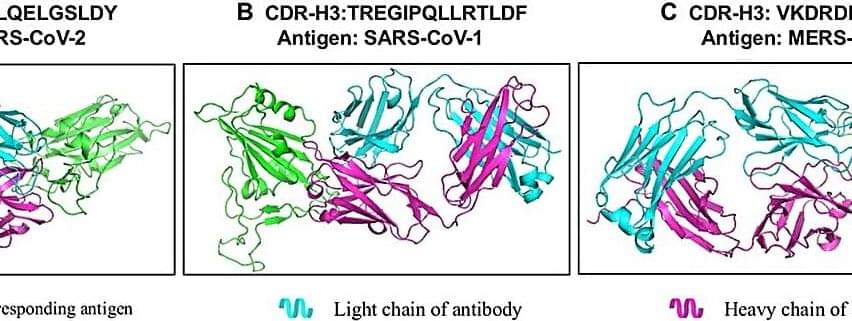

Researchers from Zhejiang University and HKUST (Guangzhou) have developed ProtET, an AI model that uses multi-modal learning to enable controllable protein editing through text-based instructions. The approach, published in Health Data Science, bridges the gap between biological language and protein sequence manipulation, supporting functional protein design across tasks such as enzyme activity, stability, and antibody binding.

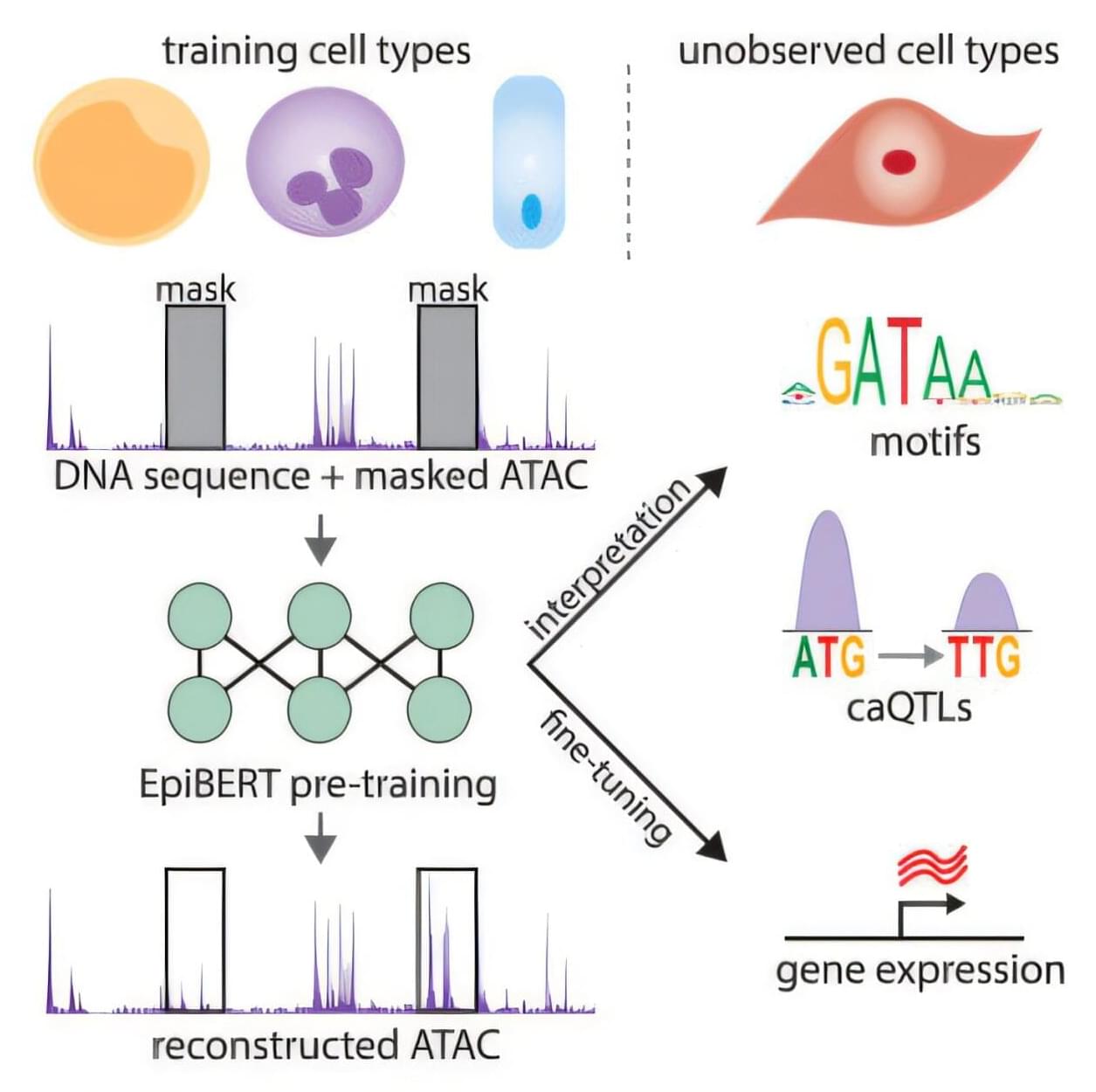

Proteins are the cornerstone of biological functions, and their precise modification holds immense potential for medical therapies, synthetic biology, and biotechnology. Whereas traditional protein editing methods rely on labor-intensive laboratory experiments and single-task optimization models, ProtET introduces a transformer-based encoder architecture and a hierarchical training paradigm. The model aligns protein sequences with natural language descriptions using contrastive learning, enabling intuitive, text-guided protein modifications.
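To make the idea of contrastive alignment concrete, here is a minimal sketch of a symmetric, CLIP-style contrastive (InfoNCE) loss over paired protein and text embeddings. The article does not give ProtET's exact loss, so this formulation is an assumption. The function names, the temperature value of 0.07, and the toy two-dimensional embeddings are illustrative and not taken from the paper.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def cross_entropy_diagonal(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def contrastive_loss(protein_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE loss: pair i of proteins should match pair i of texts.

    The temperature is a hypothetical default, not a value from the paper.
    """
    p = [l2_normalize(v) for v in protein_embs]
    t = [l2_normalize(v) for v in text_embs]
    # logits[i][j] = scaled cosine similarity of protein i and text j
    logits = [[sum(a * b for a, b in zip(pi, tj)) / temperature for tj in t]
              for pi in p]
    logits_t = [list(col) for col in zip(*logits)]  # text-to-protein direction
    return 0.5 * (cross_entropy_diagonal(logits)
                  + cross_entropy_diagonal(logits_t))


# Toy batch: two proteins and two descriptions, as made-up 2-D embeddings.
proteins = [[1.0, 0.0], [0.0, 1.0]]
aligned = contrastive_loss(proteins, [[0.9, 0.1], [0.1, 0.9]])
shuffled = contrastive_loss(proteins, [[0.1, 0.9], [0.9, 0.1]])
```

In this toy batch, `aligned` comes out lower than `shuffled`. Minimizing such a loss pulls each protein embedding toward its own description and away from the other descriptions in the batch.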

The research team, led by Mingze Yin from Zhejiang University and Jintai Chen from HKUST (Guangzhou), trained ProtET on a dataset of over 67 million protein–biotext pairs extracted from the Swiss-Prot and TrEMBL databases. The model performed strongly across key benchmarks, improving protein stability by up to 16.9% and optimizing catalytic activities and antibody-specific binding.