Boston Dynamics’ Atlas robot has long been admired for its agility, often performing feats that seem to defy the limits of humanoid robotics. Now Atlas has reached a new milestone: autonomy. Thanks to advances in both hardware and software, Atlas is no longer just a display of physical prowess. It can complete tasks independently, without pre-programmed movements or human control.

Since its unveiling in 2013, Atlas has been improved continuously, changing from a hydraulically actuated machine into a fully electric robot. This change alone marked a significant shift in its capabilities, providing better efficiency and flexibility. The progress is not limited to hardware, though: Atlas can now think on its feet. A recent demonstration video showed the robot moving objects autonomously. Atlas was given a list of locations where it needed to place engine parts. With only this instruction, the robot set to work, moving the pieces with remarkable fluidity and precision.

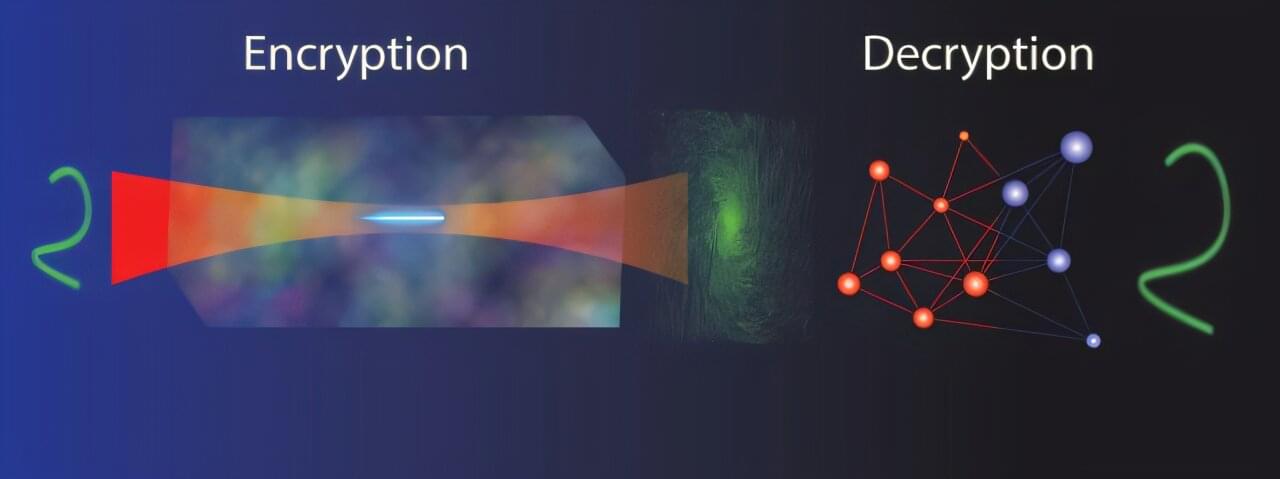

This isn’t just about lifting heavy objects. Atlas has been designed to navigate and adapt to changing environments. Machine learning has strengthened its ability to perceive and interact with the world around it. Using its enhanced vision systems, Atlas analyzes its surroundings and adjusts its actions accordingly. For example, when it had difficulty placing one of the parts, Atlas immediately recalibrated its movements, showing an impressive level of adaptability.