Buildots attaches 360-degree cameras to project managers' hard hats to capture footage inside construction sites, flag problems that need solving, and track construction progress.

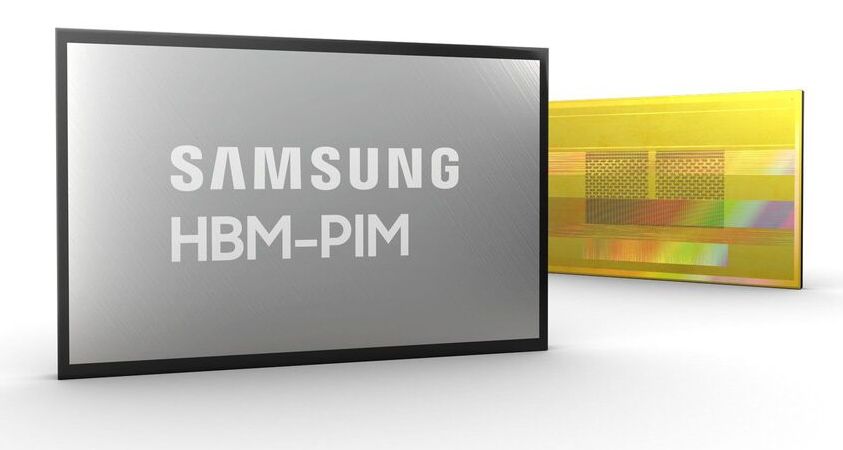

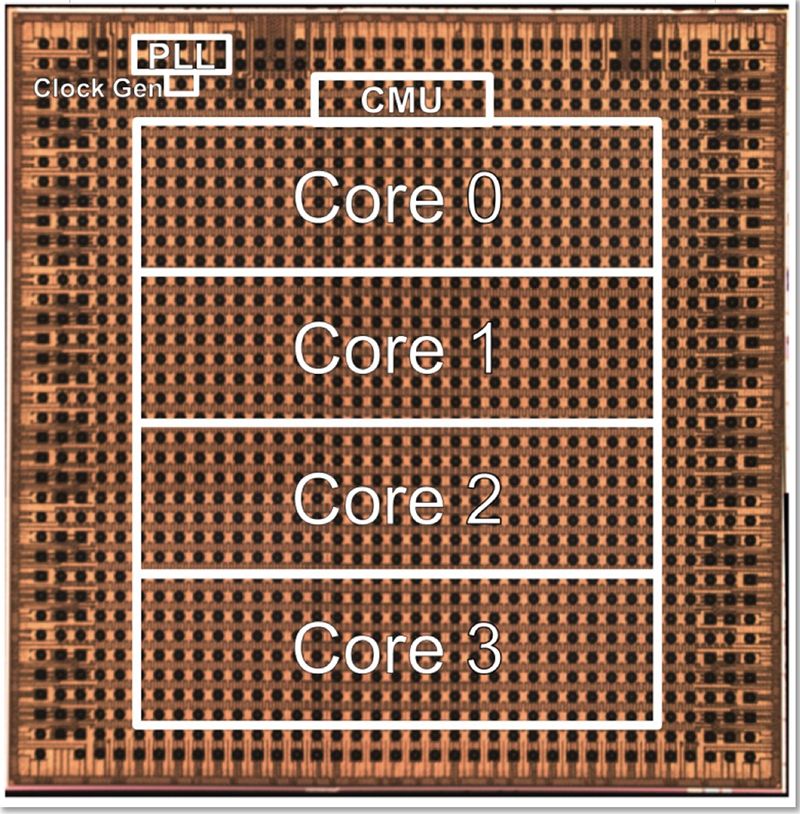

Samsung Electronics has announced on its Newsroom webpage that it has developed a new memory chip architecture: high-bandwidth memory with processing-in-memory (HBM-PIM). The architecture adds artificial intelligence processing to high-bandwidth memory chips. Samsung will market the new chips as a way to speed up data centers, boost the performance of high-performance computers, and further enable AI applications.

Mission experts will discuss the robotic scientist's touchdown in the most challenging terrain ever targeted on Mars.

Perseverance, which launched July 30, 2020, will search for signs of ancient microbial life, collect carefully selected rock and regolith (broken rock and dust) samples for future return to Earth, characterize Mars' geology and climate, and pave the way for human exploration beyond the Moon.

Tune in to watch a live broadcast from the Von Karman Auditorium at NASA’s Jet Propulsion Laboratory.

Shouldn't NASA (the National Aeronautics and Space Administration) already be building a moon base with Elon Musk's SpaceX, as well as with Russia and China? Congress should fund space travel.

Russia and China are joining forces as they prepare to sign a historic deal to build the first moon base after snubbing the US.

The two countries will collaborate on the international lunar structure, which China conceived; it is the latest bid in the space race against America.


The purpose of the International Lunar Research Station (ILRS) is to create a long-term robotic presence on the Moon by the start of the next decade and, eventually, a sustained human presence.