Category: robotics/AI – Page 1,964

Effectively using GPT-J and GPT-Neo, the GPT-3 open-source alternatives, with few-shot learning

GPT-J and GPT-Neo, the open-source alternatives to GPT-3, are among the best NLP models as of this writing. But using them effectively can take practice. Few-shot learning is an NLP technique that works very well with these models.
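As a rough illustration of few-shot learning, the prompt handed to a model such as GPT-J or GPT-Neo usually contains a handful of worked examples followed by an unanswered query that the model is expected to complete. The sketch below only builds such a prompt. The function name `build_few_shot_prompt`, the `Tweet`/`Sentiment` labels, the `###` separator, and the sample sentences are all hypothetical choices for this illustration, not something prescribed by the article or by EleutherAI.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    examples: List[Tuple[str, str]],
    query: str,
    input_label: str = "Tweet",        # hypothetical label, chosen for illustration
    output_label: str = "Sentiment",   # hypothetical label, chosen for illustration
    separator: str = "###",            # hypothetical delimiter between examples
) -> str:
    """Format labelled examples plus one unanswered query as a single prompt."""
    blocks = [
        f"{input_label}: {text}\n{output_label}: {label}"
        for text, label in examples
    ]
    # The last block leaves the output empty so the model fills it in.
    blocks.append(f"{input_label}: {query}\n{output_label}:")
    return f"\n{separator}\n".join(blocks)


# Made-up examples; a real task would use a few representative samples.
examples = [
    ("I loved the new update.", "positive"),
    ("The app keeps crashing.", "negative"),
]
prompt = build_few_shot_prompt(examples, "Setup was quick and painless.")
print(prompt)
```

The resulting string would then be sent to whichever text-generation interface is in use, typically with a short maximum output length and the separator as a stop sequence so the model returns only the missing label.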

GPT-J and GPT-Neo.

GPT-Neo and GPT-J are both open-source NLP models, created by EleutherAI (a collective of researchers working to open source AI).

Finally: Here’s America’s New 6th Generation Fighter Jet

The future of fighter jets is coming, seemingly with more international power and disruptive technologies than predicted. As the US forges ahead to become the first nation to field a sixth-generation fighter jet, other major air forces fear falling behind in the competitive race. The US, Europe, Japan, and China have invested heavily in next-level capabilities such as stealth, robust avionics, and navigation systems, each aiming to present the most technologically advanced fighter jet. But one particular trend is crucial for all 6th-gen prototypes: artificial intelligence is about to begin a new era of air combat.

Nvidia releases TensorRT 8 for faster AI inference

Nvidia today announced the release of TensorRT 8, the latest version of its software development kit (SDK) designed for AI and machine learning inference. Built for deploying AI models that can power search engines, ad recommendations, chatbots, and more, Nvidia claims that TensorRT 8 cuts inference time in half for language queries compared with the previous release of TensorRT.

Models are growing increasingly complex, and demand is on the rise for real-time deep learning applications. According to a recent O’Reilly survey, 86.7% of organizations are now considering, evaluating, or putting into production AI products. And Deloitte reports that 53% of enterprises adopting AI spent more than $20 million in 2019 and 2020 on technology and talent.

TensorRT essentially dials a model’s mathematical coordinates to a balance of the smallest model size with the highest accuracy for the system it’ll run on. Nvidia claims that TensorRT-based apps perform up to 40 times faster than CPU-only platforms during inference, and that TensorRT 8-specific optimizations allow BERT-Large — one of the most popular Transformer-based models — to run in 1.2 milliseconds.

Untether AI nabs $125M for AI acceleration chips

Untether AI, a startup developing custom-built chips for AI inferencing workloads, today announced it has raised $125 million from Tracker Capital Management and Intel Capital. The round, which was oversubscribed and included participation from Canada Pension Plan Investment Board and Radical Ventures, will be used to support customer expansion.

Increased use of AI — along with the technology’s hardware requirements — poses a challenge for traditional datacenter compute architectures. Untether is among the companies proposing at-memory or near-memory computation as a solution. Essentially, this type of hardware builds memory and logic into an integrated circuit package. In a “2.5D” near-memory compute architecture, processor dies are stacked atop an interposer that links the components and the board, incorporating high-speed memory to bolster chip bandwidth.

Founded in 2018 by CTO Martin Snelgrove, Darrick Wiebe, and Raymond Chik, Untether says it continues to make progress toward mass-producing its runAI200 chip, which boasts efficiency with computational robustness. Snelgrove and Wiebe claim that data in their architecture moves up to 1000 times faster than is typical, which would be a boon for machine learning, where datasets are frequently dozens or hundreds of gigabytes in size.

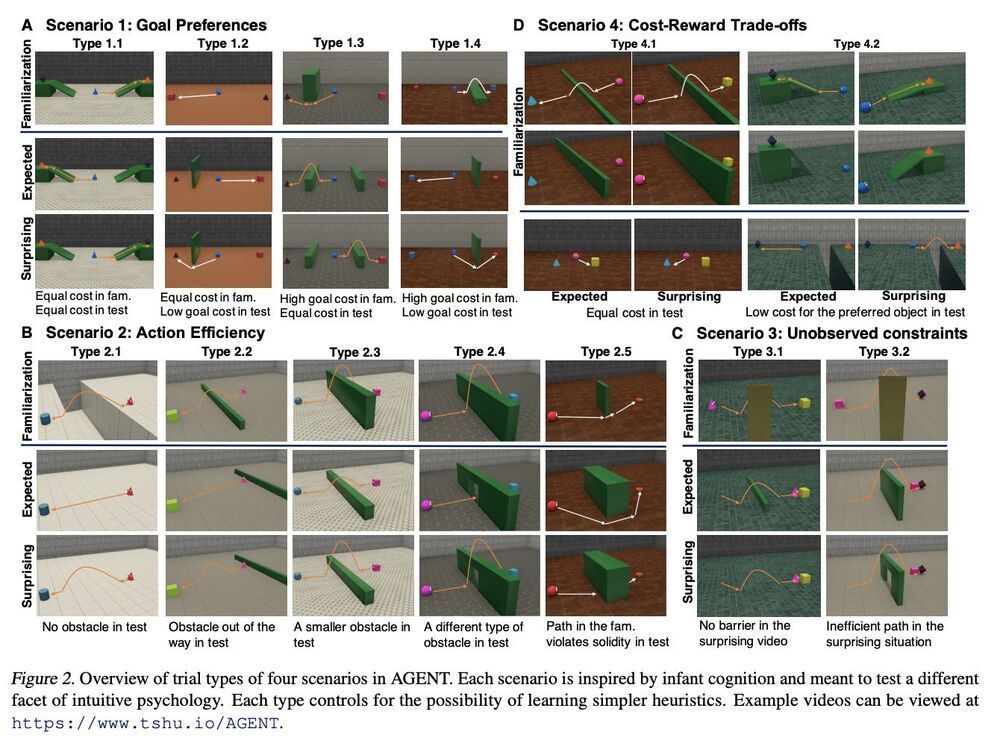

Researchers from IBM, MIT and Harvard Announced The Release Of DARPA “Common Sense AI” Dataset Along With Two Machine Learning Models At ICML 2021

Building machines that can make decisions based on common sense is no easy feat. A machine must be able to do more than merely find patterns in data; it also needs a way of interpreting the intentions and beliefs behind people’s choices.

At the 2021 International Conference on Machine Learning (ICML), researchers from IBM, MIT, and Harvard University released a DARPA “Common Sense AI” dataset for benchmarking AI intuition. They are also releasing two machine learning models that represent different approaches to the problem. Both rely on testing techniques that psychologists use to study infants’ behavior, with the goal of accelerating the development of AI that exhibits common sense.

This research work is the first of its kind to use psychology to create more fluid and better AI systems. It aims to develop machine common sense grounded in innate human qualities such as intuition, common knowledge, and an understanding of social cues.

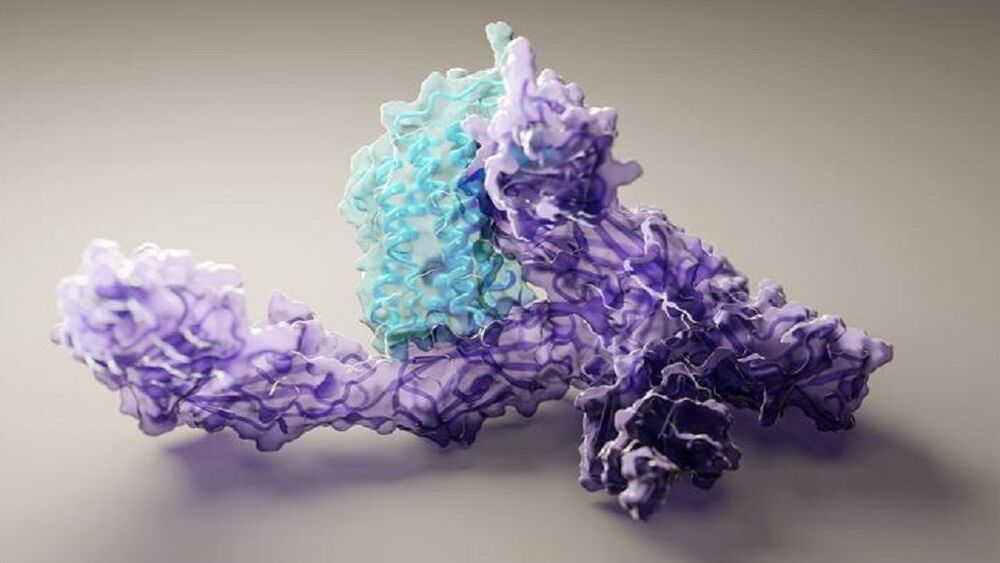

New Protein Folding AI Just Made a ‘Once In a Generation’ Advance in Biology

The tool next examines how one of a protein’s amino acids interacts with another within the same protein, for example, by examining the distance between two distant building blocks. It’s like comparing the distance between your hands and feet when fully stretched out with the distance between them in a backbend, as you “fold” into a yoga pose.

Finally, the third track looks at 3D coordinates of each atom that makes up a protein building block—kind of like mapping the studs on a Lego block—to compile the final 3D structure. The network then bounces back and forth between these tracks, so that one output can update another track.

The end results came close to those of DeepMind’s tool, AlphaFold2, which matched the gold standard of structures obtained from experiments. Although RoseTTAFold wasn’t as accurate as AlphaFold2, it seemingly required much less time and energy. For a simple protein, the algorithm was able to solve the structure using a gaming computer in about 10 minutes.

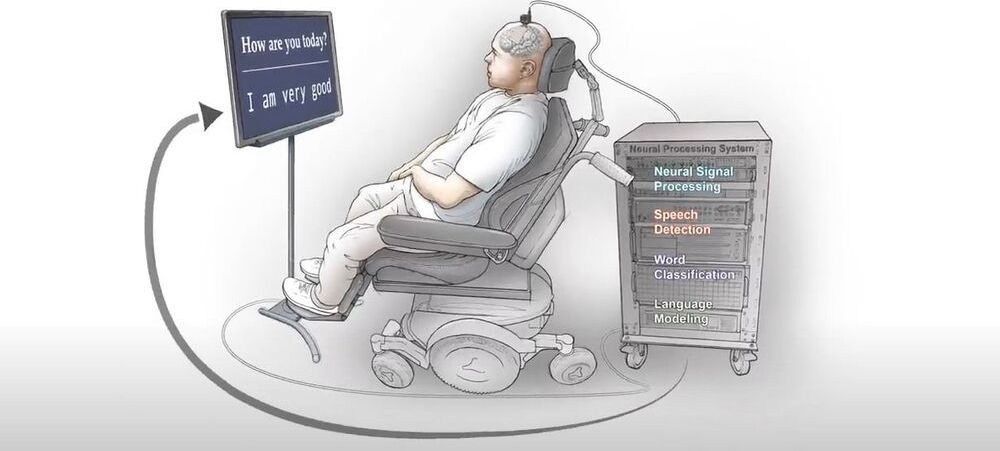

Transforming Brain Waves into Words with AI

New research out of the University of California, San Francisco has given a paralyzed man the ability to communicate by translating his brain signals into computer-generated writing. The study, published in The New England Journal of Medicine, marks a significant milestone toward restoring communication for people who have lost the ability to speak.

“To our knowledge, this is the first successful demonstration of direct decoding of full words from the brain activity of someone who is paralyzed and cannot speak,” Edward Chang, senior author and the Joan and Sanford Weill Chair of Neurological Surgery at UCSF, said in a press release. “It shows strong promise to restore communication by tapping into the brain’s natural speech machinery.”

Some people with speech limitations use assistive devices, such as touchscreens, keyboards, or speech-generating computers, to communicate. However, every year thousands lose their speech ability from paralysis or brain damage, leaving them unable to use assistive technologies.

A 3D-printed soft robotic hand that can play Nintendo

A team of researchers from the University of Maryland has 3D printed a soft robotic hand that is agile enough to play Nintendo’s Super Mario Bros. — and win!

The feat, highlighted on the front cover of the latest issue of Science Advances, demonstrates a promising innovation in the field of soft robotics, which centers on creating new types of flexible, inflatable robots that are powered using water or air rather than electricity. The inherent safety and adaptability of soft robots have sparked interest in their use for applications like prosthetics and biomedical devices. Unfortunately, controlling the fluids that make these soft robots bend and move has been especially difficult, until now.

The key breakthrough by the team, led by University of Maryland assistant professor of mechanical engineering Ryan D. Sochol, was the ability to 3D print fully assembled soft robots with integrated fluidic circuits in a single step.